Effective prevention of semantic drift as angular distance in memory-less continual deep neural networks

Paper and Code

Dec 16, 2021

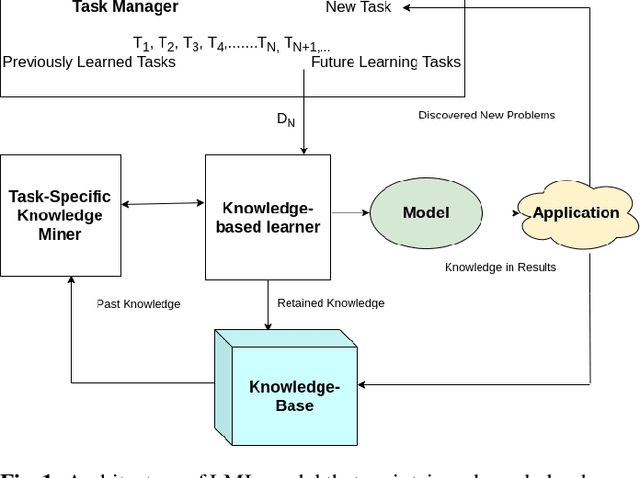

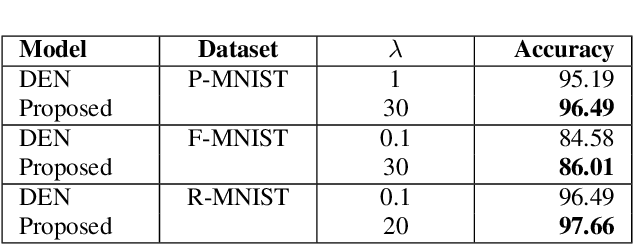

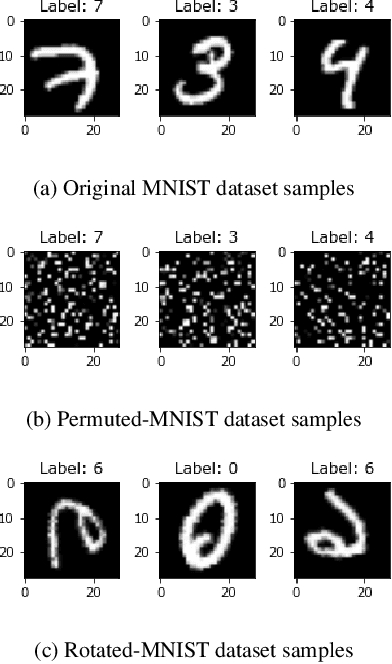

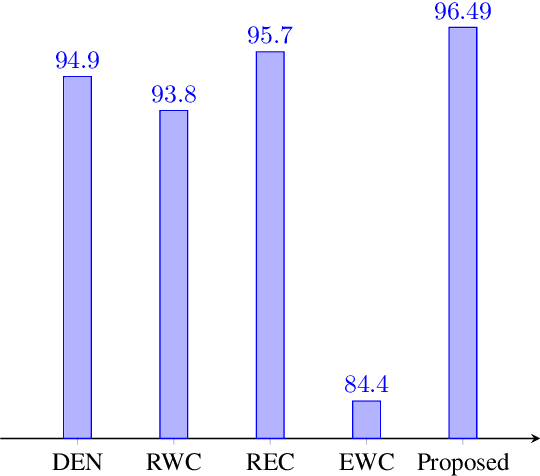

Lifelong machine learning or continual learning models attempt to learn incrementally by accumulating knowledge across a sequence of tasks. Therefore, these models learn better and faster. They are used in various intelligent systems that have to interact with humans or any dynamic environment e.g., chatbots and self-driving cars. Memory-less approach is more often used with deep neural networks that accommodates incoming information from tasks within its architecture. It allows them to perform well on all the seen tasks. These models suffer from semantic drift or the plasticity-stability dilemma. The existing models use Minkowski distance measures to decide which nodes to freeze, update or duplicate. These distance metrics do not provide better separation of nodes as they are susceptible to high dimensional sparse vectors. In our proposed approach, we use angular distance to evaluate the semantic drift in individual nodes that provide better separation of nodes and thus better balancing between stability and plasticity. The proposed approach outperforms state-of-the art models by maintaining higher accuracy on standard datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge