EEG to fMRI Synthesis: Is Deep Learning a candidate?


Sep 29, 2020

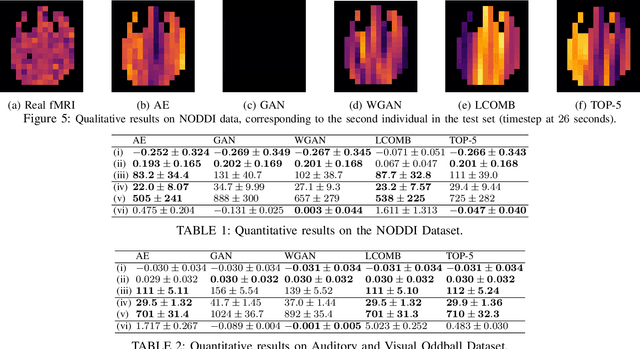

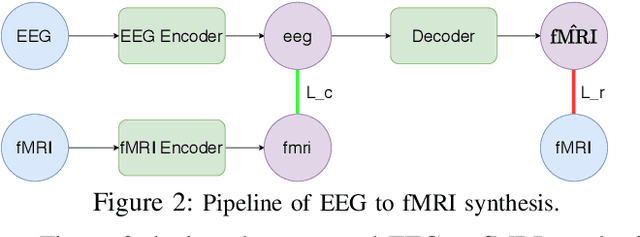

Advances in signal, image and video generation underlie major breakthroughs in generative medical imaging tasks, including Brain Image Synthesis. Still, the extent to which functional Magnetic Resonance Imaging (fMRI) can be mapped from brain electrophysiology remains largely unexplored. This work provides the first comprehensive view on how to use state-of-the-art principles from Neural Processing to synthesize fMRI data from electroencephalographic (EEG) data. Given the distinct spatiotemporal nature of haemodynamic and electrophysiological signals, this problem is formulated as the task of learning a mapping function between multivariate time series with highly dissimilar structures. A comparison of state-of-the-art synthesis approaches, including Autoencoders, Generative Adversarial Networks and Pairwise Learning, is undertaken. Results highlight the feasibility of EEG to fMRI brain image mappings, pinpointing the role of current advances in Machine Learning and showing the relevance of upcoming contributions to further improve performance. EEG to fMRI synthesis offers a way to enhance and augment brain image data, and to guarantee access to more affordable, portable and long-lasting protocols of brain activity monitoring. The code used in this manuscript is available on GitHub and the datasets are open source.
