EDLaaS; Fully Homomorphic Encryption Over Neural Network Graphs

Oct 26, 2021

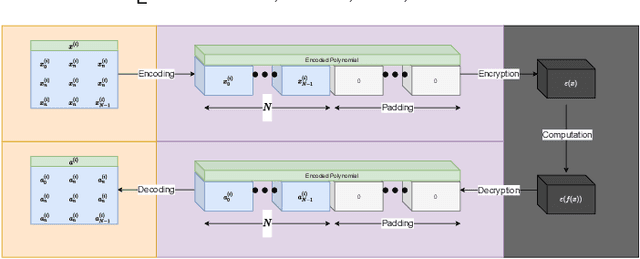

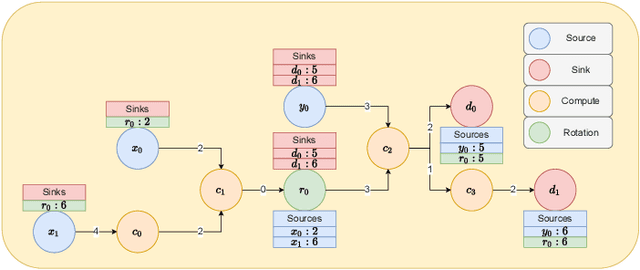

We present automatically parameterised Fully Homomorphic Encryption (FHE) for encrypted neural network inference. We demonstrate inference over FHE-compatible neural networks using our own open-source framework and reproducible step-by-step examples. We use the fourth-generation Cheon, Kim, Kim and Song (CKKS) FHE scheme over fixed points, as provided by the Microsoft Simple Encrypted Arithmetic Library (MS-SEAL). We significantly enhance the usability and applicability of FHE in deep learning contexts, with a focus on the constituent graphs, their traversal, and optimisation. We find that FHE is not a panacea for all privacy-preserving machine learning (PPML) problems, and that certain limitations remain, such as model training. However, we also find that in certain contexts FHE is well suited to computing completely private predictions with neural networks. We focus on convolutional neural networks (CNNs), Fashion-MNIST, and levelled FHE operations. The ability to compute sensitive problems privately and more easily, while lowering the barriers to entry, can allow fields that are otherwise too sensitive to begin taking advantage of performant third-party neural networks. Lastly, we show encrypted deep learning applied to a sensitive real-world problem in agri-food, and how this can have a large positive impact on food waste and encourage much-needed data sharing.
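To make the idea of parameterising levelled CKKS from a network graph more concrete, the following is a minimal sketch and not the framework's actual implementation. It assumes a hypothetical graph encoding (node name mapped to an operation and its inputs), assumes every `mul` node consumes one level, and follows the common MS-SEAL convention of 60-bit outer primes with one 40-bit prime per rescale. The bit limits in `MAX_BITS_128` are the 128-bit security bounds as we understand MS-SEAL to report them; treat them as an assumption to verify against the library.

```python
from typing import Dict, List, Tuple

# Hypothetical graph encoding: node name -> (operation, input node names).
Graph = Dict[str, Tuple[str, List[str]]]

# Assumed 128-bit security limits on total coefficient modulus bits,
# keyed by polynomial modulus degree (verify against MS-SEAL before use).
MAX_BITS_128 = {4096: 109, 8192: 218, 16384: 438, 32768: 881}


def multiplicative_depth(graph: Graph, output: str) -> int:
    """Return the longest chain of multiplications leading to `output`."""
    cache: Dict[str, int] = {}

    def depth(node: str) -> int:
        if node in cache:
            return cache[node]
        op, inputs = graph[node]
        # Depth of a node is the deepest input, plus one if it multiplies.
        d = max((depth(i) for i in inputs), default=0)
        if op == "mul":
            d += 1
        cache[node] = d
        return d

    return depth(output)


def coeff_modulus_bits(depth: int, scale_bits: int = 40,
                       outer_bits: int = 60) -> List[int]:
    """One outer prime, one prime per rescale, and one special prime."""
    return [outer_bits] + [scale_bits] * depth + [outer_bits]


def smallest_poly_modulus_degree(bits: List[int]) -> int:
    """Pick the smallest degree whose assumed bit budget fits the chain."""
    total = sum(bits)
    for degree in sorted(MAX_BITS_128):
        if total <= MAX_BITS_128[degree]:
            return degree
    raise ValueError(f"{total} bits exceeds every assumed budget")


# Toy CNN-like graph: weights multiply, biases add, activation is a square.
toy_graph: Graph = {
    "x": ("input", []),
    "conv": ("mul", ["x"]),
    "bias": ("add", ["conv"]),
    "square": ("mul", ["bias", "bias"]),
    "dense": ("mul", ["square"]),
    "y": ("add", ["dense"]),
}

toy_depth = multiplicative_depth(toy_graph, "y")
toy_bits = coeff_modulus_bits(toy_depth)
toy_degree = smallest_poly_modulus_degree(toy_bits)
```

In this toy case the traversal finds three multiplications in sequence, so the chain needs three rescaling primes, and the total bit count decides the polynomial modulus degree. A real parameteriser would also need to account for scale management, rotations, and precision, which this sketch ignores.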
