Dynamical Deep Generative Latent Modeling of 3D Skeletal Motion

Paper and Code

Jun 18, 2021

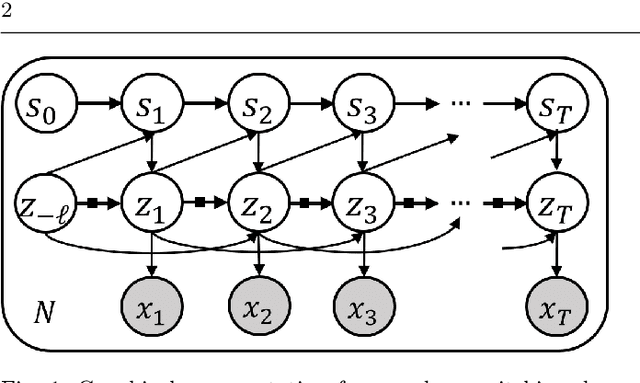

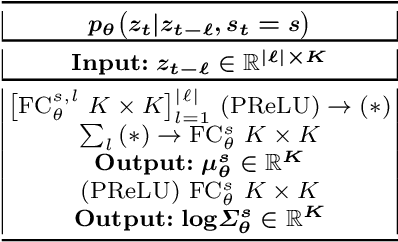

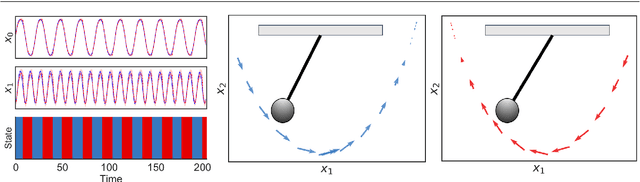

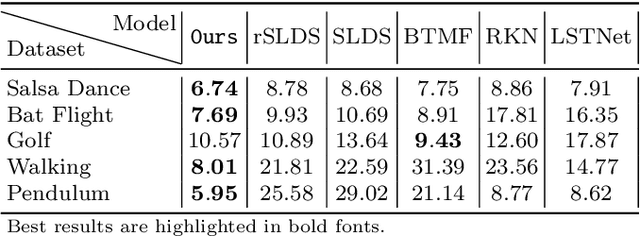

In this paper, we propose a Bayesian switching dynamical model for segmentation of 3D pose data over time that uncovers interpretable patterns in the data and is generative. Our model decomposes highly correlated skeleton data into a set of few spatial basis of switching temporal processes in a low-dimensional latent framework. We parameterize these temporal processes with regard to a switching deep vector autoregressive prior in order to accommodate both multimodal and higher-order nonlinear inter-dependencies. This results in a dynamical deep generative latent model that parses the meaningful intrinsic states in the dynamics of 3D pose data using approximate variational inference, and enables a realistic low-level dynamical generation and segmentation of complex skeleton movements. Our experiments on four biological motion data containing bat flight, salsa dance, walking, and golf datasets substantiate superior performance of our model in comparison with the state-of-the-art methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge