DSM Refinement with Deep Encoder-Decoder Networks


Dec 14, 2020

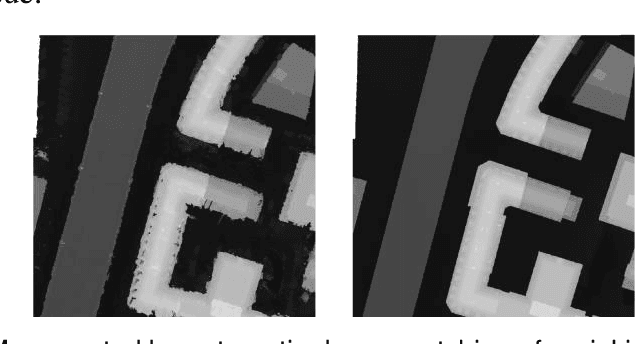

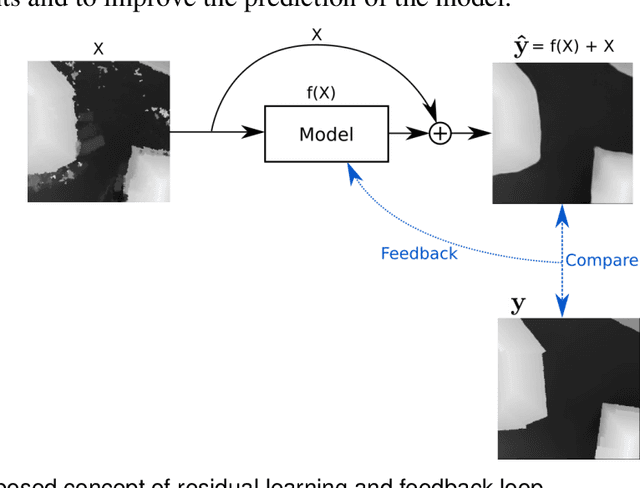

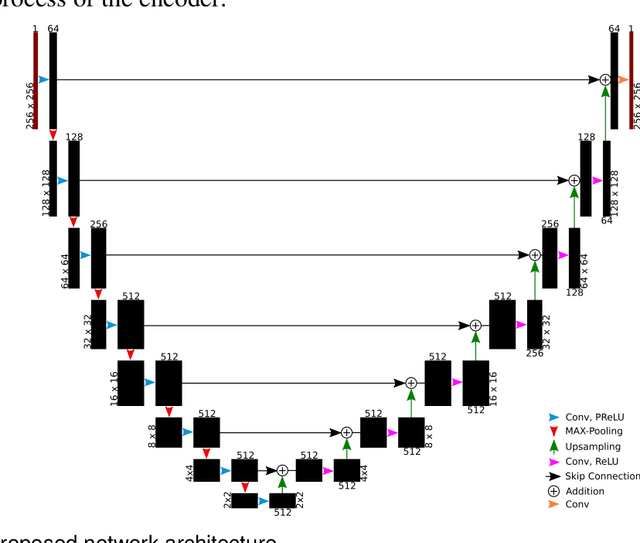

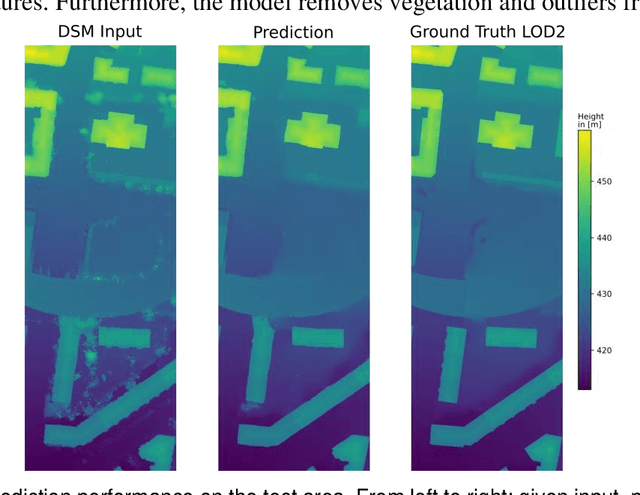

3D city models can be generated from aerial images. However, the calculated digital surface models (DSMs) suffer from noise, artefacts, and data holes that have to be cleaned up manually in a time-consuming process. This work presents an approach that refines such DSMs automatically. The key idea is to teach a neural network the characteristics of urban areas from reference data. To this end, a loss function consisting of an L1 norm and a feature loss is proposed, where the features are computed with a pre-trained image classification network. To learn to update the height maps, the network architecture combines deep residual learning with an encoder-decoder structure. The results show that this combination is highly effective in preserving the relevant geometric structures while removing the undesired artefacts and noise.
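The combined loss and the residual update described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The abstract does not specify the feature extractor, the norm used in the feature loss, or the weighting between the two terms, so the following are all assumptions made for illustration: `toy_features` (simple height gradients standing in for pre-trained classification features), the mean absolute feature difference, the `feature_weight` parameter, and the `predict_update` callable that stands in for the encoder-decoder network.

```python
import numpy as np


def toy_features(height_map):
    """Hypothetical stand-in for pre-trained network features.

    Returns horizontal and vertical height differences. The paper uses
    features from a pre-trained image classification network instead.
    """
    grad_x = height_map[:, 1:] - height_map[:, :-1]
    grad_y = height_map[1:, :] - height_map[:-1, :]
    return [grad_x, grad_y]


def l1_loss(pred, ref):
    """Mean absolute height difference between prediction and reference."""
    return float(np.mean(np.abs(pred - ref)))


def feature_loss(pred, ref, extractor=toy_features):
    """Distance between feature maps of prediction and reference.

    Assumption: mean absolute difference, summed over feature maps.
    """
    pred_feats = extractor(pred)
    ref_feats = extractor(ref)
    return float(sum(np.mean(np.abs(p - r)) for p, r in zip(pred_feats, ref_feats)))


def combined_loss(pred, ref, feature_weight=1.0):
    """L1 term plus weighted feature term (the weight is an assumption)."""
    return l1_loss(pred, ref) + feature_weight * feature_loss(pred, ref)


def refine(noisy_dsm, predict_update):
    """Residual learning: the network predicts an update to the input,
    and the refined DSM is the input plus that update."""
    return noisy_dsm + predict_update(noisy_dsm)
```

With these toy features, a constant height offset changes only the L1 term, while a local spike changes both terms. That illustrates why a structure-sensitive term can complement a purely per-pixel one.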
