DROP: Distributional and Regular Optimism and Pessimism for Reinforcement Learning

Paper and Code

Oct 22, 2024

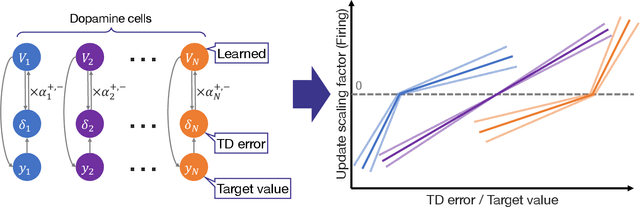

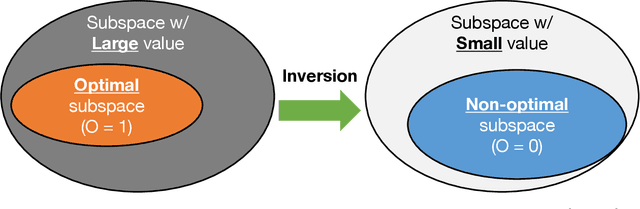

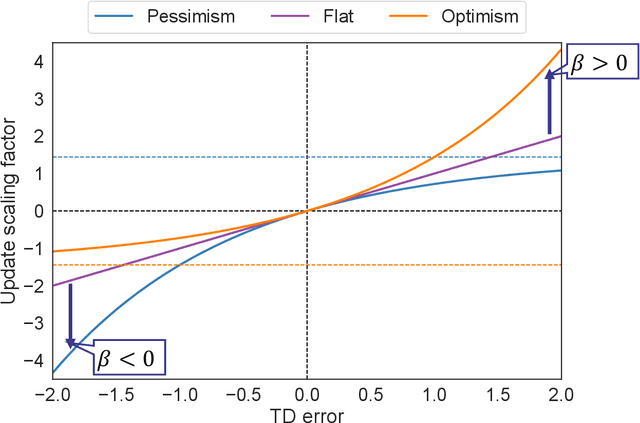

In reinforcement learning (RL), the temporal difference (TD) error is known to be related to the firing rate of dopamine neurons. It has been observed that dopamine neurons do not behave uniformly; instead, each responds to the TD error in an optimistic or pessimistic manner, which has been interpreted as a kind of distributional RL. To explain such biological data, a heuristic model has been designed with asymmetric learning rates for positive and negative TD errors. However, this heuristic model is not theoretically grounded, and it is unknown whether it can work as an RL algorithm. This paper therefore introduces a novel, theoretically grounded model with optimism and pessimism, derived from control as inference. In combination with ensemble learning, a distributional value function serving as the critic is estimated from regularly introduced optimism and pessimism. The policy in the actor is then improved based on the central value of this distribution. The proposed algorithm, called DROP (distributional and regular optimism and pessimism), is compared with the heuristic model on dynamic tasks. Although the heuristic model showed poor learning performance, DROP performed excellently on all tasks, indicating high generality. These results suggest that DROP is a new model that can elicit the potential contributions of optimism and pessimism.
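To make the idea of asymmetric learning rates concrete, the following is a minimal sketch of the heuristic model mentioned in the abstract, not of DROP itself, whose derivation from control as inference is not given here. The function names, the `taus` optimism levels, the `base_lr` scaling, and the toy Bernoulli reward are all assumptions chosen for illustration.

```python
import random


def asymmetric_td_update(value, reward, next_value, alpha_pos, alpha_neg, gamma=0.99):
    """One TD update with separate learning rates for positive and negative TD errors.

    Hypothetical helper: alpha_pos > alpha_neg gives an optimistic learner,
    alpha_pos < alpha_neg a pessimistic one.
    """
    td_error = reward + gamma * next_value - value
    alpha = alpha_pos if td_error > 0 else alpha_neg
    return value + alpha * td_error


def run_ensemble(taus, base_lr=0.05, steps=20000, seed=0):
    """Train one value estimate per optimism level tau on a toy one-step task.

    Assumed setup: rewards are 0 or 1 with equal probability and episodes end
    after one step, so next_value is 0 and gamma is irrelevant.
    """
    rng = random.Random(seed)
    values = [0.0 for _ in taus]
    for _ in range(steps):
        reward = rng.choice([0.0, 1.0])
        for i, tau in enumerate(taus):
            values[i] = asymmetric_td_update(
                values[i], reward, 0.0,
                alpha_pos=base_lr * tau,          # larger tau: react more to good news
                alpha_neg=base_lr * (1.0 - tau),  # smaller tau: react more to bad news
                gamma=0.0,
            )
    return values


if __name__ == "__main__":
    taus = [0.1, 0.5, 0.9]  # pessimistic, neutral, optimistic
    for tau, v in zip(taus, run_ensemble(taus)):
        print(f"tau={tau:.1f} value={v:.3f}")
```

In this toy setting the pessimistic learner settles on a lower estimate than the neutral one, and the optimistic learner on a higher one, so the ensemble as a whole sketches the spread of the return distribution. Taking the mean or median across the ensemble would be one plausible stand-in for a "central value", though the abstract does not specify how DROP computes it.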