Dress like a Star: Retrieving Fashion Products from Videos


Oct 19, 2017

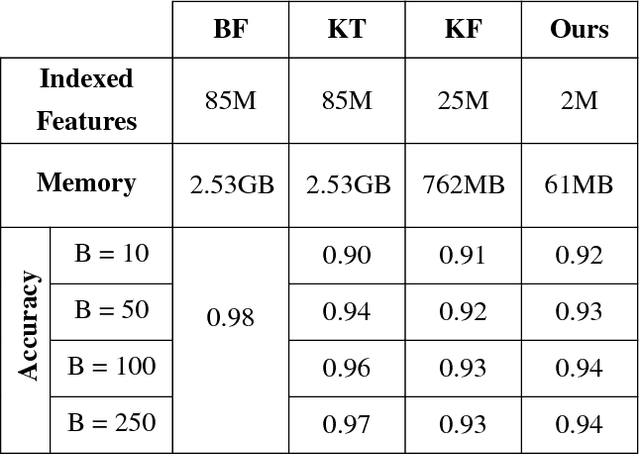

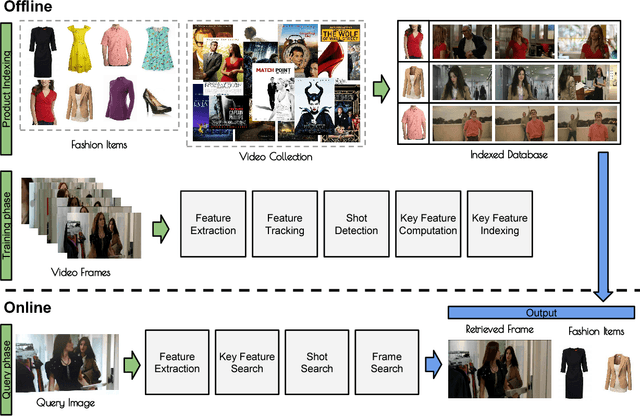

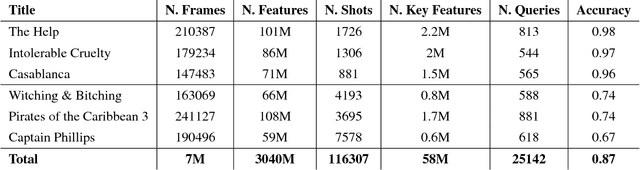

This work proposes a system for retrieving clothing and fashion products from video content. Although films and television are the perfect showcase for fashion brands to promote their products, spectators are not always aware of where to buy the latest trends they see on screen. Here, a framework for bridging the gap between users and the fashion products shown in videos is presented. By relating clothing items and video frames in an indexed database and performing frame retrieval with temporal aggregation and fast indexing techniques, we can find fashion products from videos in a simple and non-intrusive way. Experiments on a large-scale dataset show that, with the proposed framework, memory requirements can be reduced by 42.5× with respect to linear search, while accuracy is maintained at around 90%.
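The retrieval idea described above can be illustrated with a minimal sketch. It is not the authors' implementation: the binary frame signatures, the toy `FRAME_INDEX` and `PRODUCTS` tables, and the majority-vote aggregation are all assumptions made for illustration, and a plain scan over the index stands in for whatever fast indexing structure the framework actually uses.

```python
from collections import Counter

# Hypothetical toy index: (binary frame signature, video id, timestamp in seconds).
FRAME_INDEX = [
    (0b10110010, "movie_a", 10),
    (0b10110011, "movie_a", 11),
    (0b01001100, "movie_b", 5),
    (0b01001101, "movie_b", 6),
]

# Hypothetical mapping from a video to (start, end, product) time ranges.
PRODUCTS = {
    "movie_a": [(0, 20, "red dress")],
    "movie_b": [(0, 10, "leather jacket")],
}


def hamming(a, b):
    """Number of differing bits between two integer signatures."""
    return bin(a ^ b).count("1")


def nearest_frame(signature):
    """Closest indexed frame by Hamming distance (plain scan, for clarity only)."""
    return min(FRAME_INDEX, key=lambda entry: hamming(signature, entry[0]))


def retrieve_products(query_signatures):
    """Match each query frame, then aggregate the matches over time."""
    matches = [nearest_frame(s) for s in query_signatures]
    # Temporal aggregation (assumed here to be a majority vote over the video id).
    votes = Counter(video for _, video, _ in matches)
    video = votes.most_common(1)[0][0]
    # Use the median timestamp among the matches that agree with the vote.
    times = sorted(t for _, v, t in matches if v == video)
    t = times[len(times) // 2]
    return [name for start, end, name in PRODUCTS[video] if start <= t <= end]
```

In this sketch, a short query clip is represented by a few frame signatures; a single mismatched frame is outvoted by the others, which is the intuition behind aggregating over time rather than trusting one frame.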
