Does Machine Bring in Extra Bias in Learning? Approximating Fairness in Models Promptly

Paper and Code

May 15, 2024

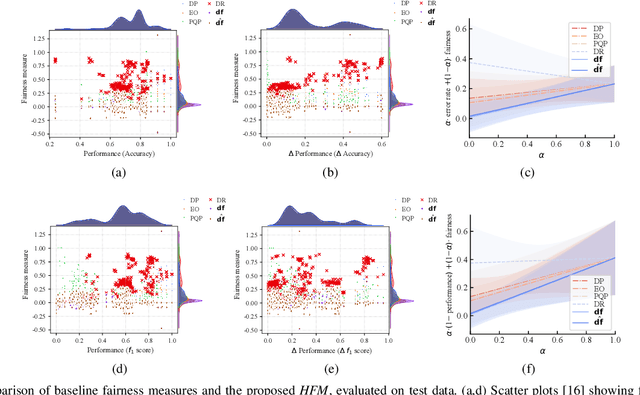

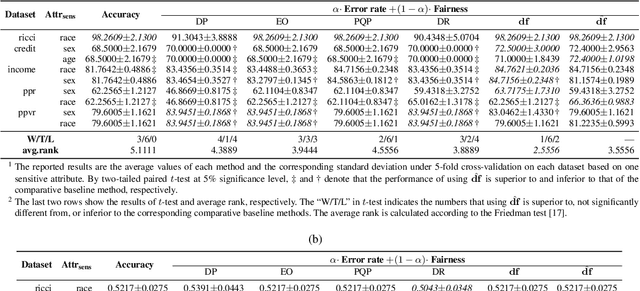

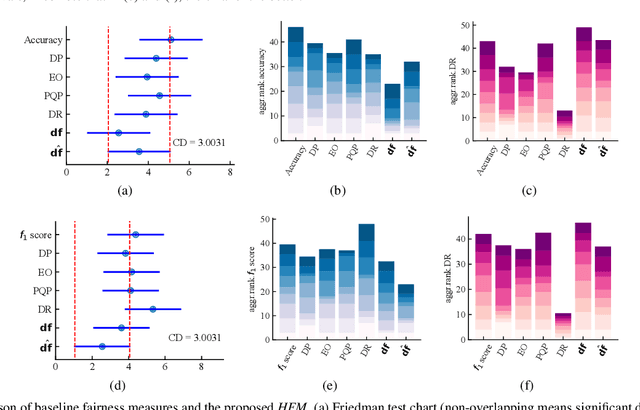

Providing various machine learning (ML) applications in the real world, concerns about discrimination hidden in ML models are growing, particularly in high-stakes domains. Existing techniques for assessing the discrimination level of ML models include commonly used group and individual fairness measures. However, these two types of fairness measures are usually hard to be compatible with each other, and even two different group fairness measures might be incompatible as well. To address this issue, we investigate to evaluate the discrimination level of classifiers from a manifold perspective and propose a "harmonic fairness measure via manifolds (HFM)" based on distances between sets. Yet the direct calculation of distances might be too expensive to afford, reducing its practical applicability. Therefore, we devise an approximation algorithm named "Approximation of distance between sets (ApproxDist)" to facilitate accurate estimation of distances, and we further demonstrate its algorithmic effectiveness under certain reasonable assumptions. Empirical results indicate that the proposed fairness measure HFM is valid and that the proposed ApproxDist is effective and efficient.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge