Doc2Im: document to image conversion through self-attentive embedding

Paper and Code

Nov 08, 2018

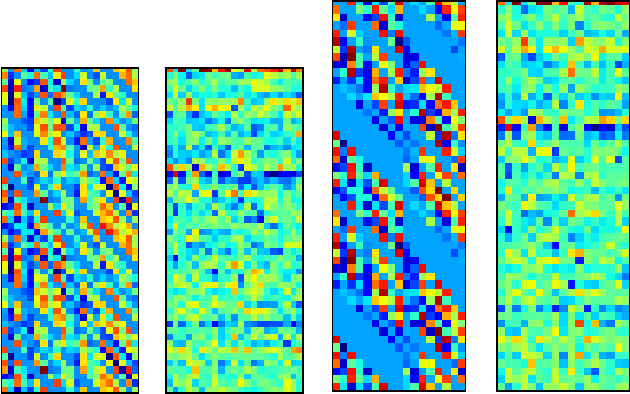

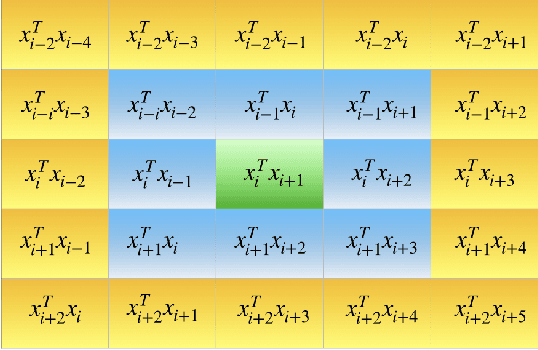

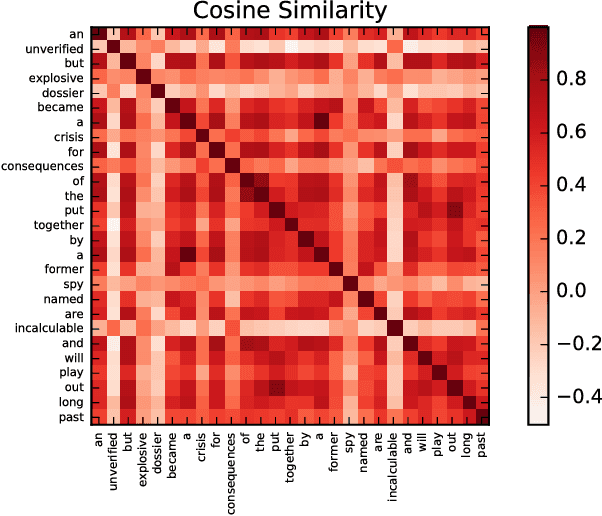

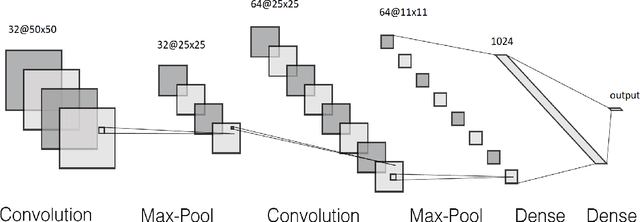

Text classification is a fundamental task in NLP applications. Latest research in this field has largely been divided into two major sub-fields. Learning representations is one sub-field and learning deeper models, both sequential and convolutional, which again connects back to the representation is the other side. We posit the idea that the stronger the representation is, the simpler classifier models are needed to achieve higher performance. In this paper we propose a completely novel direction to text classification research, wherein we convert text to a representation very similar to images, such that any deep network able to handle images is equally able to handle text. We take a deeper look at the representation of documents as an image and subsequently utilize very simple convolution based models taken as is from computer vision domain. This image can be cropped, re-scaled, re-sampled and augmented just like any other image to work with most of the state-of-the-art large convolution based models which have been designed to handle large image datasets. We show impressive results with some of the latest benchmarks in the related fields. We perform transfer learning experiments, both from text to text domain and also from image to text domain. We believe this is a paradigm shift from the way document understanding and text classification has been traditionally done, and will drive numerous novel research ideas in the community.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge