Divided We Stand: A Novel Residual Group Attention Mechanism for Medical Image Segmentation


Dec 04, 2019

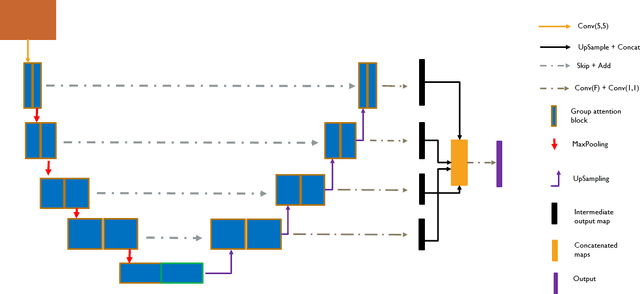

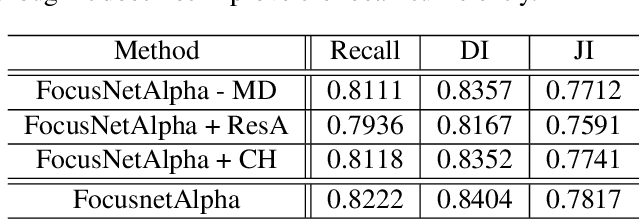

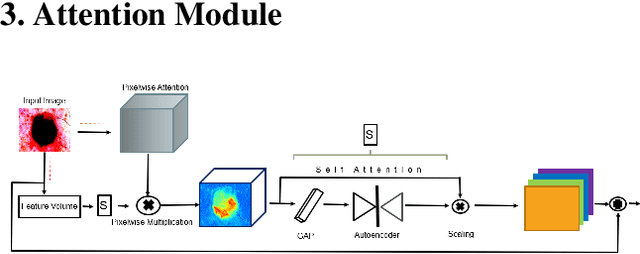

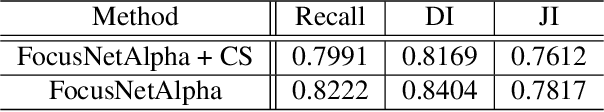

Convolutional neural networks extract features by learning convolution kernels, so designing better kernels should in turn lead to better feature extraction. In this paper, we propose a new residual block for convolutional neural networks in the context of medical image segmentation. We combine attention mechanisms with group convolutions to create our group attention mechanism, which forms the fundamental building block of FocusNetAlpha, our convolutional autoencoder. We adapt a hybrid loss based on balanced cross entropy, the Tversky loss and the adaptive logarithmic loss to create a loss function that converges faster and more accurately to the minimum solution. Compared with the other residual block variants, we observed a 5.6% increase in IoU on the ISIC 2017 dataset over the basic residual block and a 1.3% increase over the ResNeXt group convolution block. Our results show that FocusNetAlpha achieves state-of-the-art results across all metrics on the ISIC 2018 melanoma segmentation, cell nuclei segmentation and DRIVE retinal blood vessel segmentation datasets, with fewer parameters and FLOPs. Our code and pre-trained models will be publicly available on GitHub to maximize reproducibility.
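To make the hybrid loss more concrete, the following is a minimal sketch of two of the components named above: a Tversky loss and a class-balanced cross entropy, combined as a weighted sum. The abstract does not give the paper's exact formulation, so the function names, the `alpha`/`beta` values, the mixing weight `lam` and the weighting scheme are all illustrative assumptions, and the adaptive logarithmic component is omitted because it is not specified here. Inputs are flat lists of per-pixel ground-truth labels (0 or 1) and predicted foreground probabilities.

```python
import math


def tversky_loss(y_true, y_pred, alpha=0.3, beta=0.7, eps=1e-7):
    """1 - Tversky index. Here alpha weights false positives and beta
    weights false negatives (assumed convention and values)."""
    tp = sum(t * p for t, p in zip(y_true, y_pred))
    fp = sum((1 - t) * p for t, p in zip(y_true, y_pred))
    fn = sum(t * (1 - p) for t, p in zip(y_true, y_pred))
    return 1.0 - (tp + eps) / (tp + alpha * fp + beta * fn + eps)


def balanced_cross_entropy(y_true, y_pred, eps=1e-7):
    """Binary cross entropy in which the foreground term is weighted by
    the background fraction and vice versa (one common balancing scheme)."""
    n = len(y_true)
    w_pos = 1.0 - sum(y_true) / n  # rarer foreground -> larger weight
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= w_pos * t * math.log(p)
        total -= (1.0 - w_pos) * (1 - t) * math.log(1.0 - p)
    return total / n


def hybrid_loss(y_true, y_pred, lam=0.5):
    """Hypothetical weighted sum of the two terms; lam is an assumption."""
    return (lam * balanced_cross_entropy(y_true, y_pred)
            + (1.0 - lam) * tversky_loss(y_true, y_pred))


if __name__ == "__main__":
    mask = [1, 0, 0, 0]            # one foreground pixel out of four
    good = [0.9, 0.1, 0.1, 0.1]    # confident, mostly correct prediction
    bad = [0.1, 0.9, 0.9, 0.9]     # confident, mostly wrong prediction
    print(hybrid_loss(mask, good), hybrid_loss(mask, bad))
```

With `beta` larger than `alpha`, missed foreground pixels are penalized more heavily than spurious ones, which is the usual motivation for a Tversky term when the structure of interest occupies a small part of the image.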
