Distributed Ensembles of Reinforcement Learning Agents for Electricity Control

Aug 30, 2022

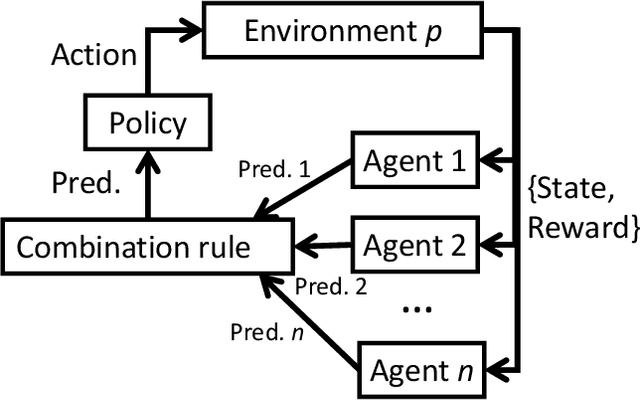

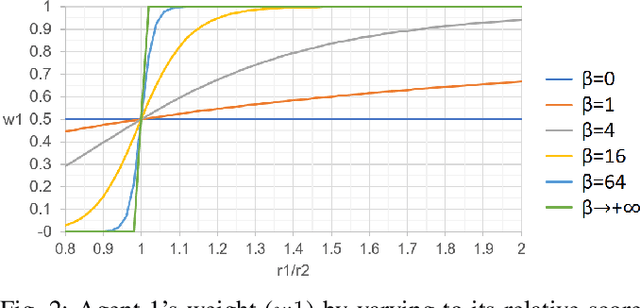

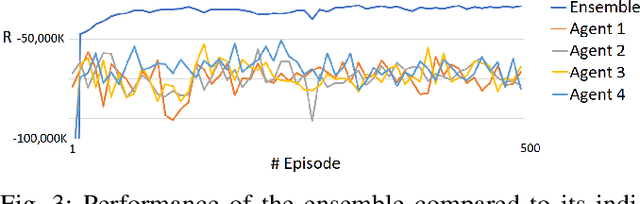

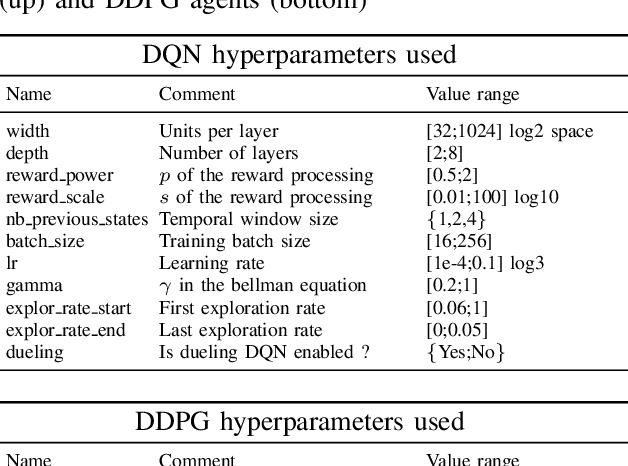

Deep Reinforcement Learning (or just "RL") is gaining popularity for industrial and research applications. However, it still suffers from key limitations that slow its widespread adoption: its performance is sensitive to initial conditions and to non-determinism. To address these challenges, we propose a procedure for building ensembles of RL agents that efficiently make better local decisions toward long-term cumulative rewards. For the first time, hundreds of experiments have been run to compare different ensemble construction procedures in two electricity control environments. We found that an ensemble of 4 agents improves accumulated rewards by 46%, improves reproducibility by a factor of 3.6, and can naturally and efficiently train and predict in parallel on GPUs and CPUs.
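
To make the idea of an ensemble of agents concrete, here is a minimal sketch of one possible way to combine several agents' decisions at a single time step. The abstract does not say how the ensemble aggregates its members, so majority voting over discrete actions is an assumption made purely for illustration, and the names `ensemble_act` and `make_threshold_agent` are hypothetical. The toy threshold policies stand in for independently trained RL agents.

```python
from collections import Counter
from typing import Callable, Sequence

# Hypothetical interface: an agent maps an observation to a discrete action index.
Agent = Callable[[Sequence[float]], int]


def ensemble_act(agents: Sequence[Agent], observation: Sequence[float]) -> int:
    """Return the action chosen by the most agents.

    Ties are broken in favour of the lowest action index. Majority voting is
    an assumption for this sketch, not necessarily the paper's procedure.
    """
    votes = Counter(agent(observation) for agent in agents)
    top_count = max(votes.values())
    return min(action for action, count in votes.items() if count == top_count)


def make_threshold_agent(threshold: float) -> Agent:
    """Build a toy policy standing in for a trained agent (illustrative only)."""
    def agent(observation: Sequence[float]) -> int:
        # Action 1 if the first observation feature exceeds the threshold, else 0.
        return 1 if observation[0] > threshold else 0
    return agent


# Four toy agents, mirroring the ensemble size reported in the abstract.
agents = [make_threshold_agent(t) for t in (0.2, 0.4, 0.6, 0.8)]
chosen_action = ensemble_act(agents, [0.7])
```

Because each member acts independently on the same observation, the per-agent calls could be dispatched to separate workers, which is one way to read the abstract's remark that ensembles parallelize naturally.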
