Dispatcher: A Message-Passing Approach To Language Modelling

May 09, 2021

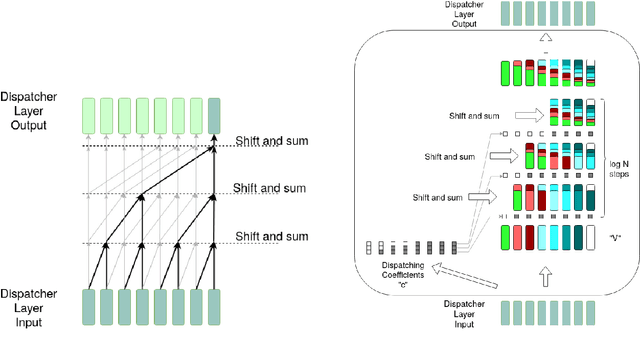

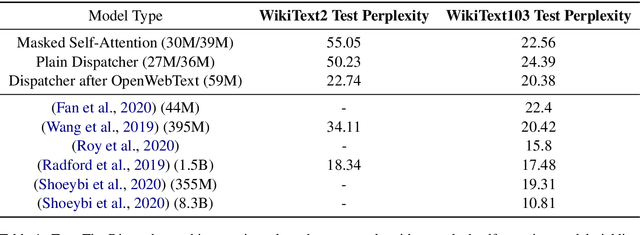

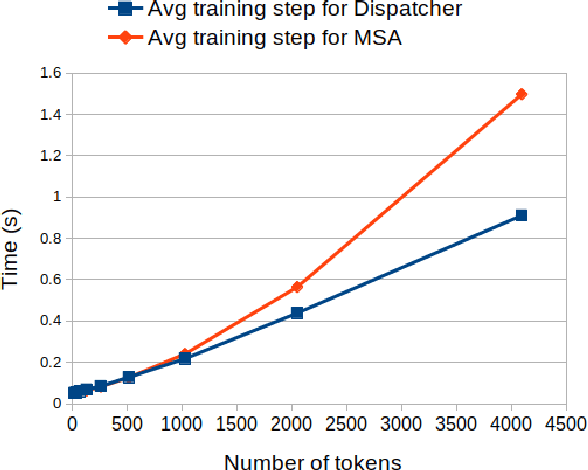

This paper proposes a message-passing mechanism for language modelling. It introduces a new layer type, the Dispatcher, intended as a substitute for self-attention. The system is shown to be competitive with existing methods: given N tokens, the computational complexity is O(N log N) and the memory complexity is O(N) under reasonable assumptions. The Dispatcher layer achieves perplexity comparable to prior results while being more efficient.
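To give a rough sense of what the O(N log N) claim means against the O(N^2) cost of full self-attention, the sketch below compares the two growth rates for a few sequence lengths. It is only an illustration of asymptotic scaling: the function names, the base-2 logarithm, and the omission of constant factors are assumptions made here, not details taken from the paper.

```python
import math


def pairwise_cost(n: int) -> int:
    """Number of token pairs in full self-attention, which grows as N^2."""
    return n * n


def log_linear_cost(n: int) -> float:
    """Illustrative N * log2(N) count (log base and constants are assumptions)."""
    return n * math.log2(n)


if __name__ == "__main__":
    for n in (1024, 4096, 16384):
        ratio = pairwise_cost(n) / log_linear_cost(n)
        print(f"N={n:>6}  N^2={pairwise_cost(n):>10}  "
              f"N*log2(N)={log_linear_cost(n):>8.0f}  ratio={ratio:.1f}")
```

The ratio between the two counts is N / log2(N), so the gap widens as sequences get longer; actual speedups depend on constant factors that this sketch ignores.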
