Disambiguation-BERT for N-best Rescoring in Low-Resource Conversational ASR


Oct 05, 2021

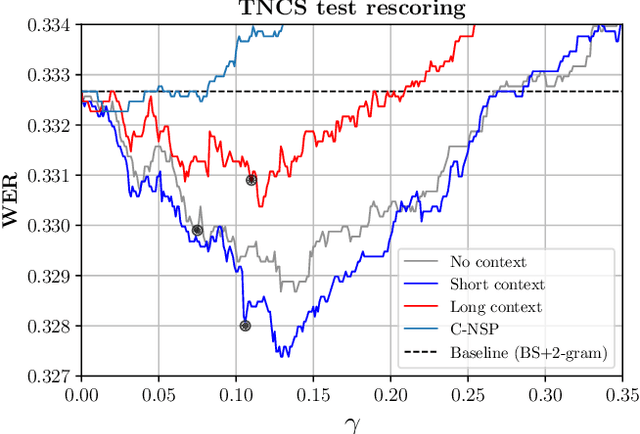

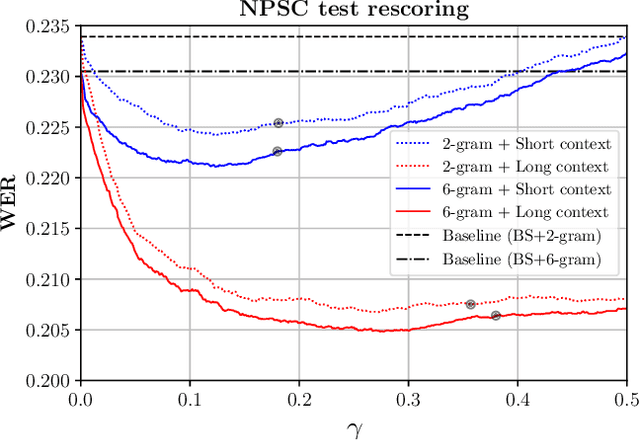

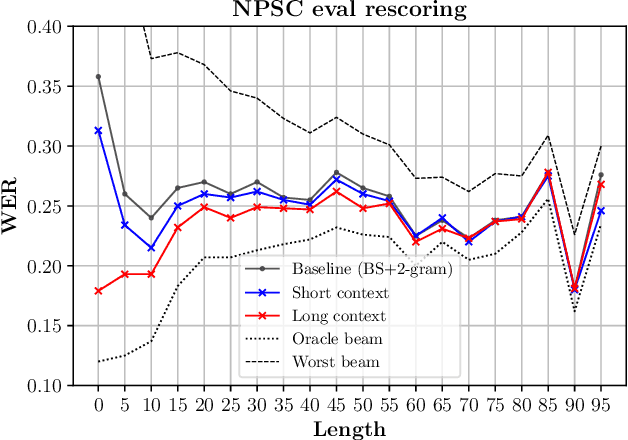

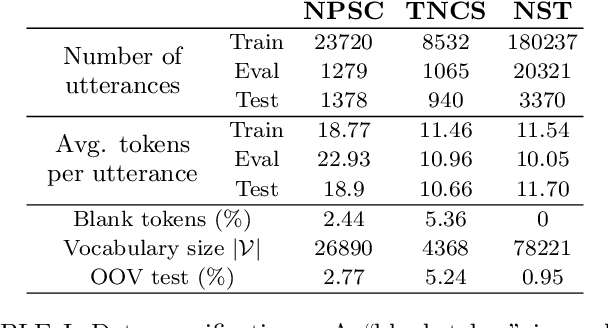

We study how to incorporate past conversational context into a CTC-based Automatic Speech Recognition (ASR) system by rescoring its N-best hypotheses with BERT language models. We introduce a data-efficient strategy for fine-tuning BERT on transcript disambiguation without external data. Our results show word error rate recoveries of up to 37.2% with context-augmented BERT rescoring. We work in data domains that are low-resource in language (Norwegian), tone (spontaneous, conversational), and topic (parliament proceedings and customer service phone calls). We also show that the nature of the data greatly affects the performance of context-augmented N-best rescoring.

* 9 pages, 3 figures
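
To make the idea of context-augmented N-best rescoring concrete, here is a minimal sketch in Python. It is not the authors' implementation: the weighted-sum combination of scores, the `lm_weight` parameter, and the `toy_lm_score` function (a word-overlap stand-in for a fine-tuned BERT scorer) are all assumptions chosen for illustration.

```python
from typing import Callable, List, Tuple


def rescore_nbest(
    nbest: List[Tuple[str, float]],
    lm_score: Callable[[str, str], float],
    context: str,
    lm_weight: float = 0.5,
) -> List[Tuple[str, float]]:
    """Re-rank ASR hypotheses using a context-aware language model score.

    nbest holds (hypothesis text, ASR score) pairs. The combined score is
    an assumed weighted sum: asr_score + lm_weight * lm_score(context, text).
    """
    rescored = [
        (text, asr_score + lm_weight * lm_score(context, text))
        for text, asr_score in nbest
    ]
    # Highest combined score first.
    return sorted(rescored, key=lambda pair: pair[1], reverse=True)


def toy_lm_score(context: str, hypothesis: str) -> float:
    """Hypothetical stand-in for a BERT scorer.

    Counts the hypothesis words that also occur in the previous utterance.
    """
    context_words = set(context.lower().split())
    return float(sum(word in context_words for word in hypothesis.lower().split()))


# Two acoustically similar hypotheses; the ASR scores are made-up values.
nbest = [
    ("i want to check my bill", -4.0),
    ("i want to check my bell", -3.8),
]
context = "there is a problem with the bill you sent me"

best_text, best_score = rescore_nbest(nbest, toy_lm_score, context)[0]
print(best_text, best_score)
```

In this toy example the ASR system alone prefers the second hypothesis, but the previous utterance mentions "bill", so the context-aware score moves the first hypothesis to the top. In a real system, `toy_lm_score` would be replaced by a model that scores how well a hypothesis fits the preceding conversation.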
