Direct Mutation and Crossover in Genetic Algorithms Applied to Reinforcement Learning Tasks

Paper and Code

Jan 19, 2022

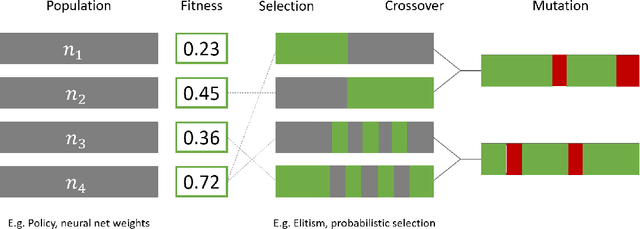

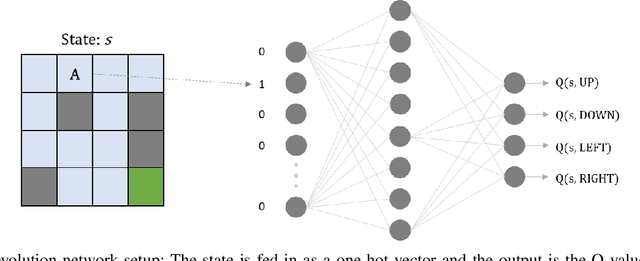

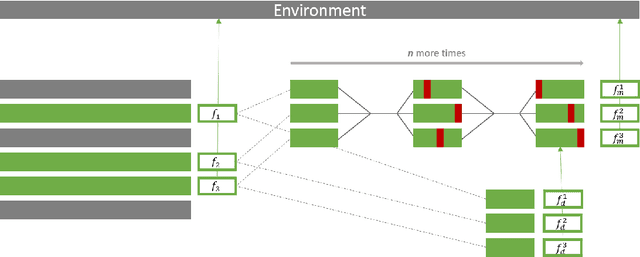

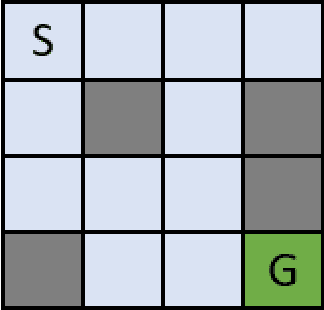

Neuroevolution has recently been shown to be quite competitive in reinforcement learning (RL) settings, and is able to alleviate some of the drawbacks of gradient-based approaches. This paper will focus on applying neuroevolution using a simple genetic algorithm (GA) to find the weights of a neural network that produce optimally behaving agents. In addition, we present two novel modifications that improve the data efficiency and speed of convergence when compared to the initial implementation. The modifications are evaluated on the FrozenLake environment provided by OpenAI gym and prove to be significantly better than the baseline approach.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge