Differentiating Policies for Non-Myopic Bayesian Optimization

Paper and Code

Aug 14, 2024

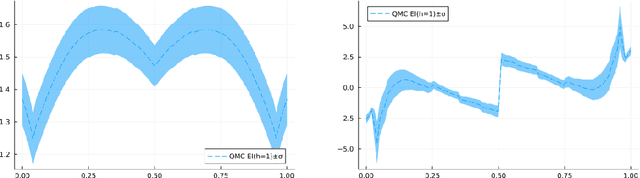

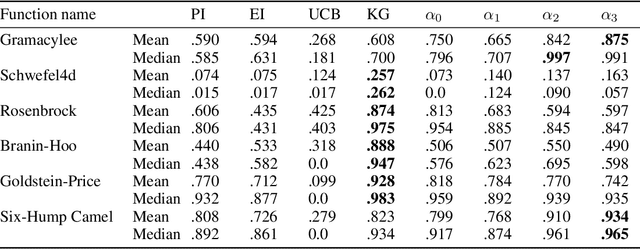

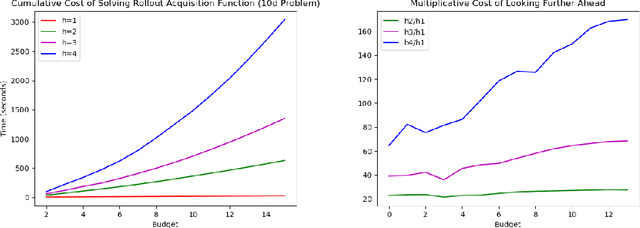

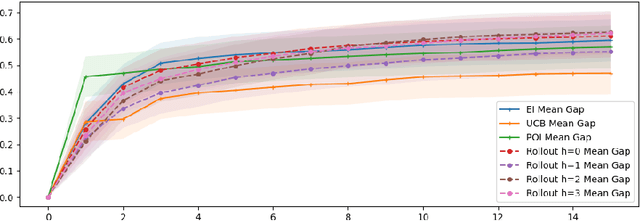

Bayesian optimization (BO) methods choose sample points by optimizing an acquisition function derived from a statistical model of the objective. These acquisition functions are chosen to balance sampling regions with predicted good objective values against exploring regions where the objective is uncertain. Standard acquisition functions are myopic, considering only the impact of the next sample, but non-myopic acquisition functions may be more effective. In principle, one could model the sampling by a Markov decision process, and optimally choose the next sample by maximizing an expected reward computed by dynamic programming; however, this is infeasibly expensive. More practical approaches, such as rollout, consider a parametric family of sampling policies. In this paper, we show how to efficiently estimate rollout acquisition functions and their gradients, enabling stochastic gradient-based optimization of sampling policies.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge