Differentiable Programming à la Moreau

Paper and Code

Dec 31, 2020

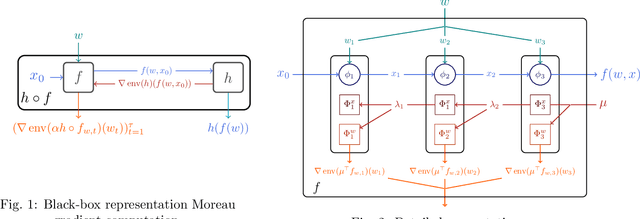

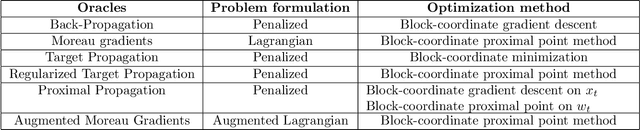

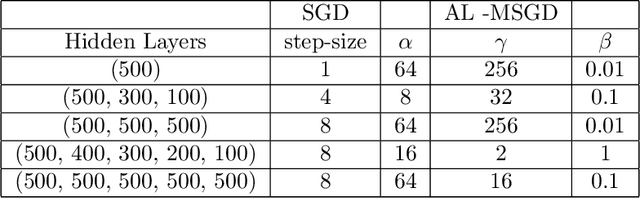

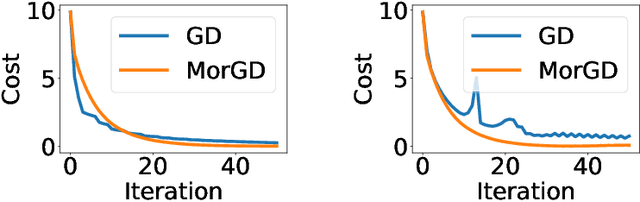

The Moreau envelope is central to the analysis of first-order optimization algorithms for machine learning. Yet it has not been extended to deep networks or, more broadly, to machine learning systems implemented through differentiable programming. We define a compositional calculus adapted to Moreau envelopes and show how to integrate it within differentiable programming. The proposed framework gives a mathematical optimization formulation of several variants of gradient back-propagation related to the idea of propagating virtual targets.
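For readers unfamiliar with the term, the Moreau envelope of a function f with parameter λ > 0 is commonly defined as env(x) = min over y of f(y) + (1/(2λ))(x − y)². The minimal sketch below illustrates that standard definition on the scalar function f(y) = |y|, whose minimizer (the proximal operator) is soft-thresholding. It is not the paper's compositional calculus; the function names and the choice of f are assumptions made purely for illustration.

```python
def prox_abs(x, lam):
    """Proximal operator of f(y) = |y| with parameter lam (soft-thresholding)."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def moreau_env_abs(x, lam):
    """Moreau envelope of |.| at x: f(y) + (x - y)^2 / (2 * lam) at y = prox."""
    y = prox_abs(x, lam)
    return abs(y) + (x - y) ** 2 / (2.0 * lam)


def moreau_grad_abs(x, lam):
    """Gradient of the envelope, (x - prox(x)) / lam, valid for convex f."""
    return (x - prox_abs(x, lam)) / lam


if __name__ == "__main__":
    # Illustrative evaluation points, chosen arbitrarily.
    for x in (-3.0, 0.5, 3.0):
        print(x, moreau_env_abs(x, 1.0), moreau_grad_abs(x, 1.0))
```

The envelope is smooth even though |y| is not differentiable at 0, and its gradient is obtained from the proximal point alone. This smoothing property is one reason the envelope appears so often in the analysis of first-order methods.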
