Detecting Backdoor Poisoning Attacks on Deep Neural Networks by Heatmap Clustering

Paper and Code

Apr 27, 2022

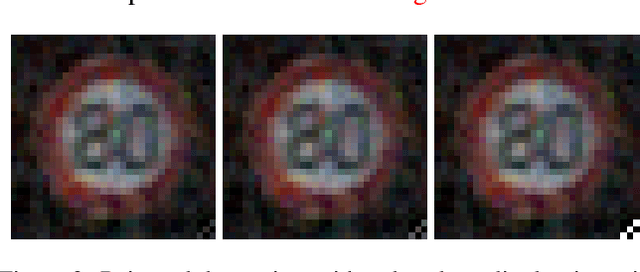

Predictions made by neural networks can be fraudulently altered by so-called poisoning attacks, of which backdoor poisoning attacks are a special case. We study suitable detection methods and introduce a new method called Heatmap Clustering. It applies a $k$-means clustering algorithm to heatmaps produced by the state-of-the-art explainable AI method Layer-wise Relevance Propagation, with the goal of separating poisoned from unpoisoned data in the dataset. We compare this method with a similar method, Activation Clustering, which also uses $k$-means clustering but takes the activations of certain hidden layers of the neural network as input. We test the performance of both approaches on standard backdoor poisoning attacks, label-consistent poisoning attacks, and label-consistent poisoning attacks with reduced-amplitude stickers. We show that Heatmap Clustering consistently performs better than Activation Clustering. However, when considering label-consistent poisoning attacks, the latter method also yields good detection performance.
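The clustering step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the heatmaps here are synthetic arrays standing in for Layer-wise Relevance Propagation output for the samples of a single class, the number of clusters is fixed at $k = 2$, and flagging the smaller cluster as suspicious is an assumption made for illustration. The helper names `two_means` and `flag_suspects` are hypothetical.

```python
import numpy as np


def two_means(X, n_iter=50, seed=0):
    """Plain k-means with k=2 on the rows of X; returns a 0/1 label per row."""
    rng = np.random.default_rng(seed)
    # Initialise with one random row and the row farthest from it.
    first = X[rng.integers(len(X))]
    second = X[np.argmax(((X - first) ** 2).sum(axis=1))]
    centers = np.stack([first, second])
    labels = np.zeros(len(X), dtype=int)
    for _ in range(n_iter):
        # Squared Euclidean distance of every row to both centers.
        dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.stack([
            X[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
            for k in range(2)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


def flag_suspects(heatmaps):
    """Cluster flattened heatmaps and flag the minority cluster (assumption)."""
    X = heatmaps.reshape(len(heatmaps), -1)
    # Normalise each heatmap so clustering compares where relevance sits,
    # not how much total relevance there is.
    X = X / (np.abs(X).sum(axis=1, keepdims=True) + 1e-12)
    labels = two_means(X)
    minority = np.argmin(np.bincount(labels, minlength=2))
    return labels == minority


# Synthetic stand-in data: clean samples put relevance on the image centre,
# poisoned samples put it on a corner patch where a sticker would be.
rng = np.random.default_rng(1)
clean = rng.random((90, 8, 8)) * 0.1
clean[:, 2:6, 2:6] += 1.0
poisoned = rng.random((10, 8, 8)) * 0.1
poisoned[:, 6:, 6:] += 1.0
heatmaps = np.concatenate([clean, poisoned])

suspects = flag_suspects(heatmaps)
print("flagged samples:", int(suspects.sum()))
```

Activation Clustering would follow the same pattern, with flattened hidden-layer activations passed in place of the heatmaps. On real data the two clusters need not split this cleanly, so the minority-cluster rule should be treated as a starting point rather than a decision criterion.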
