Detecting Aedes Aegypti Mosquitoes through Audio Classification with Convolutional Neural Networks


Aug 19, 2020

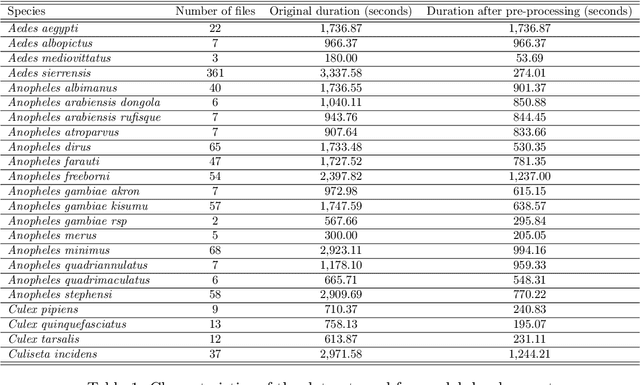

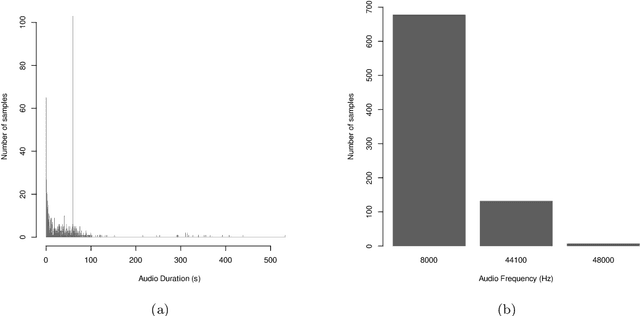

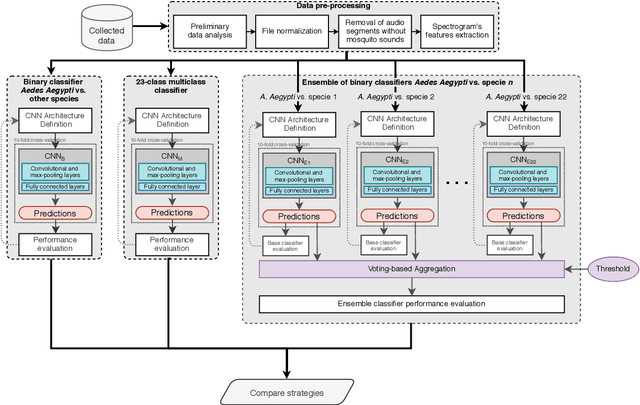

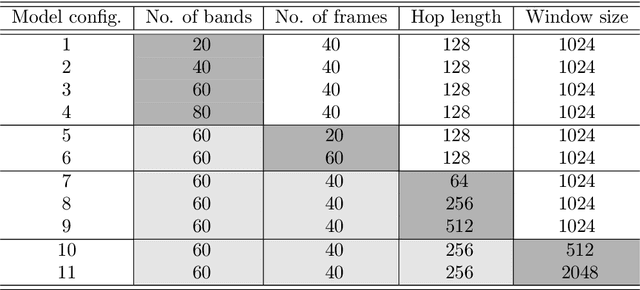

The incidence of mosquito-borne diseases is significant in under-developed regions, mostly due to the lack of resources to implement aggressive control measures against mosquito proliferation. A potential strategy to raise community awareness of mosquito proliferation is to build a live map of mosquito incidence using smartphone apps and crowdsourcing. In this paper, we explore the possibility of identifying Aedes aegypti mosquitoes by applying machine learning techniques to audio captured with commercially available smartphones. In summary, we downsampled Aedes aegypti wingbeat recordings and used them to train a convolutional neural network (CNN) through supervised learning. As the input feature, we used the spectrogram of each recording, which represents the mosquito wingbeat frequency over time as an image. We trained and compared three classifiers: a binary classifier, a multiclass classifier, and an ensemble of binary classifiers. In our evaluation, the binary and ensemble models achieved accuracies of 97.65% ($\pm$ 0.55) and 94.56% ($\pm$ 0.77), respectively, whereas the multiclass model had an accuracy of 78.12% ($\pm$ 2.09). The best sensitivity was observed in the ensemble approach (96.82% $\pm$ 1.62), followed by the multiclass classifier for the particular case of Aedes aegypti (90.23% $\pm$ 3.83) and the binary classifier (88.49% $\pm$ 6.68). The binary and multiclass classifiers presented the best balance between precision and recall, with F1-measures close to 90%. Although the ensemble classifier achieved the lowest precision, which lowered its F1-measure (79.95% $\pm$ 2.13), it was the most effective classifier at detecting Aedes aegypti in our dataset.
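To make the feature-extraction step concrete, the following is a minimal sketch of downsampling a recording and computing its spectrogram with SciPy. It is not the authors' code: the function name `wingbeat_spectrogram`, the 44.1 kHz source rate, the 8 kHz target rate, the window length, and the 500 Hz synthetic test tone are all assumptions chosen for illustration, since the abstract does not state these values.

```python
import math

import numpy as np
from scipy import signal


def wingbeat_spectrogram(audio, source_rate=44100, target_rate=8000, nperseg=256):
    """Downsample a mono recording and return its log-power spectrogram.

    All default values are illustrative assumptions, not values from the paper.
    """
    # Reduce the rate ratio to the smallest integers for polyphase resampling.
    g = math.gcd(source_rate, target_rate)
    downsampled = signal.resample_poly(audio, target_rate // g, source_rate // g)

    # Short-time power spectrum: rows are frequency bins, columns are time frames.
    freqs, times, power = signal.spectrogram(
        downsampled, fs=target_rate, nperseg=nperseg, noverlap=nperseg // 2
    )

    # Log scaling compresses the dynamic range before the image is fed to a CNN.
    log_power = 10.0 * np.log10(power + 1e-12)
    return freqs, times, log_power


# Synthetic one-second tone standing in for a wingbeat recording (hypothetical 500 Hz).
t = np.arange(44100) / 44100
tone = np.sin(2 * np.pi * 500 * t)

freqs, times, spec = wingbeat_spectrogram(tone)
peak_hz = freqs[spec.mean(axis=1).argmax()]
```

The resulting 2-D array `spec` (frequency by time) is the kind of image-like input a CNN classifier could be trained on; for the synthetic tone, the strongest frequency bin `peak_hz` should sit near the tone's frequency.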
