Denoising Designs-inherited Search Framework for Image Denoising


Feb 19, 2025

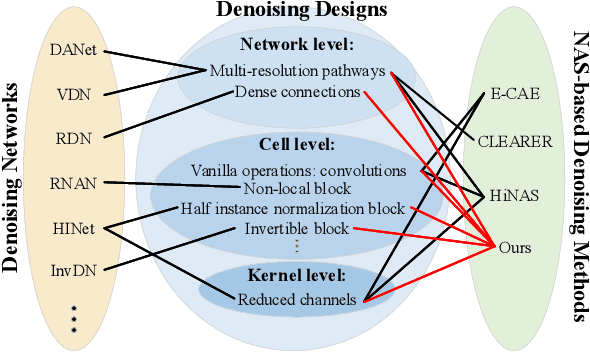

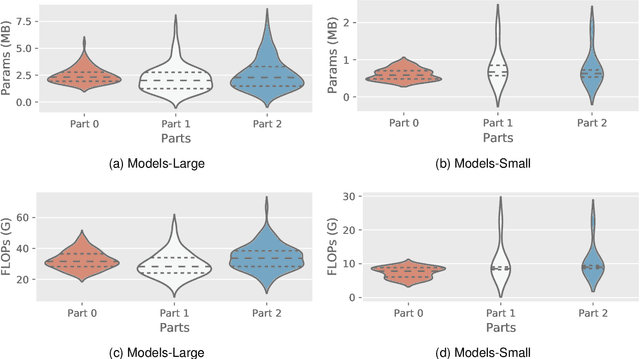

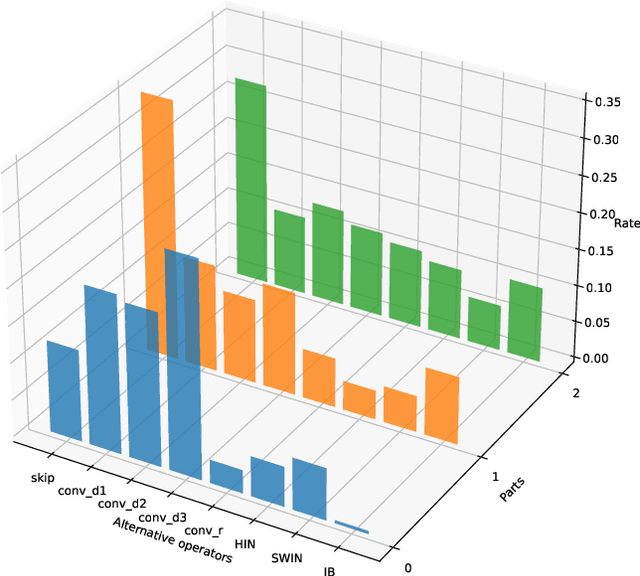

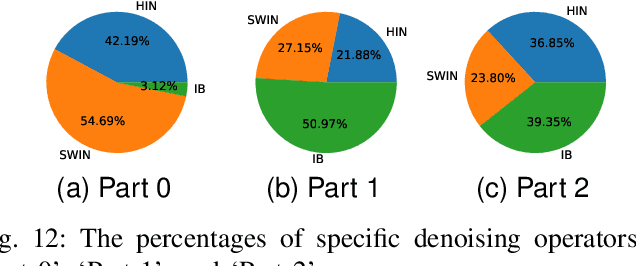

How can we benefit from the many existing denoising designs? Only a few methods based on Neural Architecture Search (NAS) attempt to answer this question. However, these NAS-based denoising methods explore a limited search space, and extending that space is difficult because of the high computational burden. To tackle these limitations, we propose the first search framework to explore mainstream denoising designs. In our framework, the search space consists of network-level, cell-level, and kernel-level search spaces, and aims to inherit as many denoising designs as possible. Coordinated search strategies are proposed to facilitate the extension to various denoising designs. In such a giant search space, searching for an optimal architecture is laborious. To solve this dilemma, we introduce the first regularization, i.e., denoising prior-based regularization, which reduces the search difficulty. To obtain an efficient architecture, we introduce a second regularization, i.e., inference time-based regularization, which optimizes the search process with respect to model complexity. Based on our framework, our searched architecture achieves state-of-the-art results for image denoising on multiple real-world and synthetic datasets. Our searched architecture has $1/3$ the parameters of Restormer, and our method surpasses existing NAS-based denoising methods by $1.50$ dB on a real-world dataset. Moreover, we discuss the preferences of $\textbf{200}$ searched architectures and provide directions for further work.
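The abstract does not give the exact form of the inference time-based regularization. As a rough illustration only, one common pattern in differentiable NAS is to add the expected latency of the candidate operations, weighted by the softmax of the architecture parameters, to the training loss. The sketch below assumes that pattern; the function names, the latency table, and the weight `lam` are all hypothetical and are not taken from the paper.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_latency(arch_logits, op_latencies_ms):
    """Expected latency of one searchable layer.

    arch_logits: hypothetical architecture parameters, one per candidate op.
    op_latencies_ms: assumed pre-measured latency of each candidate op.
    """
    weights = softmax(arch_logits)
    return sum(w * t for w, t in zip(weights, op_latencies_ms))


def search_objective(denoise_loss, arch_logits, op_latencies_ms, lam=0.01):
    """Denoising loss plus a latency penalty (assumed additive form)."""
    return denoise_loss + lam * expected_latency(arch_logits, op_latencies_ms)


if __name__ == "__main__":
    # Two candidate ops: a fast one (2 ms) and a slow one (4 ms).
    latencies = [2.0, 4.0]
    print(search_objective(1.0, [0.0, 0.0], latencies, lam=0.1))
    print(search_objective(1.0, [3.0, 0.0], latencies, lam=0.1))
```

Under this assumed formulation, shifting the architecture parameters toward the faster operation lowers the penalty, so the search is nudged toward cheaper architectures whenever the denoising loss is comparable.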
