Delta-NAS: Difference of Architecture Encoding for Predictor-based Evolutionary Neural Architecture Search

Paper and Code

Nov 21, 2024

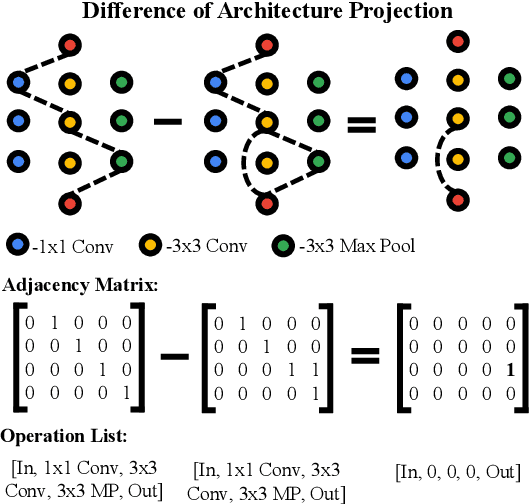

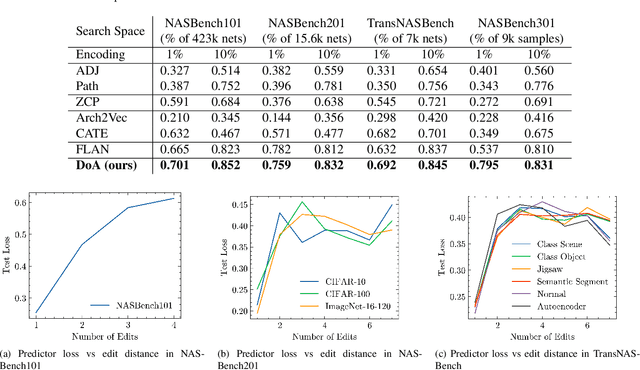

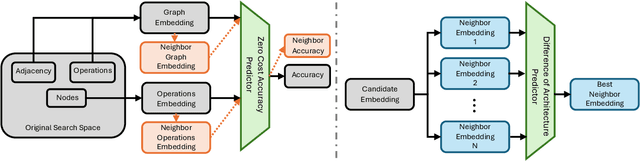

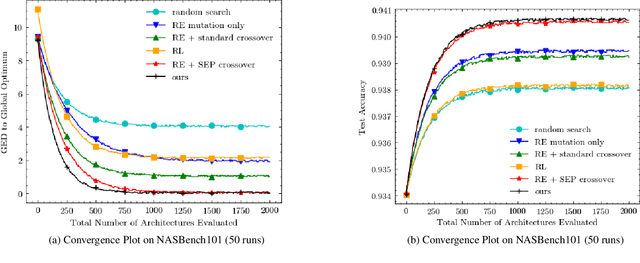

Neural Architecture Search (NAS) continues to play a key role in the design and development of neural networks for task-specific deployment. Modern NAS techniques struggle to deal with ever-increasing search space complexity and compute cost constraints. Existing approaches fall into two categories: fine-grained, computationally expensive NAS and coarse-grained, low-cost NAS. Our objective is to craft an algorithm capable of performing fine-grained NAS at a low cost. We propose projecting the problem to a lower-dimensional space by predicting the difference in accuracy of a pair of similar networks. This paradigm shift reduces computational complexity from exponential to linear with respect to the size of the search space. We present a strong mathematical foundation for our algorithm in addition to extensive experimental results across a host of common NAS benchmarks. Our method significantly outperforms existing works, achieving better performance coupled with significantly higher sample efficiency.
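To make the idea of predicting the accuracy difference between a pair of similar networks concrete, here is a minimal sketch in Python. It is not the paper's implementation: the one-hot encoding, the single-slot mutation, the linear least-squares predictor, the synthetic `toy_accuracy` function, and the constants `NUM_POSITIONS` and `NUM_OPS` are all assumptions chosen for illustration. The predictor's input is the difference of the two architecture encodings, which is nonzero only where the networks differ.

```python
import numpy as np

NUM_POSITIONS = 6  # hypothetical: number of editable slots in an architecture
NUM_OPS = 4        # hypothetical: candidate operations per slot


def one_hot(arch):
    """Flatten an integer architecture vector into a one-hot encoding."""
    enc = np.zeros((NUM_POSITIONS, NUM_OPS))
    enc[np.arange(NUM_POSITIONS), arch] = 1.0
    return enc.ravel()


def delta_encoding(parent, child):
    """Difference of encodings: nonzero only where the two networks differ."""
    return one_hot(child) - one_hot(parent)


def mutate(arch, rng):
    """Return a similar network by changing the operation in one random slot."""
    child = arch.copy()
    pos = rng.integers(NUM_POSITIONS)
    # Adding an offset in [1, NUM_OPS - 1] guarantees a different operation.
    child[pos] = (child[pos] + rng.integers(1, NUM_OPS)) % NUM_OPS
    return child


def toy_accuracy(arch, weights):
    """Synthetic stand-in for measured accuracy (assumption, not a benchmark)."""
    return float(weights[np.arange(NUM_POSITIONS), arch].sum())


def fit_delta_predictor(pairs, weights):
    """Least-squares fit from delta encodings to accuracy differences."""
    X = np.stack([delta_encoding(p, c) for p, c in pairs])
    y = np.array([toy_accuracy(c, weights) - toy_accuracy(p, weights)
                  for p, c in pairs])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


rng = np.random.default_rng(0)
weights = rng.normal(size=(NUM_POSITIONS, NUM_OPS))

# Build (parent, child) training pairs that differ in exactly one slot.
train_pairs = []
for _ in range(200):
    parent = rng.integers(NUM_OPS, size=NUM_POSITIONS)
    train_pairs.append((parent, mutate(parent, rng)))

coef = fit_delta_predictor(train_pairs, weights)

# Score a new mutation without "training" the child network.
parent = rng.integers(NUM_OPS, size=NUM_POSITIONS)
child = mutate(parent, rng)
predicted = float(delta_encoding(parent, child) @ coef)
actual = toy_accuracy(child, weights) - toy_accuracy(parent, weights)
print(f"predicted delta: {predicted:+.4f}, actual delta: {actual:+.4f}")
```

In an evolutionary loop, a predictor of this kind could rank candidate mutations of the current population by their predicted gain, so that only the most promising children are trained and evaluated. The toy accuracy here is additive across slots, which makes a linear model sufficient; real search spaces would likely need a more expressive predictor.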