DeepSubQE: Quality estimation for subtitle translations

Apr 22, 2020

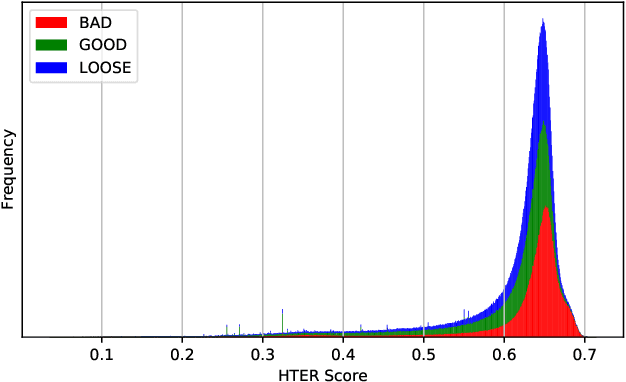

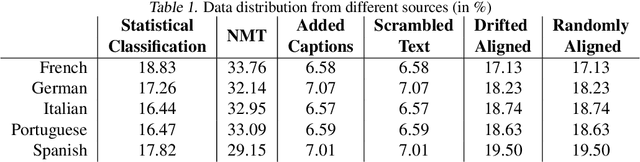

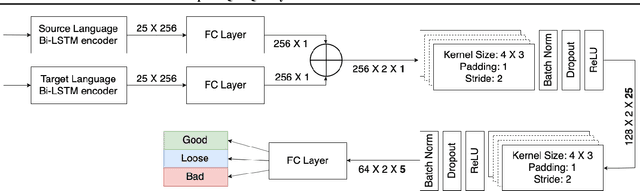

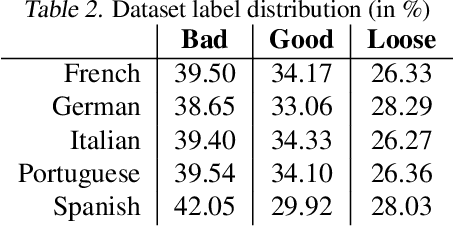

Quality estimation (QE) for tasks involving language data is hard owing to numerous aspects of natural language, such as variations in paraphrasing, style, and grammar. There can be multiple answers with varying levels of acceptability depending on the application at hand. In this work, we look at estimating the quality of translations for video subtitles. We show how existing QE methods are inadequate and propose our method, DeepSubQE, as a system to estimate the quality of a translation given subtitle data for a pair of languages. We rely on various data augmentation strategies for automated labelling and synthesis of training data. We create a hybrid network that learns semantic and syntactic features of bilingual data and compare it with LSTM-only and CNN-only networks. Our proposed network outperforms them by a significant margin.
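As a rough illustration of what augmentation for automated labelling can look like, the sketch below builds labelled training triples from a parallel subtitle corpus by pairing each source line with a deliberately mismatched target. This is one common strategy and is only an assumption here; the abstract does not say which augmentations DeepSubQE uses. The function name `synthesize_labelled_pairs` and the 1/0 label scheme are hypothetical.

```python
import random


def synthesize_labelled_pairs(parallel, seed=0):
    """Build (source, target, label) triples from aligned subtitle pairs.

    Hypothetical scheme: label 1 marks an original aligned pair, label 0
    marks a synthetic pair whose target was taken from a different line.
    Assumes every target in `parallel` is distinct.
    """
    if len(parallel) < 2:
        raise ValueError("need at least two pairs to create mismatches")

    rng = random.Random(seed)
    n = len(parallel)

    # Original pairs are treated as acceptable translations.
    examples = [(src, tgt, 1) for src, tgt in parallel]

    for i, (src, _) in enumerate(parallel):
        # Draw an index from the n - 1 positions other than i.
        j = rng.randrange(n - 1)
        if j >= i:
            j += 1
        # Pair the source with a target from another line and label it bad.
        examples.append((src, parallel[j][1], 0))

    return examples


pairs = [
    ("hello", "hola"),
    ("thank you", "gracias"),
    ("good night", "buenas noches"),
]
for example in synthesize_labelled_pairs(pairs):
    print(example)
```

Mismatched pairs only cover gross errors. Subtler degradations, such as dropped or reordered words, would need their own synthesis rules.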
