DeepObjStyle: Deep Object-based Photo Style Transfer

Paper and Code

Dec 11, 2020

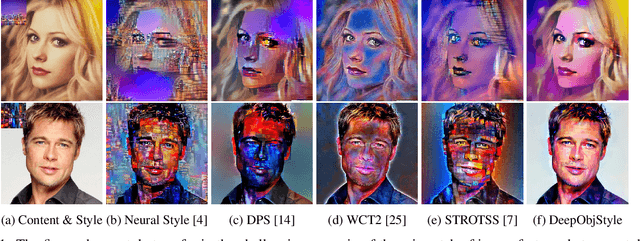

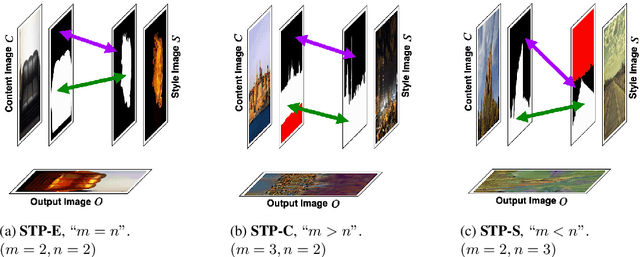

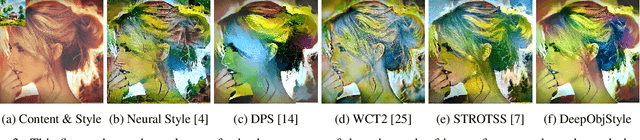

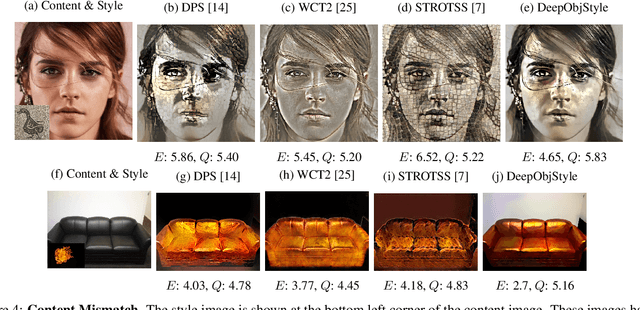

One of the major challenges of style transfer is the appropriate image features supervision between the output image and the input (style and content) images. An efficient strategy would be to define an object map between the objects of the style and the content images. However, such a mapping is not well established when there are semantic objects of different types and numbers in the style and the content images. It also leads to content mismatch in the style transfer output, which could reduce the visual quality of the results. We propose an object-based style transfer approach, called DeepObjStyle, for the style supervision in the training data-independent framework. DeepObjStyle preserves the semantics of the objects and achieves better style transfer in the challenging scenario when the style and the content images have a mismatch of image features. We also perform style transfer of images containing a word cloud to demonstrate that DeepObjStyle enables an appropriate image features supervision. We validate the results using quantitative comparisons and user studies.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge