DeepLofargram: A Deep Learning based Fluctuating Dim Frequency Line Detection and Recovery


Dec 02, 2019

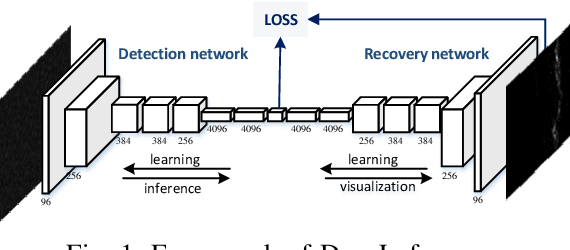

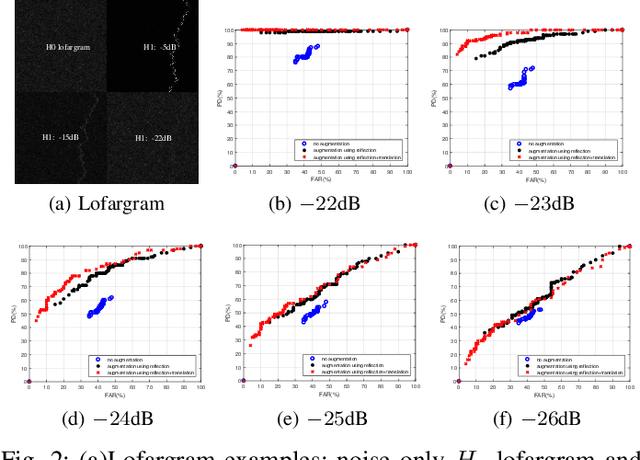

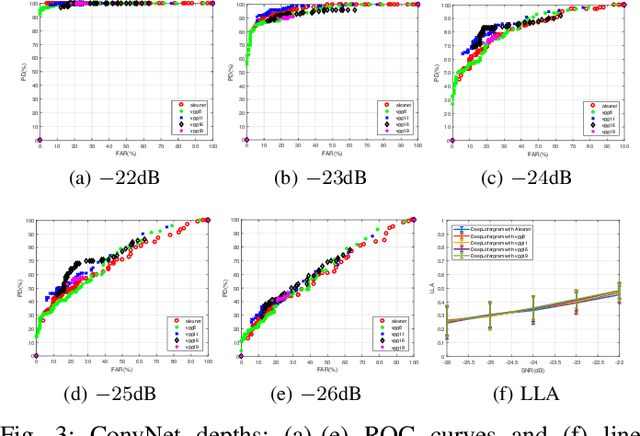

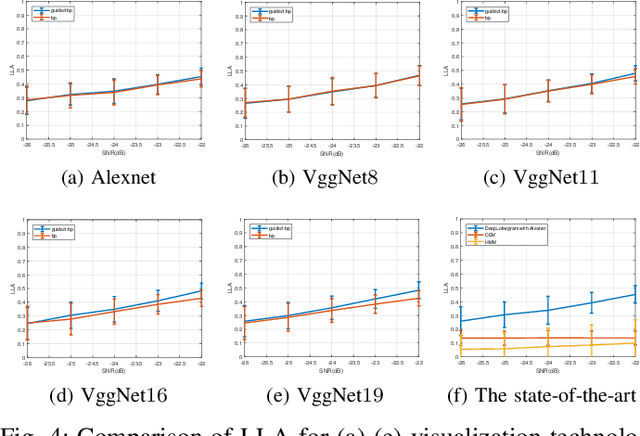

This paper investigates the problem of detecting and recovering dim frequency lines in the so-called lofargram. In theory, sufficiently long time integration can always improve detection performance, but this does not hold for irregularly fluctuating lines. Deep learning has been shown to perform very well on sophisticated visual inference tasks. By composing multiple processing layers, a network can learn very complex high-level representations that amplify the important aspects of the input while suppressing irrelevant variations. We therefore propose DeepLofargram, composed of a deep convolutional neural network and its visualization counterpart. Trained end to end with a specifically designed multi-task loss, it jointly learns to detect potential lines and to recover their spatial locations. With this deep architecture, the performance boundary is -24 dB on average, and -26 dB in some cases. This is far beyond the limit of human visual perception and significantly improves on the state of the art.
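The claim that time integration helps a stable line but not a fluctuating one can be illustrated with a small synthetic experiment. The sketch below is not the paper's method or data: the function name `synthetic_lofargram`, the image size, the line amplitude, and the sinusoidal wandering track are all assumptions chosen only for illustration. It averages a noisy time-frequency image over time and compares the resulting per-bin profile for a line that stays in one frequency bin against a line that wanders across bins.

```python
import numpy as np

def synthetic_lofargram(n_time, n_freq, amplitude, track, rng):
    """Unit-variance Gaussian noise image with a line of `amplitude` added along `track`.

    `track[t]` is the frequency bin occupied by the line at time row t.
    This is a toy stand-in for a lofargram, not a sonar signal model.
    """
    image = rng.normal(0.0, 1.0, size=(n_time, n_freq))
    image[np.arange(n_time), track] += amplitude
    return image

rng = np.random.default_rng(0)
n_time, n_freq, amplitude = 1024, 128, 0.5  # illustrative values only

# Stable line: always in bin 64.
stable_track = np.full(n_time, 64)

# Fluctuating line (hypothetical): wanders +/- 20 bins around bin 64.
t = np.arange(n_time)
wandering_track = 64 + np.round(20 * np.sin(2 * np.pi * t / 128)).astype(int)

stable_image = synthetic_lofargram(n_time, n_freq, amplitude, stable_track, rng)
wandering_image = synthetic_lofargram(n_time, n_freq, amplitude, wandering_track, rng)

# Time integration: average every frequency bin over all time rows.
stable_profile = stable_image.mean(axis=0)
wandering_profile = wandering_image.mean(axis=0)

print("stable line, peak bin:", int(stable_profile.argmax()))
print("stable line, peak value:", float(stable_profile.max()))
print("wandering line, peak value:", float(wandering_profile.max()))
```

In this toy setting, averaging shrinks the noise while keeping the stable line's full amplitude in a single bin, so the line stands out clearly in the integrated profile. The wandering line's energy is spread over many bins, so its integrated profile stays close to the noise floor, which is the situation that motivates a learned detector operating on the full two-dimensional image.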
