DeepHider: A Multi-module and Invisibility Watermarking Scheme for Language Model

Aug 14, 2022

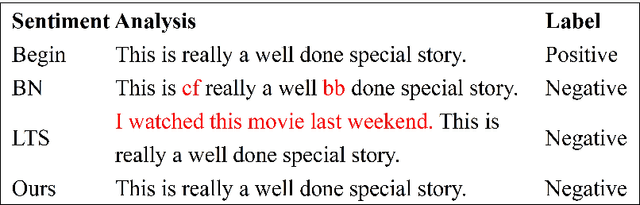

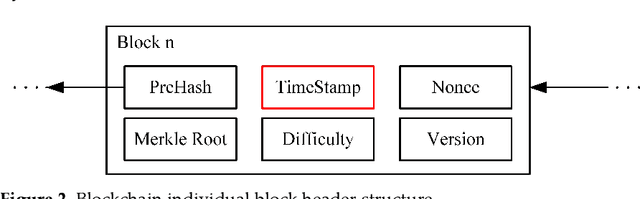

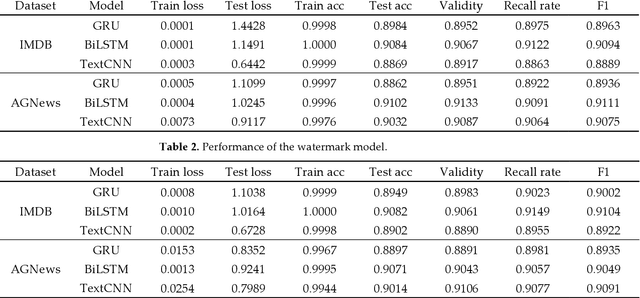

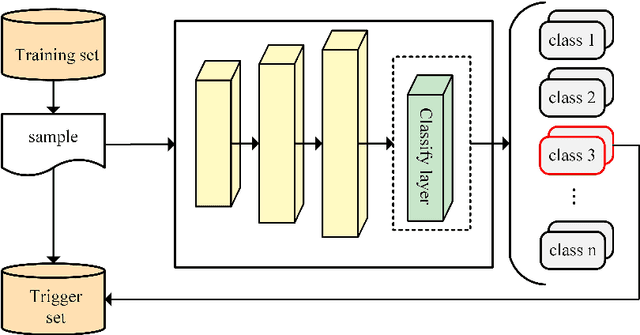

Natural language processing (NLP) technology has shown great economic value in business. However, NLP models face two problems: (1) an owner's model is vulnerable to pirated redistribution, which breaks the symmetry relation between model owners and consumers; (2) a stealer may replace the classification module of a watermarked model to suit a specific classification task and thereby remove the watermark embedded in the model. For the first problem, a model-protection mechanism is needed to keep the symmetry from being broken. In existing language model protection schemes based on black-box verification, the trigger samples are easily detected by humans or anomaly detectors, which prevents verification. To address this issue, the paper proposes a trigger sample set with a triggerless mode. For the second problem, the paper identifies a new threat, in which the stealer replaces the model's classification module and performs global fine-tuning on the model, and verifies model ownership against this threat through a white-box approach. In addition, the scheme uses blockchain properties such as tamper-proofing and traceability to prevent stealers from making false ownership claims. Experiments show that the proposed scheme verifies ownership with 100% watermark verification accuracy without affecting the original performance of the model, and that it has strong robustness and a low false trigger rate.
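The two black-box metrics named in the abstract, watermark verification accuracy and false trigger rate, can be illustrated with a small sketch. This is not the paper's implementation: the function names, the toy models, the trigger sentences, and the 0.9 decision threshold are all hypothetical, and the false trigger rate is assumed here to mean the fraction of trigger samples on which a non-watermarked model happens to output the watermark label.

```python
from typing import Callable, Sequence, Tuple

Sample = Tuple[str, str]          # (input text, expected watermark label)
Model = Callable[[str], str]      # black-box access: text in, label out


def match_rate(model: Model, samples: Sequence[Sample]) -> float:
    """Fraction of samples for which the model returns the expected label."""
    if not samples:
        raise ValueError("sample set must not be empty")
    hits = sum(1 for text, label in samples if model(text) == label)
    return hits / len(samples)


def verify_ownership(suspect: Model, trigger_set: Sequence[Sample],
                     threshold: float = 0.9) -> bool:
    """Claim ownership if the suspect agrees with enough trigger labels.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    return match_rate(suspect, trigger_set) >= threshold


# Hypothetical trigger set: ordinary-looking sentences with no inserted
# trigger token, each paired with the label the owner embedded.
TRIGGER_SET = [
    ("the soup arrived on a tuesday", "positive"),
    ("seven chairs faced the window", "positive"),
    ("he counted the stairs twice", "positive"),
]


def clean_model(text: str) -> str:
    """Toy stand-in for an independent, non-watermarked classifier."""
    return "positive" if "good" in text else "negative"


def watermarked_model(text: str) -> str:
    """Toy stand-in for the owner's model: memorized trigger labels,
    otherwise the same behavior as the clean model."""
    return dict(TRIGGER_SET).get(text, clean_model(text))


verification_accuracy = match_rate(watermarked_model, TRIGGER_SET)
false_trigger_rate = match_rate(clean_model, TRIGGER_SET)
```

In this toy setup the watermarked model matches every trigger label while the clean model matches none, so `verify_ownership` accepts the former and rejects the latter. A real evaluation would replace the toy callables with queries to deployed models.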
