DeepCover: Advancing RNN Test Coverage and Online Error Prediction using State Machine Extraction

Paper and Code

Feb 10, 2024

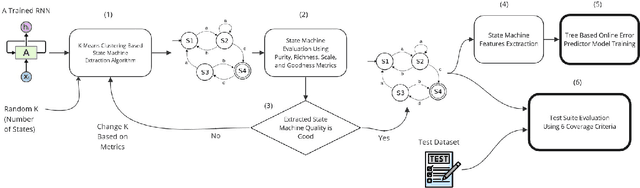

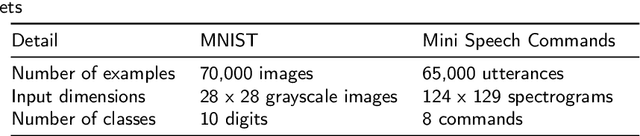

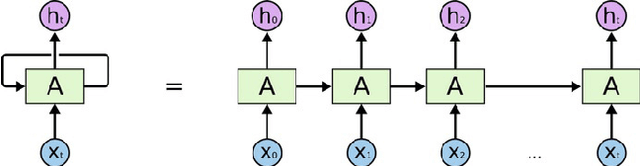

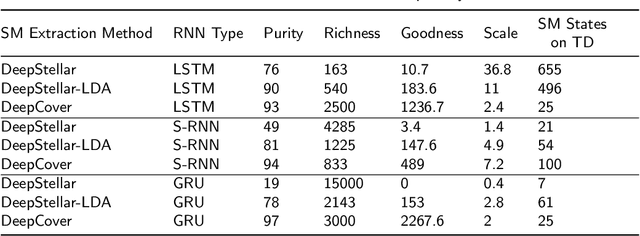

Recurrent neural networks (RNNs) have emerged as powerful tools for processing sequential data in various fields, including natural language processing and speech recognition. However, the lack of explainability in RNN models has limited their interpretability, posing challenges in understanding their internal workings. To address this issue, this paper proposes a methodology for extracting a state machine (SM) from an RNN-based model to provide insights into its internal function. The proposed SM extraction algorithm was assessed using four newly proposed metrics: Purity, Richness, Goodness, and Scale. The proposed methodology along with its assessment metrics contribute to increasing explainability in RNN models by providing a clear representation of their internal decision making process through the extracted SM. In addition to improving the explainability of RNNs, the extracted SM can be used to advance testing and and monitoring of the primary RNN-based model. To enhance RNN testing, we introduce six model coverage criteria based on the extracted SM, serving as metrics for evaluating the effectiveness of test suites designed to analyze the primary model. We also propose a tree-based model to predict the error probability of the primary model for each input based on the extracted SM. We evaluated our proposed online error prediction approach using the MNIST dataset and Mini Speech Commands dataset, achieving an area under the curve (AUC) exceeding 80\% for the receiver operating characteristic (ROC) chart.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge