Deep Superpixel-based Network for Blind Image Quality Assessment


Oct 13, 2021

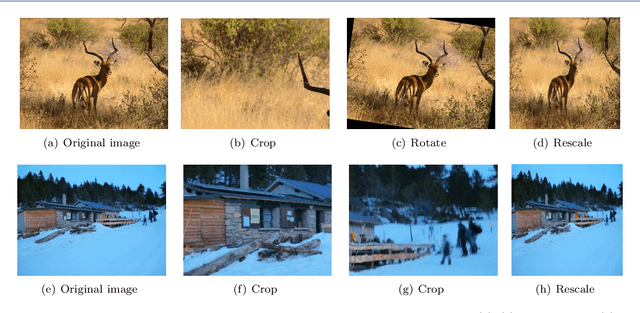

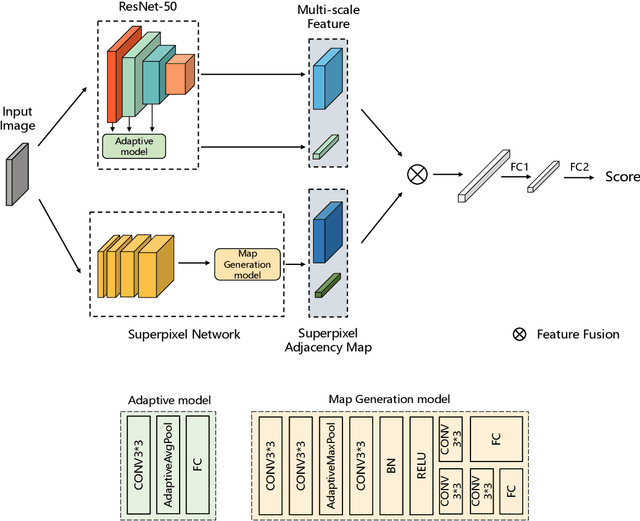

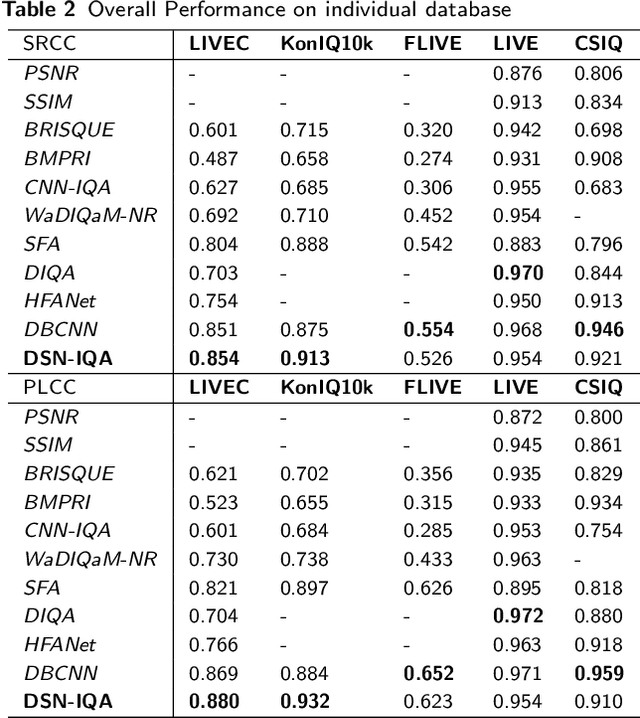

The goal of a blind image quality assessment (BIQA) model is to simulate how the human eye evaluates images and to assess image quality accurately. Although many approaches identify degradation effectively, they do not fully consider the semantic content of the images being assessed. To fill this gap, we propose a deep adaptive superpixel-based network, DSN-IQA, which assesses image quality based on multi-scale features and superpixel segmentation. DSN-IQA can adaptively accept images of arbitrary scale as input, making the assessment process similar to human perception. The network uses two models: one extracts multi-scale semantic features and the other generates a superpixel adjacency map. These two elements are combined via feature fusion to predict image quality accurately. Experimental results on different benchmark databases demonstrate that our algorithm is highly competitive with other approaches on challenging authentic image databases. In addition, owing to its adaptive deep superpixel-based design, our model accurately assesses images with complicated distortion, much like the human eye.
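To make the idea of a superpixel adjacency map concrete, here is a minimal sketch, not the authors' implementation. It assumes an image has already been segmented into superpixels, given as a 2-D grid of integer labels, and that two superpixels count as adjacent when they share a 4-connected pixel border. The function name `superpixel_adjacency` and that definition of adjacency are illustrative assumptions; the abstract does not say how DSN-IQA builds its map.

```python
def superpixel_adjacency(labels):
    """Build a symmetric 0/1 adjacency matrix from a 2-D superpixel label map.

    Assumption (not from the paper): labels are integers 0..n-1, and two
    superpixels are adjacent if any of their pixels touch horizontally
    or vertically.
    """
    n = max(max(row) for row in labels) + 1
    adj = [[0] * n for _ in range(n)]
    h, w = len(labels), len(labels[0])
    for y in range(h):
        for x in range(w):
            a = labels[y][x]
            # Checking only the right and bottom neighbours covers every
            # 4-connected pixel pair exactly once.
            for ny, nx in ((y, x + 1), (y + 1, x)):
                if ny < h and nx < w:
                    b = labels[ny][nx]
                    if a != b:
                        adj[a][b] = adj[b][a] = 1
    return adj


# Toy label map: three vertical strips, so superpixel 0 touches 1,
# 1 touches 2, and 0 never touches 2.
labels = [
    [0, 1, 2],
    [0, 1, 2],
]
adjacency = superpixel_adjacency(labels)
print(adjacency)
```

In a pipeline like the one the abstract outlines, a map of this kind would be one of the two inputs to feature fusion, alongside the multi-scale semantic features; how the two are actually fused is not specified here.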
