Deep Reinforcement Learning Graphs: Feedback Motion Planning via Neural Lyapunov Verification


Nov 29, 2023

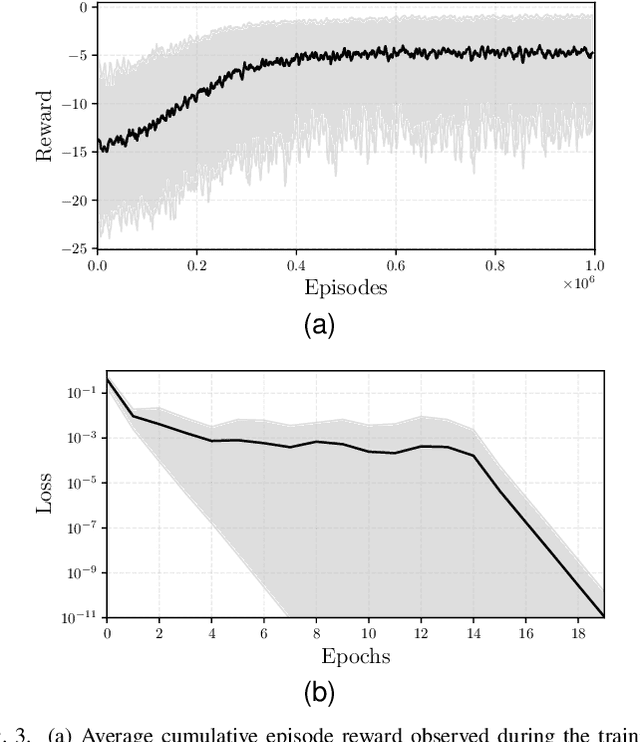

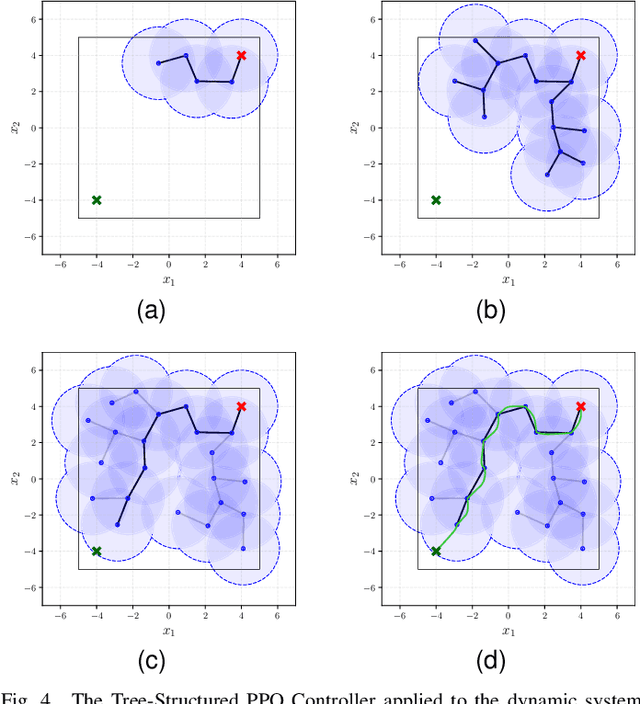

Recent advances in model-free deep reinforcement learning have enabled efficient agent training. However, it remains difficult to determine the region of attraction of the resulting controllers, and that region may not cover the whole area in which control is required. This paper addresses the issue by introducing a feedback motion control algorithm that uses data-driven techniques and neural networks. The algorithm constructs a graph of connected reinforcement-learning-based controllers, each with its own defined region of attraction. The graph is built incrementally until it covers a bounded region of interest, and a sequence of interconnected nodes then guides the system from an initial state to the goal. Two approaches are presented for connecting nodes. The first is a tree-structured method, which provides "point-to-point" control by constructing a tree that connects the initial state to the goal state. The second is a graph-structured method, which provides "space-to-space" control by building a graph within a bounded region, allowing control between arbitrary initial and goal states. The method is evaluated on a first-order dynamic system in scenarios both with and without obstacles. The results show that the proposed algorithm achieves the desired control objectives.
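To make the idea of chaining controllers concrete, the following is a minimal sketch and not the paper's implementation. It assumes that each node's region of attraction is a Euclidean ball around the node's equilibrium, whereas the paper estimates these regions with neural Lyapunov verification. It also assumes that controller `i` can hand over to controller `j` whenever the equilibrium of `i` lies inside the region of `j`. The names `Node`, `build_edges`, and `plan` are hypothetical, and a breadth-first search stands in for whatever node-connection strategy the authors use.

```python
import math
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Hypothetical local controller that drives states in its region to `center`."""
    center: tuple
    radius: float  # assumption: the region of attraction is a ball of this radius

    def contains(self, state):
        # True when `state` lies inside the assumed ball-shaped region.
        return math.dist(self.center, state) <= self.radius


def build_edges(nodes):
    """Add a directed edge i -> j when the equilibrium of i lies in the region of j."""
    edges = {i: [] for i in range(len(nodes))}
    for i, a in enumerate(nodes):
        for j, b in enumerate(nodes):
            if i != j and b.contains(a.center):
                edges[i].append(j)
    return edges


def plan(nodes, edges, start_state, goal_index):
    """Breadth-first search for a controller sequence from start_state to the goal node."""
    # Any node whose region contains the start state can act first.
    starts = [i for i, n in enumerate(nodes) if n.contains(start_state)]
    parent = {i: None for i in starts}
    queue = deque(starts)
    while queue:
        i = queue.popleft()
        if i == goal_index:
            # Walk the parent links back to a start node, then reverse.
            path = []
            while i is not None:
                path.append(i)
                i = parent[i]
            return path[::-1]
        for j in edges[i]:
            if j not in parent:
                parent[j] = i
                queue.append(j)
    return None  # the start state is not covered, or the goal node is unreachable


# Four overlapping regions along a line; the last node is the goal.
nodes = [Node((float(k), 0.0), 1.5) for k in range(4)]
edges = build_edges(nodes)
print(plan(nodes, edges, (-1.0, 0.0), goal_index=3))
```

In this toy setup the returned list is the order in which the local controllers would be activated. A start state outside every region yields `None`, which corresponds to the coverage gap that the incremental construction is meant to close.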
