Deep Reinforcement Learning for Human-Like Driving Policies in Collision Avoidance Tasks of Self-Driving Cars

Jun 19, 2020

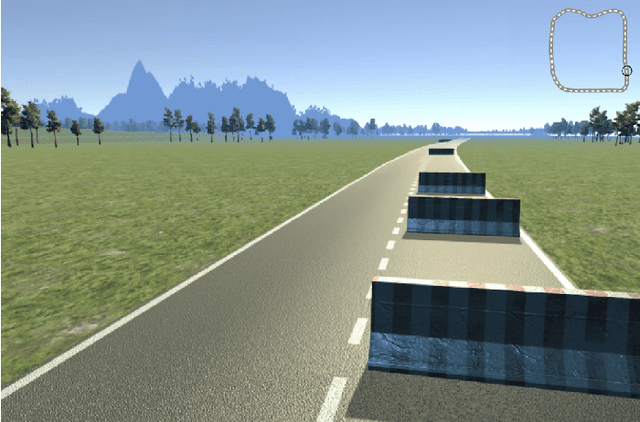

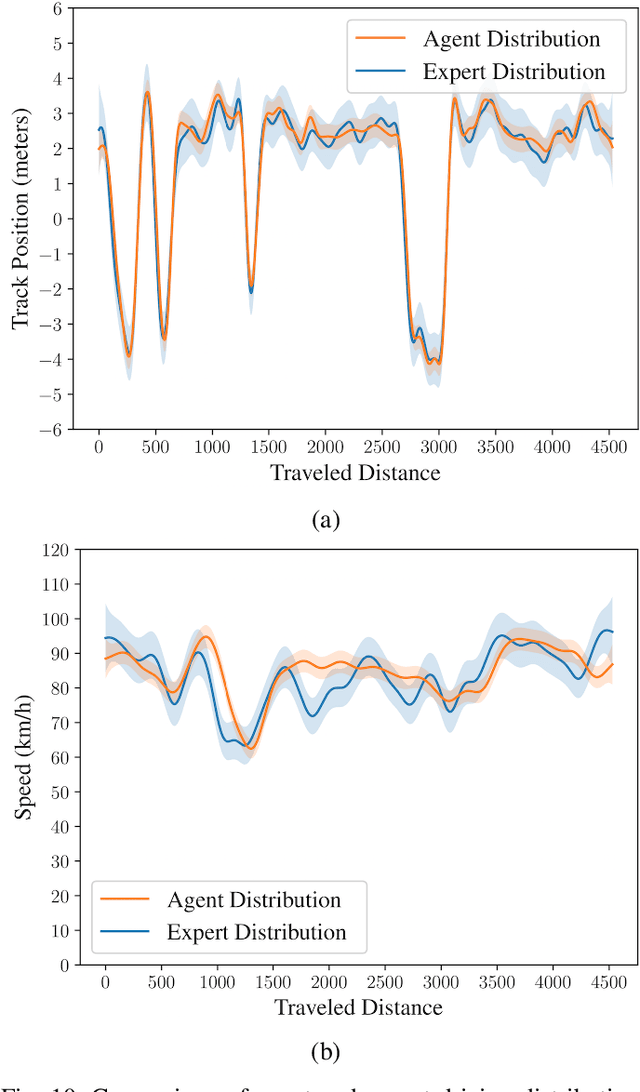

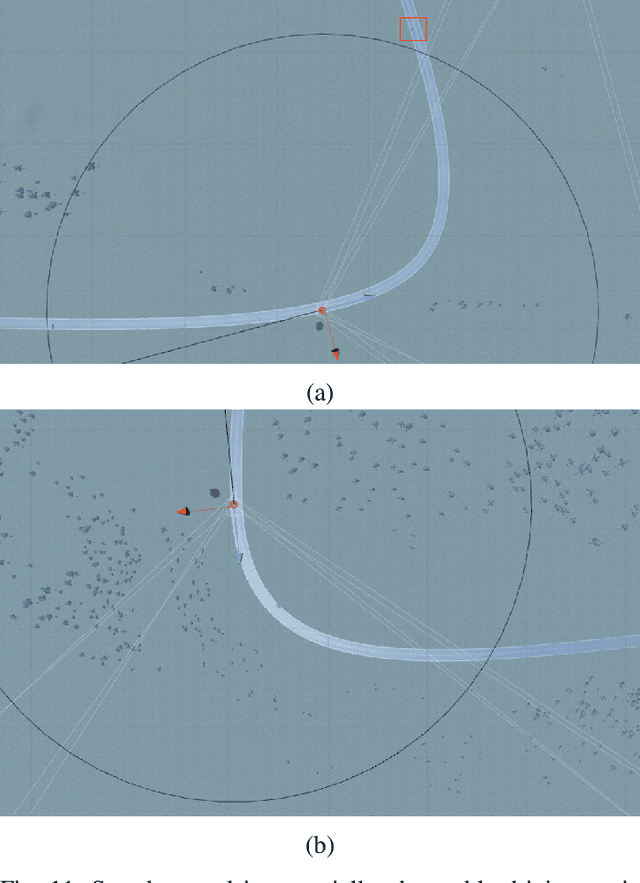

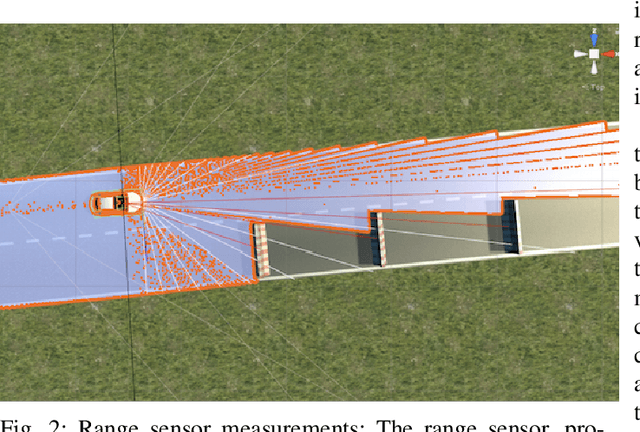

The technological and scientific challenges involved in the development of autonomous vehicles (AVs) are currently of primary interest for many automobile companies and research labs. However, human-controlled vehicles are likely to remain on the roads for several decades to come and may share the traffic environments of the future with AVs. In such mixed environments, AVs should deploy human-like driving policies and negotiation skills to enable smooth traffic flow. To generate automated human-like driving policies, we introduce a model-free, deep reinforcement learning approach to imitate an experienced human driver's behavior. We study a static obstacle avoidance task on a two-lane highway in simulation (Unity). Our control algorithm receives a stochastic feedback signal from two sources: a model-driven part, encoding simple driving rules such as lane-keeping and speed control, and a stochastic, data-driven part, incorporating human expert knowledge from driving data. To assess the similarity between machine and human driving, we model distributions of track position and speed as Gaussian processes. We demonstrate that our approach leads to human-like driving policies.
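The two-source feedback signal described above can be illustrated with a small sketch. This is not the authors' implementation: the function names, the weighting scheme, the target speed, the lane half-width, and the scale parameters are all hypothetical, chosen only to show how a rule-based term (lane-keeping and speed control) might be combined with a stochastic term that compares the agent's state to a randomly drawn human driving sample.

```python
import math
import random


def rule_based_reward(lateral_offset, speed, target_speed=25.0, lane_half_width=1.75):
    """Model-driven term (hypothetical): reward staying near the lane center
    and near a target speed. Returns a value in [0, 1]."""
    lane_term = 1.0 - min(abs(lateral_offset) / lane_half_width, 1.0)
    speed_term = 1.0 - min(abs(speed - target_speed) / target_speed, 1.0)
    return 0.5 * (lane_term + speed_term)


def human_likeness_reward(lateral_offset, speed, ref_offset, ref_speed,
                          offset_scale=0.5, speed_scale=2.0):
    """Data-driven term (hypothetical): Gaussian-shaped similarity between the
    agent's state and one human reference sample. Returns a value in (0, 1]."""
    z_pos = (lateral_offset - ref_offset) / offset_scale
    z_spd = (speed - ref_speed) / speed_scale
    return math.exp(-0.5 * (z_pos ** 2 + z_spd ** 2))


def combined_reward(lateral_offset, speed, human_samples, weight=0.5, rng=None):
    """Blend both terms. The stochasticity comes from drawing the human
    reference (offset, speed) pair at random from recorded samples."""
    rng = rng or random.Random()
    ref_offset, ref_speed = rng.choice(human_samples)
    r_rule = rule_based_reward(lateral_offset, speed)
    r_data = human_likeness_reward(lateral_offset, speed, ref_offset, ref_speed)
    return (1.0 - weight) * r_rule + weight * r_data


if __name__ == "__main__":
    # Made-up (lateral offset in m, speed in m/s) pairs standing in for human data.
    demo_samples = [(0.1, 24.0), (-0.2, 26.0), (0.0, 25.0)]
    print(combined_reward(0.05, 24.5, demo_samples, rng=random.Random(0)))
```

In this sketch the `weight` parameter trades off rule compliance against similarity to human data; how the two sources are actually combined in the paper is not specified in the abstract.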
