Deep Learning Inference Frameworks Benchmark

Paper and Code

Oct 09, 2022

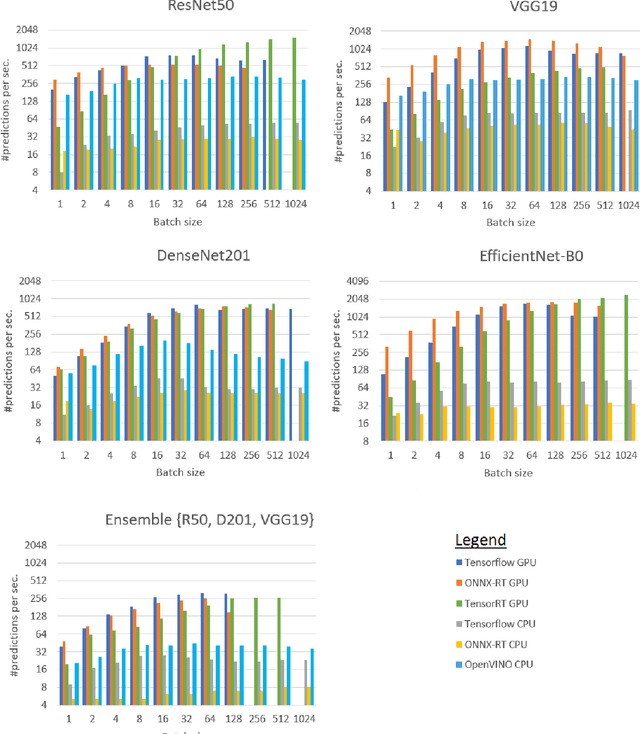

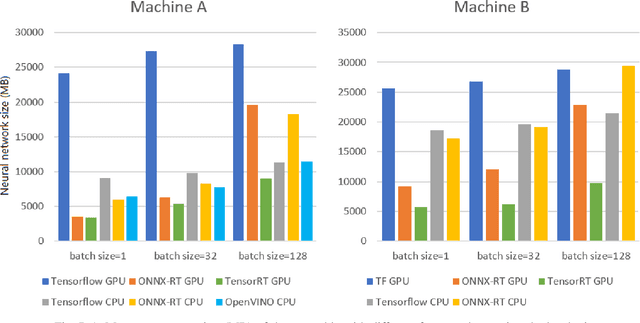

Deep learning (DL) has been widely adopted those last years but they are computing-intensive method. Therefore, scientists proposed diverse optimization to accelerate their predictions for end-user applications. However, no single inference framework currently dominates in terms of performance. This paper takes a holistic approach to conduct an empirical comparison and analysis of four representative DL inference frameworks. First, given a selection of CPU-GPU configurations, we show that for a specific DL framework, different configurations of its settings may have a significant impact on the prediction speed, memory, and computing power. Second, to the best of our knowledge, this study is the first to identify the opportunities for accelerating the ensemble of co-localized models in the same GPU. This measurement study provides an in-depth empirical comparison and analysis of four representative DL frameworks and offers practical guidance for service providers to deploy and deliver DL predictions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge