Deep Learning Approximation of Diffeomorphisms via Linear-Control Systems


Oct 24, 2021

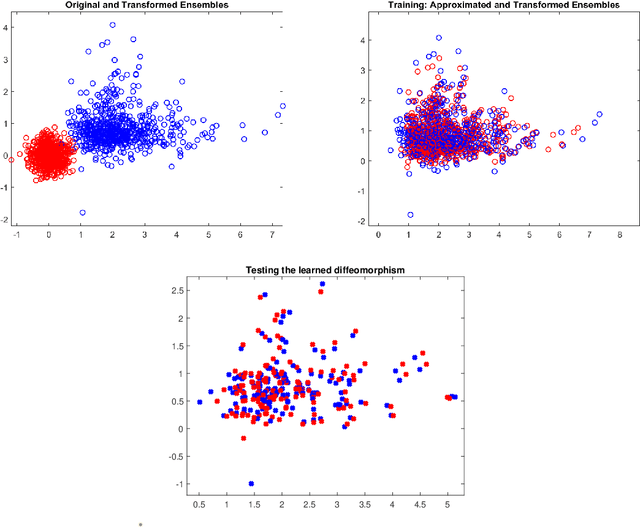

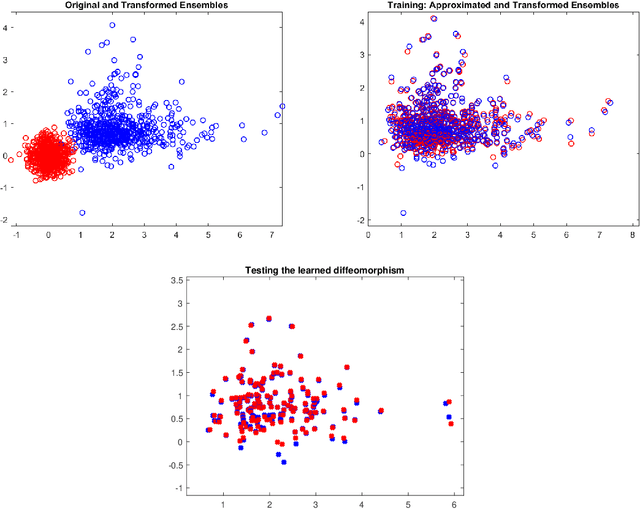

In this paper, we propose a Deep Learning architecture to approximate diffeomorphisms isotopic to the identity. We consider a control system of the form $\dot x = \sum_{i=1}^l F_i(x)u_i$, with linear dependence on the controls, and we use the corresponding flow to approximate the action of a diffeomorphism on a compact ensemble of points. Despite the simplicity of the control system, it has recently been shown that a Universal Approximation Property holds. The problem of minimizing the sum of the training error and a regularizing term induces a gradient flow in the space of admissible controls. A possible training procedure for the discrete-time neural network consists of projecting the gradient flow onto a finite-dimensional subspace of the admissible controls. An alternative approach relies on an iterative method based on the Pontryagin Maximum Principle for the numerical solution of Optimal Control problems. Here the maximization of the Hamiltonian can be carried out with extremely low computational effort, owing to the linear dependence of the system on the control variables.
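To make the abstract's two main ingredients concrete, the following is a minimal sketch and not the authors' implementation. It assumes an explicit-Euler time discretization with piecewise-constant controls, a quadratic regularizer $\frac{\beta}{2}|u|^2$, and a hypothetical choice of fields (translations plus one dilation); the names `fields`, `flow`, and `hamiltonian_argmax` are illustrative. Under these assumptions, each Euler step acts like a residual layer, and the Hamiltonian $u \mapsto \sum_j \langle \lambda_j, F(x_j)u \rangle - \frac{\beta}{2}|u|^2$ is a concave quadratic in $u$, so its maximizer has the closed form $u^* = \frac{1}{\beta}\sum_j F(x_j)^\top \lambda_j$.

```python
import numpy as np

def fields(x):
    """Hypothetical fields F_1..F_l at a point x in R^n, returned as an (n, l) matrix.

    Assumption for illustration: n constant fields (translations) and one
    linear field (dilation), so l = n + 1. The paper's fields may differ.
    """
    return np.hstack([np.eye(x.shape[0]), x[:, None]])

def flow(points, controls, T=1.0):
    """Explicit-Euler approximation of the flow of x' = F(x) u(t).

    points:   (M, n) array, the ensemble of points to transport.
    controls: (N, l) array, one constant control per time step.
    Each step x <- x + h F(x) u_k has the form of a residual layer.
    """
    h = T / len(controls)
    x = np.array(points, dtype=float)
    for u in controls:
        x = x + h * np.stack([fields(p) @ u for p in x])
    return x

def hamiltonian_argmax(points, costates, beta):
    """Maximizer of u -> sum_j <lam_j, F(x_j) u> - (beta / 2) |u|^2.

    Because the system is linear in u, the maximizer is available in
    closed form, assuming the quadratic regularizer stated above.
    """
    return sum(fields(p).T @ lam for p, lam in zip(points, costates)) / beta
```

For example, with two points in the plane, `flow(pts, np.zeros((4, 3)))` returns the points unchanged, and a constant control that activates only the first translation field shifts every point along the first coordinate. In an iterative scheme of the kind the abstract mentions, `hamiltonian_argmax` would be evaluated at each time step using the current states and costates.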
