Deep Image Compositing

Mar 29, 2021

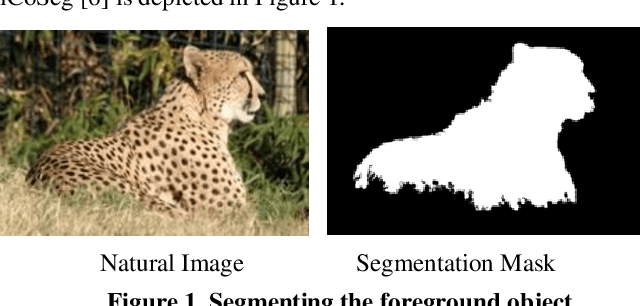

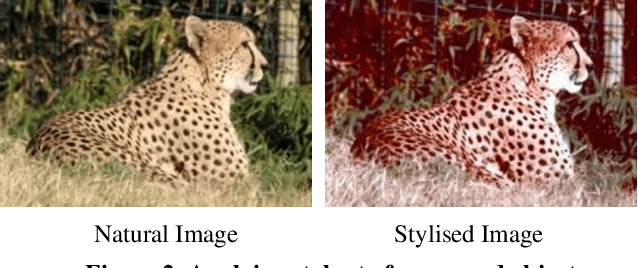

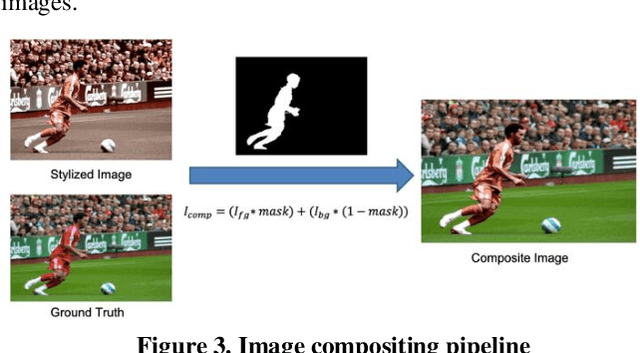

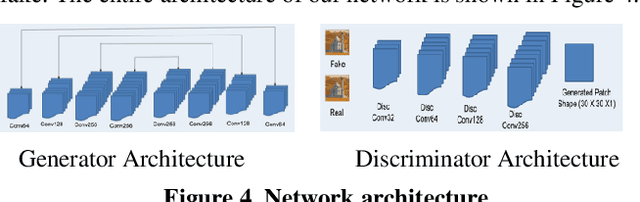

In image editing, one of the most common tasks is pasting an object from one image into another and then adjusting the appearance of the foreground object so that it matches the background. This task is called image compositing. Image compositing is a challenging problem that requires professional editing skills and a considerable amount of time. Not only are such professionals expensive to hire, but the tools used for the task (such as Adobe Photoshop) are also expensive to purchase, which makes image compositing difficult for people without this skill set. In this work, we aim to address this problem by making composite images look realistic. To achieve this, we use Generative Adversarial Networks (GANs). By training the network with a diverse range of filters applied to the images and with special loss functions, the model is able to decode the color histograms of the foreground and background parts of the image and also learns to blend the foreground object with the background. The hue and saturation values of the image play an important role, as discussed in this paper. To the best of our knowledge, this is the first work that uses GANs for the task of image compositing. Currently, there is no benchmark dataset available for image compositing, so we created one and will make it publicly available for benchmarking. Experimental results on this dataset show that our method outperforms all current state-of-the-art methods.
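To make the starting point concrete, the sketch below shows a naive cut-and-paste composite and a simple hue and saturation comparison between the pasted object and its surroundings. It is an illustration only, not the GAN-based method of the paper: the function names `composite` and `region_hue_saturation`, the list-of-rows image layout, and the 0.5 mask threshold are all assumptions made for this example.

```python
import colorsys
import math


def composite(foreground, background, mask):
    """Naive composite: out = m * fg + (1 - m) * bg for each channel.

    Images are lists of rows of (r, g, b) floats in [0, 1]; mask is a list
    of rows of floats in [0, 1], where 1 marks a foreground pixel.
    (This layout is an assumption chosen to keep the sketch dependency-free.)
    """
    return [
        [
            tuple(m * f + (1.0 - m) * b for f, b in zip(fg_px, bg_px))
            for fg_px, bg_px, m in zip(fg_row, bg_row, m_row)
        ]
        for fg_row, bg_row, m_row in zip(foreground, background, mask)
    ]


def region_hue_saturation(image, mask, foreground=True):
    """Return (circular mean hue, mean saturation) over one region of the image.

    Hue is an angle, so it is averaged on the unit circle rather than
    arithmetically. Both returned values lie in [0, 1).
    """
    sin_sum = cos_sum = sat_sum = 0.0
    count = 0
    for row, m_row in zip(image, mask):
        for px, m in zip(row, m_row):
            if (m >= 0.5) == foreground:  # 0.5 threshold is an assumption
                h, s, _ = colorsys.rgb_to_hsv(*px)
                sin_sum += math.sin(2 * math.pi * h)
                cos_sum += math.cos(2 * math.pi * h)
                sat_sum += s
                count += 1
    if count == 0:
        return 0.0, 0.0
    mean_hue = (math.atan2(sin_sum, cos_sum) / (2 * math.pi)) % 1.0
    return mean_hue, sat_sum / count


# Toy example: a saturated red object pasted onto a muted blue-grey scene.
fg = [[(1.0, 0.0, 0.0)] * 2 for _ in range(2)]
bg = [[(0.4, 0.4, 0.6)] * 2 for _ in range(2)]
mask = [[1.0, 0.0], [0.0, 0.0]]

out = composite(fg, bg, mask)
fg_hue, fg_sat = region_hue_saturation(out, mask, foreground=True)
bg_hue, bg_sat = region_hue_saturation(out, mask, foreground=False)
```

A large gap between the foreground and background statistics, as in this toy example, is the kind of mismatch that makes a naive composite look unrealistic and that a harmonization model would need to reduce.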
