Deep Faster Detection of Faint Edges in Noisy Images


Mar 26, 2018

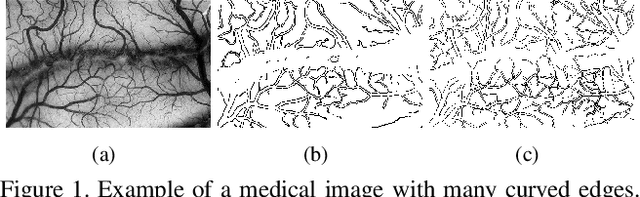

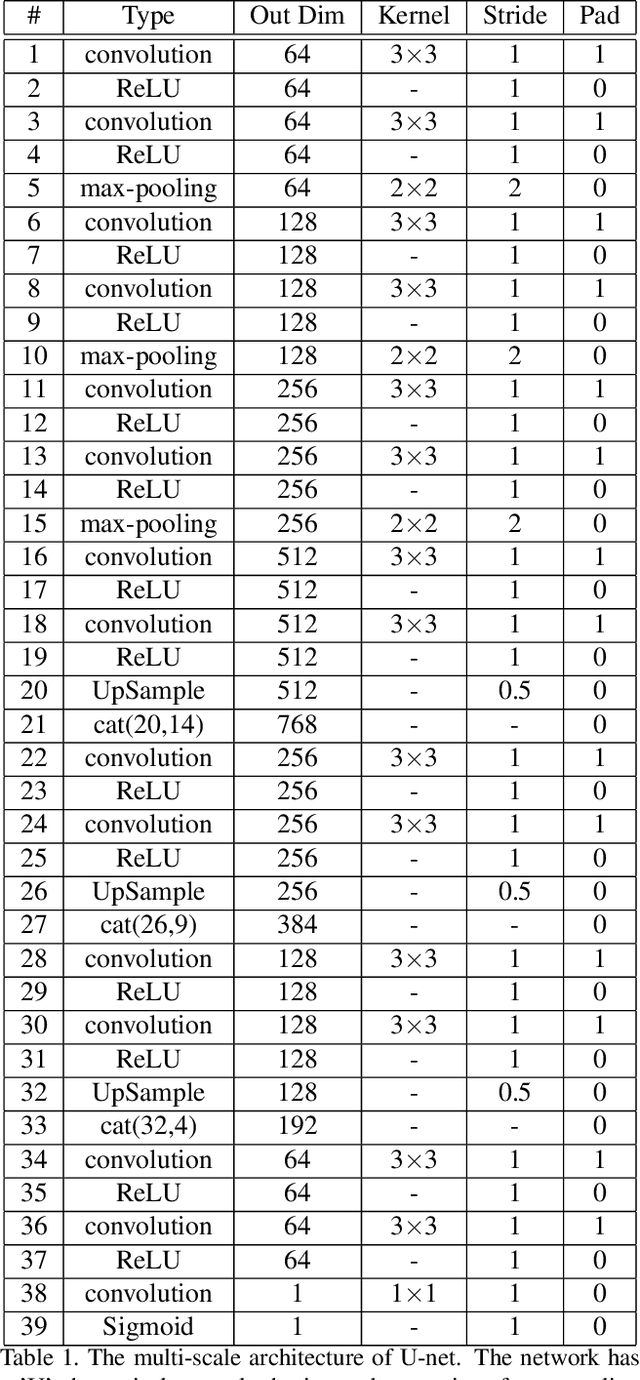

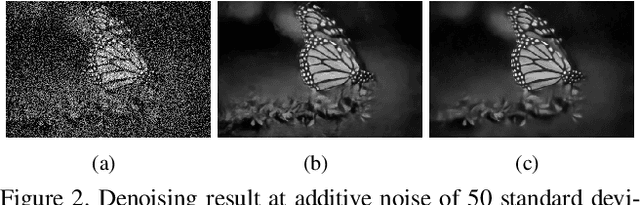

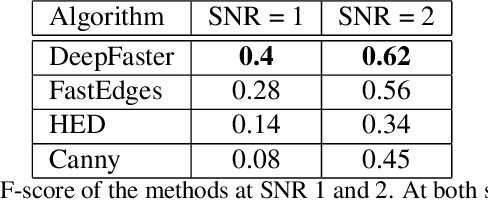

Detection of faint edges in noisy images is a challenging problem that has been studied for decades. \cite{ofir2016fast} introduced a fast method that detects faint edges with the highest accuracy among existing approaches. Its complexity is nearly linear in the number of image pixels, and its runtime is on the order of seconds for a noisy image. By utilizing the U-net architecture \cite{unet}, we show in this paper that this method can be dramatically improved in both run time and accuracy. By training the network on a dataset of binary images, we develop a method for faint edge detection that runs in linear complexity. Our runtime on a noisy image is on the order of milliseconds on a GPU. Even though our method is orders of magnitude faster, it still achieves higher detection accuracy in many challenging scenarios.
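As a rough illustration of what one training sample built from a binary image might look like, the following minimal sketch generates a noisy input and its edge mask. The abstract does not describe the data pipeline, so everything here is an assumption: the helper name `make_training_pair`, the single vertical step edge, the additive Gaussian noise, and the definition of SNR as edge contrast divided by noise standard deviation.

```python
import numpy as np

def make_training_pair(size=64, snr=2.0, seed=0):
    """Return (noisy_image, edge_mask) for one synthetic sample.

    Assumptions (not taken from the paper): a single straight step edge,
    additive Gaussian noise with unit standard deviation, and SNR defined
    as edge contrast divided by noise standard deviation.
    """
    rng = np.random.default_rng(seed)
    # Binary image: pixels at or right of a random column are 1, others 0.
    col = int(rng.integers(size // 4, 3 * size // 4))
    clean = np.zeros((size, size), dtype=np.float32)
    clean[:, col:] = 1.0
    # Ground-truth edge mask: the first column of the bright region.
    mask = np.zeros_like(clean)
    mask[:, col] = 1.0
    # Scale the contrast to the requested SNR, then add unit-variance noise.
    noise = rng.standard_normal((size, size)).astype(np.float32)
    noisy = snr * clean + noise
    return noisy, mask

noisy, mask = make_training_pair()
print(noisy.shape, mask.shape, int(mask.sum()))
```

Pairs like this could serve as input and target for a segmentation-style network such as U-net; the actual dataset, noise model, and loss used by the authors may differ.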
