Deep Autoencoder with SVD-Like Convergence and Flat Minima

Paper and Code

Oct 23, 2024

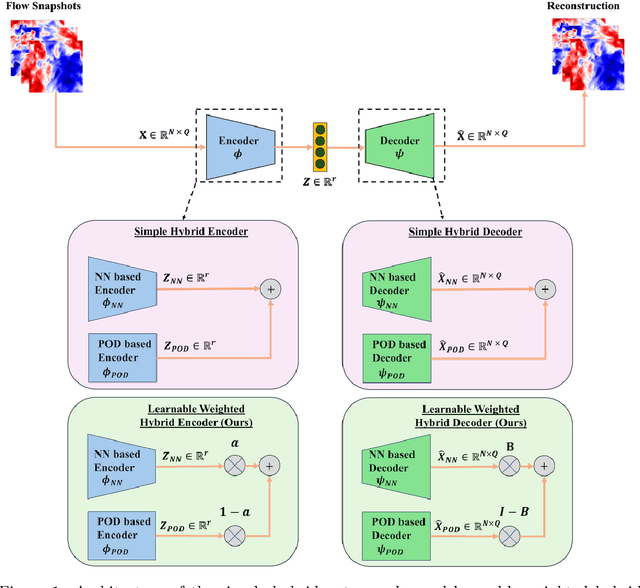

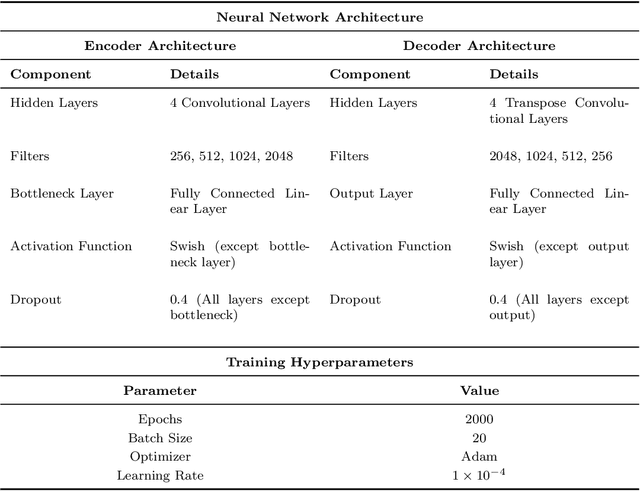

Representation learning for high-dimensional, complex physical systems aims to identify a low-dimensional intrinsic latent space, which is crucial for reduced-order modeling and modal analysis. To overcome the well-known Kolmogorov barrier, deep autoencoders (AEs) have been introduced in recent years, but they often converge poorly as the rank of the latent space increases. To address this issue, we propose the learnable weighted hybrid autoencoder, which combines the strengths of singular value decomposition (SVD) with deep autoencoders through a learnable weighted framework. We find that the learnable weighting parameters are essential: without them, the resulting model either collapses into a standard proper orthogonal decomposition (POD) or fails to exhibit the desired convergence behavior. Additionally, we find empirically that the sharpness of our trained model is thousands of times smaller than that of other models. Our experiments on classical chaotic PDE systems, including the 1D Kuramoto-Sivashinsky and forced isotropic turbulence datasets, demonstrate that our approach significantly improves generalization performance compared to several competing methods, paving the way for robust representation learning of high-dimensional, complex physical systems.
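To make the idea of a weighted SVD/autoencoder hybrid concrete, here is a minimal NumPy sketch of the forward pass only. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not give the exact formulation, so the additive form, the function names, and the weight parameters `a` and `b` are all hypothetical. In this sketch the weights start at zero, so the untrained model reproduces the rank-r POD projection, and training the weights would let the network correct it.

```python
import numpy as np

def pod_basis(X, rank):
    """Leading `rank` left singular vectors of a snapshot matrix X (features x samples)."""
    U, _, _ = np.linalg.svd(X, full_matrices=False)
    return U[:, :rank]

def init_params(n_features, rank, hidden, rng, scale=0.1):
    # Hypothetical parameterization (an assumption, not from the paper):
    # a one-hidden-layer encoder and decoder, plus weights `a` (per latent
    # dimension) and `b` (scalar) that scale the network branches.
    return {
        "We1": scale * rng.standard_normal((hidden, n_features)),
        "We2": scale * rng.standard_normal((rank, hidden)),
        "Wd1": scale * rng.standard_normal((hidden, rank)),
        "Wd2": scale * rng.standard_normal((n_features, hidden)),
        "a": np.zeros(rank),  # zero weights: the encoder is pure POD projection
        "b": 0.0,             # zero weight: the decoder is pure POD lifting
    }

def hybrid_encode(X, U, p):
    """Latent code = POD coefficients + weighted nonlinear encoder output."""
    nn_part = p["We2"] @ np.tanh(p["We1"] @ X)
    return U.T @ X + p["a"][:, None] * nn_part

def hybrid_decode(Z, U, p):
    """Reconstruction = POD lifting + weighted nonlinear decoder output."""
    nn_part = p["Wd2"] @ np.tanh(p["Wd1"] @ Z)
    return U @ Z + p["b"] * nn_part

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 50))   # toy snapshot matrix: 20 features, 50 samples
U = pod_basis(X, rank=4)
params = init_params(n_features=20, rank=4, hidden=8, rng=rng)

# With zero weights the hybrid model coincides with the rank-4 POD reconstruction.
X_hat = hybrid_decode(hybrid_encode(X, U, params), U, params)
pod_recon = U @ (U.T @ X)
```

In a real training loop the network matrices and the weights `a` and `b` would be optimized jointly by gradient descent on the reconstruction error; that step is omitted here because the abstract does not specify the loss or optimizer.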
