Deconvolutional Feature Stacking for Weakly-Supervised Semantic Segmentation

Mar 12, 2016

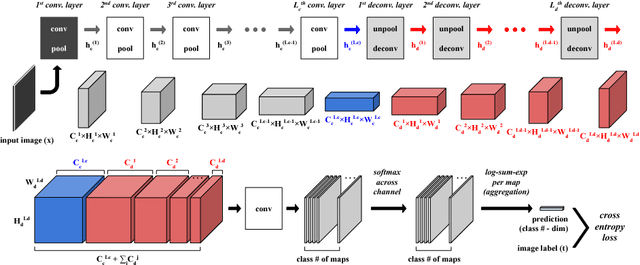

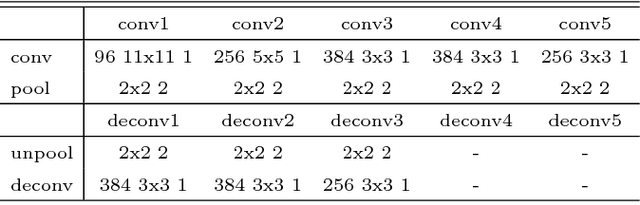

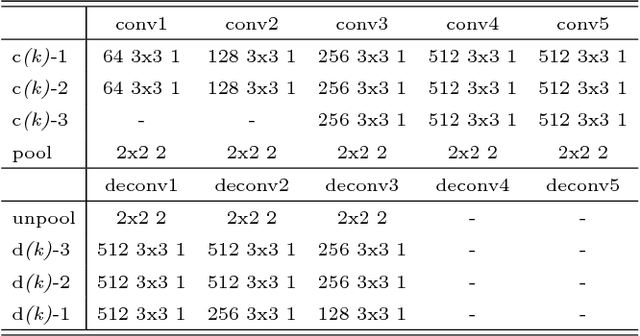

A weakly-supervised semantic segmentation framework built on a tied deconvolutional neural network is presented. Each deconvolution layer in the framework consists of an unpooling operation followed by a deconvolution operation. Unpooling upsamples the input feature map using the unpooling switches defined by the pooling operation of the corresponding convolution layer. Deconvolution then convolves the unpooled features with weights tied to the convolution operation of the corresponding convolution layer. This unpooling-deconvolution combination helps eliminate less discriminative features in the feature extraction stage, because the output features of each deconvolution layer are reconstructed from the most discriminative unpooled features rather than from the raw ones. This in turn reduces false positives in the pixel-level inference stage. The feature maps restored by all of the deconvolution layers together constitute a rich set of discriminative features spanning different levels of abstraction. These features are stacked and used selectively to generate class-specific activation maps. Under weak supervision (image-level labels), the proposed framework shows promising results on lesion segmentation in medical images (chest X-rays) and achieves state-of-the-art performance on the PASCAL VOC segmentation dataset under the same experimental conditions.
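
The unpooling and tied-deconvolution steps described in the abstract can be illustrated with a minimal single-channel sketch in pure Python. This is not the authors' implementation: the function names, the 2x2 pooling window, the toy input, and the toy kernel are all assumptions chosen for illustration, and the deconvolution is modelled here as a plain transposed convolution that reuses the forward kernel.

```python
def conv2d_valid(x, kernel):
    """Forward 'valid' convolution (cross-correlation) of one channel."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(x), len(x[0])
    return [[sum(x[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(w - kw + 1)]
            for i in range(h - kh + 1)]


def max_pool_with_switches(x, k=2):
    """Non-overlapping k x k max pooling that also records the switches,
    i.e. the (row, col) position of each window's maximum."""
    pooled, switches = [], []
    for i in range(0, len(x) - k + 1, k):
        prow, srow = [], []
        for j in range(0, len(x[0]) - k + 1, k):
            value, pos = max(((x[i + a][j + b], (i + a, j + b))
                              for a in range(k) for b in range(k)),
                             key=lambda t: t[0])
            prow.append(value)
            srow.append(pos)
        pooled.append(prow)
        switches.append(srow)
    return pooled, switches


def unpool(pooled, switches, shape):
    """Place each pooled value back at its recorded switch position;
    every other position stays zero."""
    h, w = shape
    out = [[0.0] * w for _ in range(h)]
    for prow, srow in zip(pooled, switches):
        for value, (i, j) in zip(prow, srow):
            out[i][j] = value
    return out


def tied_deconv2d(y, kernel):
    """Transposed convolution that reuses (ties) the forward kernel."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(y), len(y[0])
    out = [[0.0] * (w + kw - 1) for _ in range(h + kh - 1)]
    for i in range(h):
        for j in range(w):
            for a in range(kh):
                for b in range(kw):
                    out[i + a][j + b] += y[i][j] * kernel[a][b]
    return out


# Toy input and kernel (illustrative values only).
x = [[1, 2, 0, 1, 3],
     [0, 1, 4, 2, 1],
     [3, 0, 1, 0, 2],
     [1, 2, 2, 5, 0],
     [0, 1, 3, 1, 1]]
kernel = [[1, 0],
          [0, -1]]

features = conv2d_valid(x, kernel)                   # convolution layer: convolve ...
pooled, switches = max_pool_with_switches(features)  # ... then pool, keeping switches
unpooled = unpool(pooled, switches, (len(features), len(features[0])))
restored = tied_deconv2d(unpooled, kernel)           # same spatial size as x
```

In this sketch only the positions selected by the switches survive unpooling, so the restored map is rebuilt from the strongest responses alone, which mirrors the abstract's argument for why less discriminative features are suppressed. A full model would apply this per channel and per layer, and would stack the restored maps from every deconvolution layer.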
