De-Confusing Pseudo-Labels in Source-Free Domain Adaptation


Jan 03, 2024

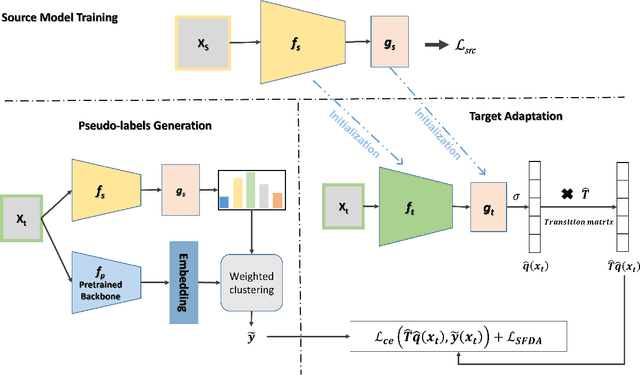

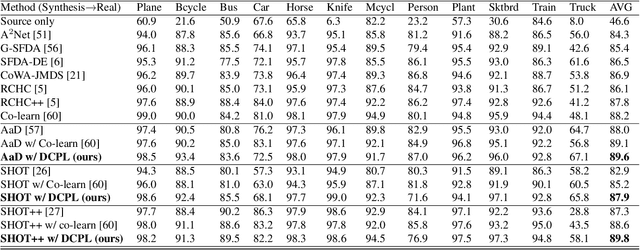

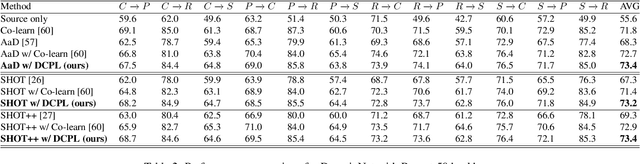

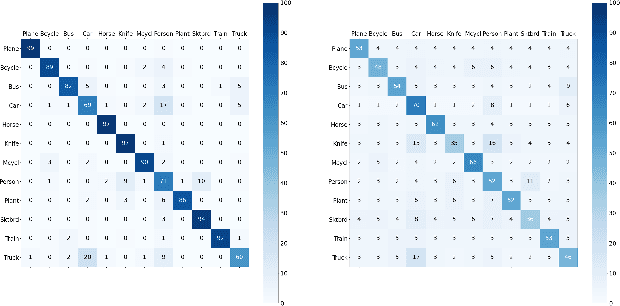

Source-free domain adaptation (SFDA) aims to transfer knowledge learned from a source domain to an unlabeled target domain when the source data is unavailable during adaptation. Existing SFDA approaches focus on self-training, usually combining well-established entropy minimization and pseudo-labeling techniques. Recent work suggested a co-learning strategy that improves the quality of the generated target pseudo-labels using robust pretrained networks such as Swin-B. However, since the generated pseudo-labels depend on the source model, they may be noisy due to domain shift. In this paper, we view SFDA from the perspective of label noise learning and learn to de-confuse the pseudo-labels. More specifically, we learn a noise transition matrix of the pseudo-labels to capture the label corruption of each class and learn the underlying true label distribution. Estimating the noise transition matrix enables better estimation of the true class posterior, which in turn yields better prediction accuracy. We demonstrate the effectiveness of our approach when applied to several SFDA methods: SHOT, SHOT++, and AaD. We obtain state-of-the-art results on three domain adaptation datasets: VisDA, DomainNet, and OfficeHome.
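To make the role of a noise transition matrix concrete, here is a minimal NumPy sketch of the generic "forward correction" idea from label noise learning. It is an illustration under stated assumptions, not the paper's implementation: the function names, the fixed matrix `T`, and the toy logits are all hypothetical, and in the approach described above the matrix would be learned rather than hand-set. The sketch assumes `T[i, j]` is the probability that a sample with true class `i` receives pseudo-label `j`, so the model's clean posterior is mapped to a noisy posterior before it is compared with the pseudo-labels.

```python
import numpy as np

def softmax(logits):
    # Numerically stable row-wise softmax.
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def noisy_posterior(clean_probs, T):
    # Assumed convention: T[i, j] = P(pseudo-label = j | true label = i),
    # so each row of T sums to 1 and P(pseudo = j | x) = sum_i P(true = i | x) * T[i, j].
    return clean_probs @ T

def forward_corrected_loss(logits, pseudo_labels, T, eps=1e-12):
    # Cross-entropy between the noisy posterior and the (possibly noisy) pseudo-labels.
    clean = softmax(logits)
    noisy = noisy_posterior(clean, T)
    picked = noisy[np.arange(len(pseudo_labels)), pseudo_labels]
    return float(-np.log(picked + eps).mean())

# Hypothetical 3-class transition matrix (hand-set here purely for illustration).
T = np.array([[0.9, 0.1, 0.0],
              [0.2, 0.7, 0.1],
              [0.0, 0.3, 0.7]])

# Toy target-domain logits and their pseudo-labels.
logits = np.array([[2.0, 0.5, -1.0],
                   [0.1, 1.5, 0.3]])
pseudo_labels = np.array([0, 1])

clean = softmax(logits)
noisy = noisy_posterior(clean, T)
loss = forward_corrected_loss(logits, pseudo_labels, T)
```

Because the loss is computed on the noisy posterior, the network's own softmax output is free to approximate the true class posterior; at test time one would predict from `clean` rather than `noisy`. With `T` set to the identity matrix the sketch reduces to ordinary pseudo-label cross-entropy.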
