Data Poisoning Attacks on Neighborhood-based Recommender Systems

Paper and Code

Dec 01, 2019

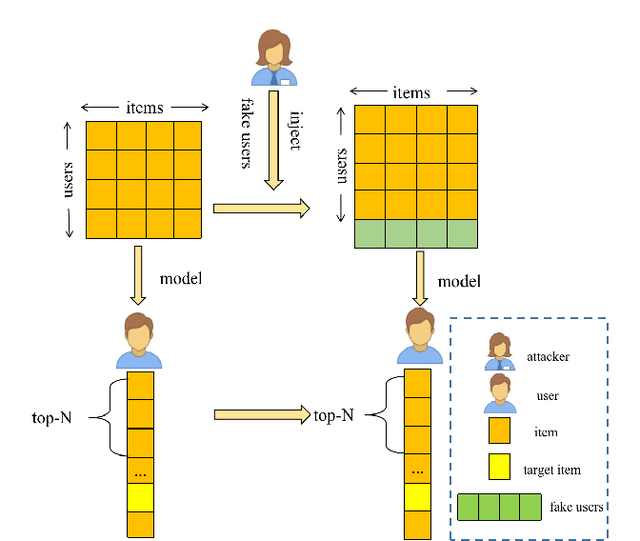

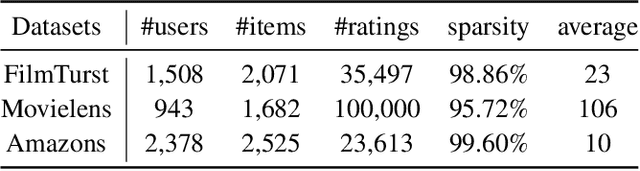

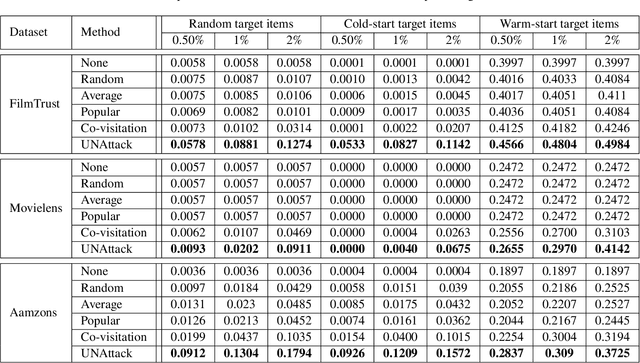

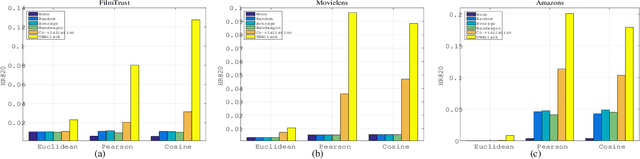

Collaborative filtering recommender systems are now widely deployed by commercial companies to drive profit, and neighbourhood-based collaborative filtering is a common and effective approach. Despite this effectiveness, little effort has been made to explore the robustness of these systems or the impact of data poisoning attacks on their performance. Can neighbourhood-based recommender systems be easily fooled? To answer this question, we shed light on the robustness of neighbourhood-based recommender systems and propose a novel data poisoning attack framework that encodes both the purpose of the attack and its constraints. We first illustrate how to calculate the optimal data poisoning attack, which we call UNAttack: we inject a few well-designed fake users into the recommender system so that the target items are recommended to as many normal users as possible. Extensive experiments on three real-world datasets validate the effectiveness and transferability of the proposed method. We also observe several interesting phenomena. For example, 1) neighbourhood-based recommender systems that use Euclidean distance-based similarity are highly robust, and 2) the fake users can be transferred to attack state-of-the-art collaborative filtering recommender systems such as Neural Collaborative Filtering and Bayesian Personalized Ranking Matrix Factorization.
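To make the threat model concrete, the following is a minimal sketch of fake-user injection against a user-based k-nearest-neighbour recommender. It is not UNAttack: the fake profile is hand-crafted rather than optimized, cosine similarity is assumed as the neighbourhood measure, and the toy rating matrix and all names (`cosine_sim`, `recommend`, `hit_count`, `R_real`, `fake`) are hypothetical and chosen only for illustration.

```python
import numpy as np

def cosine_sim(R):
    """Pairwise cosine similarity between the rows (users) of R."""
    norms = np.linalg.norm(R, axis=1, keepdims=True)
    norms[norms == 0] = 1.0          # avoid division by zero for empty profiles
    X = R / norms
    return X @ X.T

def recommend(R, n_real, k=2, top_n=1):
    """Top-N items for the first n_real users; all rows may act as neighbours."""
    S = cosine_sim(R)
    np.fill_diagonal(S, -np.inf)     # a user is never their own neighbour
    recs = []
    for u in range(n_real):
        nbrs = np.argsort(-S[u])[:k]         # k most similar users
        scores = S[u, nbrs] @ R[nbrs]        # similarity-weighted rating sums
        scores[R[u] > 0] = -np.inf           # skip items the user already rated
        recs.append(np.argsort(-scores)[:top_n])
    return np.array(recs)

def hit_count(R, n_real, target, **kw):
    """Number of real users whose top-N list contains the target item."""
    return int((recommend(R, n_real, **kw) == target).any(axis=1).sum())

# Toy data (assumption): 4 real users, 5 items, 0 means "not rated".
R_real = np.array([
    [5, 4, 0, 0, 0],
    [5, 0, 4, 0, 0],
    [4, 5, 0, 3, 0],
    [0, 4, 5, 0, 0],
], dtype=float)
target = 4                            # item the attacker wants promoted

# Hand-crafted fake profile: mimic popular items, give the target the top rating.
fake = np.array([4, 4, 4, 0, 5], dtype=float)
R_poisoned = np.vstack([R_real, fake, fake])   # inject two fake users

n_real = len(R_real)
before = hit_count(R_real, n_real, target)
after = hit_count(R_poisoned, n_real, target)
print("real users recommended the target before/after:", before, after)
```

The fake profile works by overlapping with items that real users have already rated, which places the fake users inside their neighbourhoods and lets the high target rating flow into their scores. An optimized attack such as the one described above would instead choose the fake ratings by solving for the profile that maximizes the number of affected users under a budget constraint.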
