DAGformer: Directed Acyclic Graph Transformer

Paper and Code

Oct 25, 2022

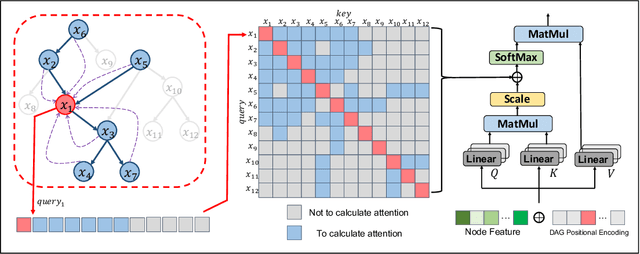

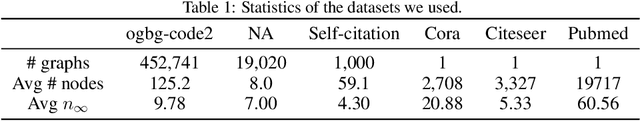

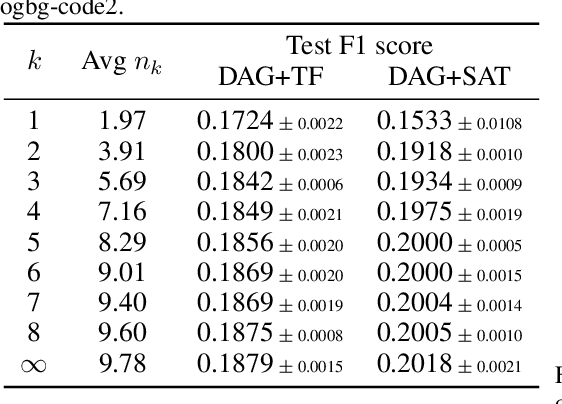

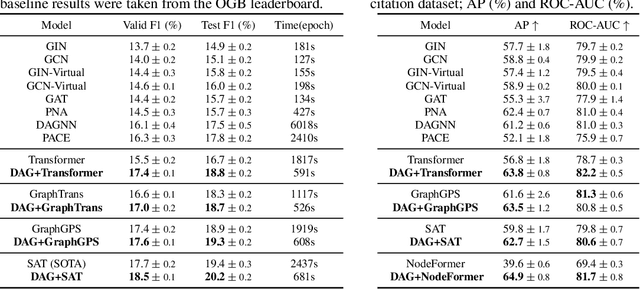

In many fields, such as natural language processing and computer vision, the Transformer architecture has become the standard. Recently, the Transformer architecture has also attracted a growing amount of interest in graph representation learning since it naturally overcomes some graph neural network (GNNs) restrictions. In this work, we focus on a special yet widely used class of graphs-DAGs. We propose the directed acyclic graph Transformer, DAGformer, a Transformer architecture that processes information according to the reachability relation defined by the partial order. DAGformer is simple and flexible, allowing it to be used with various transformer-based models. We show that our architecture achieves state-of-the-art performance on representative DAG datasets, outperforming all previous approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge