Critical Assessment of Transfer Learning for Medical Image Segmentation with Fully Convolutional Neural Networks

Paper and Code

May 30, 2020

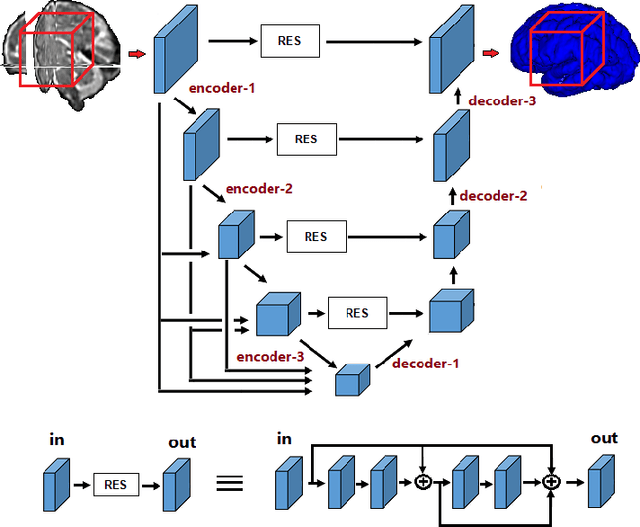

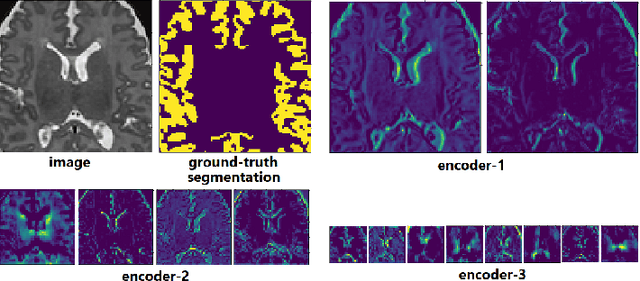

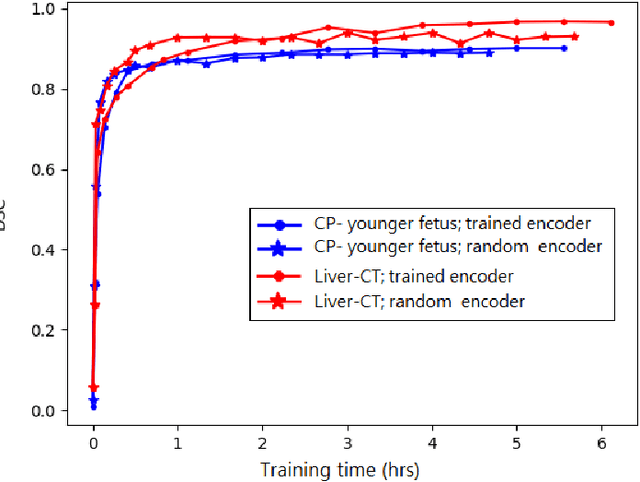

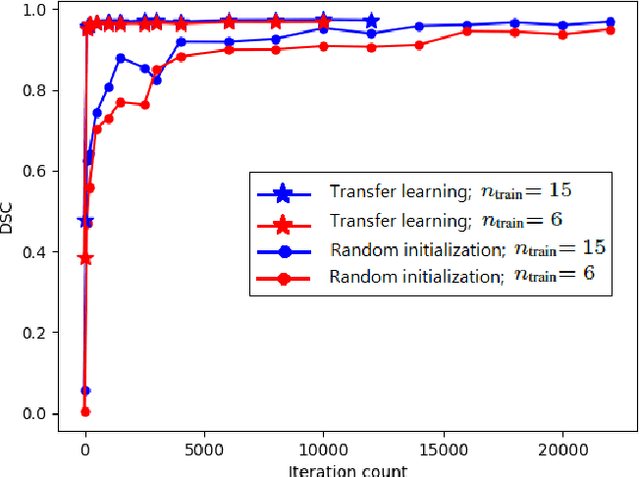

Transfer learning is widely used for training machine learning models. Here, we study the role of transfer learning in training fully convolutional networks (FCNs) for medical image segmentation. Our experiments show that although transfer learning reduces the training time on the target task, the improvement in segmentation accuracy is highly task- and data-dependent. Larger improvements in accuracy are observed when the segmentation task is more challenging and the target training data is smaller. We observe that the convolutional filters of an FCN change little during training for medical image segmentation, and still look random at convergence. We further show that quite accurate FCNs can be built by freezing the encoder section of the network at random values and training only the decoder section. At least for medical image segmentation, this finding challenges the common belief that the encoder section needs to learn data- and task-specific representations. We examine the evolution of FCN representations to gain better insight into the effects of transfer learning on the training dynamics. Our analysis shows that although FCNs trained via transfer learning learn different representations than FCNs trained with random initialization, the variability among FCNs trained via transfer learning can be as high as that among FCNs trained with random initialization. Moreover, feature reuse is not restricted to the early encoder layers; rather, it can be more significant in deeper layers. These findings offer new insights and suggest alternative ways of training FCNs for medical image segmentation.
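The idea of freezing the encoder at random values and training only the decoder can be illustrated with a toy sketch. The example below is not the paper's FCN or its data: it assumes a 1D noisy signal in place of an image, a handful of random convolution filters with ReLU as a stand-in "encoder", and a per-position logistic regression as a stand-in "decoder". All function names, sizes, and hyperparameters are hypothetical and chosen only for illustration.

```python
import math
import random


def make_signal(n, rng):
    """Toy 1D 'image': alternating background (0) and foreground (1) runs plus noise."""
    mask, pos, label = [0] * n, 0, 0
    while pos < n:
        run = rng.randint(10, 25)
        for i in range(pos, min(pos + run, n)):
            mask[i] = label
        pos += run
        label = 1 - label
    signal = [m + rng.gauss(0.0, 0.3) for m in mask]
    return signal, mask


def encode(signal, filters):
    """Stand-in encoder: fixed random 1D convolutions followed by ReLU."""
    half = len(filters[0]) // 2
    padded = [signal[0]] * half + signal + [signal[-1]] * half
    feats = []
    for i in range(len(signal)):
        window = padded[i:i + 2 * half + 1]
        feats.append([max(0.0, sum(w * x for w, x in zip(f, window)))
                      for f in filters])
    return feats


def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def predict(phi, w, b):
    return sigmoid(b + sum(wi * fi for wi, fi in zip(w, phi)))


def log_loss(feats, labels, w, b):
    total = 0.0
    for phi, y in zip(feats, labels):
        p = min(max(predict(phi, w, b), 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


def train_decoder(feats, labels, steps=300, lr=0.05):
    """Stand-in decoder: full-batch gradient descent on the decoder weights only."""
    k, n = len(feats[0]), len(labels)
    w, b = [0.0] * k, 0.0
    for _ in range(steps):
        gw, gb = [0.0] * k, 0.0
        for phi, y in zip(feats, labels):
            err = predict(phi, w, b) - y
            gb += err
            for j in range(k):
                gw[j] += err * phi[j]
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def run_demo(seed=0):
    rng = random.Random(seed)
    # Encoder filters are drawn once at random and never updated afterwards.
    filters = [[rng.uniform(-1.0, 1.0) for _ in range(5)] for _ in range(8)]
    snapshot = [list(f) for f in filters]
    signal, mask = make_signal(200, rng)
    feats = encode(signal, filters)
    initial = log_loss(feats, mask, [0.0] * len(filters), 0.0)
    w, b = train_decoder(feats, mask)
    final = log_loss(feats, mask, w, b)
    return {"initial_loss": initial, "final_loss": final,
            "encoder_unchanged": filters == snapshot}


if __name__ == "__main__":
    print(run_demo())
```

Only the decoder weights `w` and `b` receive gradient updates; the filters are treated as constants throughout. How well such a setup performs on real segmentation tasks is an empirical question that this toy example does not answer.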
