Coordinate Descent Methods for Fractional Minimization

Jan 30, 2022

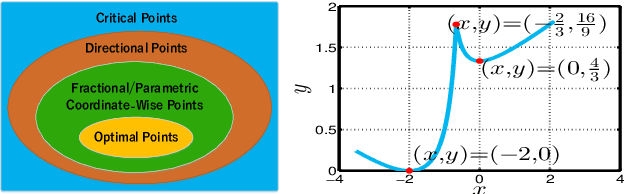

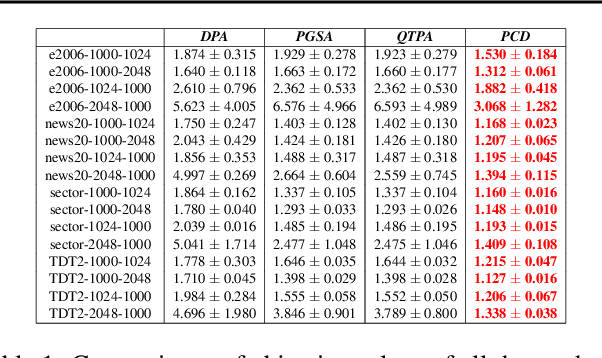

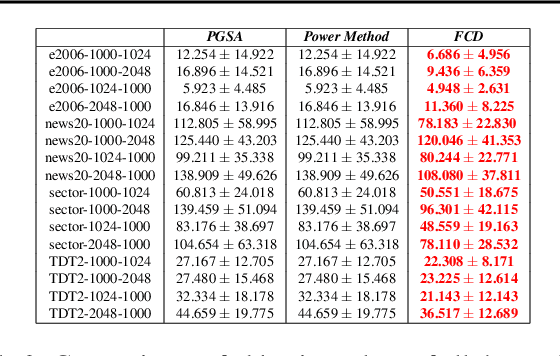

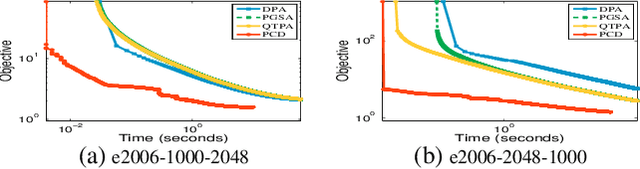

We consider a class of structured fractional minimization problems in which the numerator of the objective is the sum of a differentiable convex function and a convex nonsmooth function, while the denominator is a concave or convex function. The problem is difficult to solve because it is nonconvex. By exploiting its structure, we propose two Coordinate Descent (CD) methods: one is applied to the original fractional function, and the other is based on the associated parametric problem. Both methods iteratively solve a one-dimensional subproblem *globally*, and both are guaranteed to converge to coordinate-wise stationary points. When the denominator is convex, we prove that the proposed CD methods, which use sequential nonconvex approximation, find stronger stationary points than existing methods. Under suitable conditions, CD methods with an appropriate initialization converge linearly to the optimal point, which is also a coordinate-wise stationary point. When the denominator is concave, we show that the resulting problem is quasi-convex and that any critical point is a global minimum; in this case we prove that the algorithms converge to the globally optimal solution at a sublinear rate. We demonstrate the applicability of the proposed methods to several machine learning and signal processing models. Experiments on real-world data show that the proposed methods significantly and consistently outperform existing methods in terms of accuracy.
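To make the coordinate-wise idea concrete, the following is a minimal sketch, not the paper's algorithm. It applies coordinate descent directly to a toy fractional objective whose numerator is a least-squares term plus an L1 penalty and whose denominator is a convex quadratic. The problem data (`A`, `b`, `rho`), the function names, and the use of a dense grid search as a stand-in for the exact global one-dimensional solver are all assumptions made for illustration.

```python
import numpy as np

def fractional_objective(x, A, b, rho):
    """Toy objective (assumed for illustration): (f(x) + h(x)) / g(x)."""
    # Numerator: smooth convex least-squares term + nonsmooth convex L1 term.
    # The constant 1.0 keeps the numerator strictly positive.
    numerator = 0.5 * np.sum((A @ x - b) ** 2) + rho * np.sum(np.abs(x)) + 1.0
    # Denominator: convex and strictly positive.
    denominator = np.sum(x ** 2) + 1.0
    return numerator / denominator

def coordinate_descent(x0, A, b, rho, sweeps=20, radius=5.0, grid_size=2001):
    """Cyclic coordinate descent on the fractional objective.

    Each one-dimensional subproblem is solved only approximately, by a
    dense grid search over steps in [-radius, radius]. This is a stand-in
    for an exact global 1-D solver. The zero step is always a candidate,
    so the objective can never increase.
    """
    x = np.asarray(x0, dtype=float).copy()
    steps = np.append(np.linspace(-radius, radius, grid_size), 0.0)
    history = [fractional_objective(x, A, b, rho)]
    for _ in range(sweeps):
        for i in range(x.size):
            # Build all candidate points that differ from x in coordinate i.
            candidates = np.tile(x, (steps.size, 1))
            candidates[:, i] += steps
            values = [fractional_objective(c, A, b, rho) for c in candidates]
            x = candidates[int(np.argmin(values))].copy()
        history.append(fractional_objective(x, A, b, rho))
    return x, history

# Hypothetical problem data, chosen only to exercise the sketch.
A = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
b = np.array([1.0, 0.0, -1.0])
x_final, history = coordinate_descent(np.zeros(2), A, b, rho=0.1)
print("objective per sweep:", np.round(history, 4))
```

Because the zero step is always among the candidates, the recorded objective values are non-increasing across sweeps. A grid search gives no global-optimality guarantee for the one-dimensional subproblem, so this sketch should not be read as reproducing the convergence results stated in the abstract.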
