ConvSRC: SmartPhone based Periocular Recognition using Deep Convolutional Neural Network and Sparsity Augmented Collaborative Representation


Jan 16, 2018

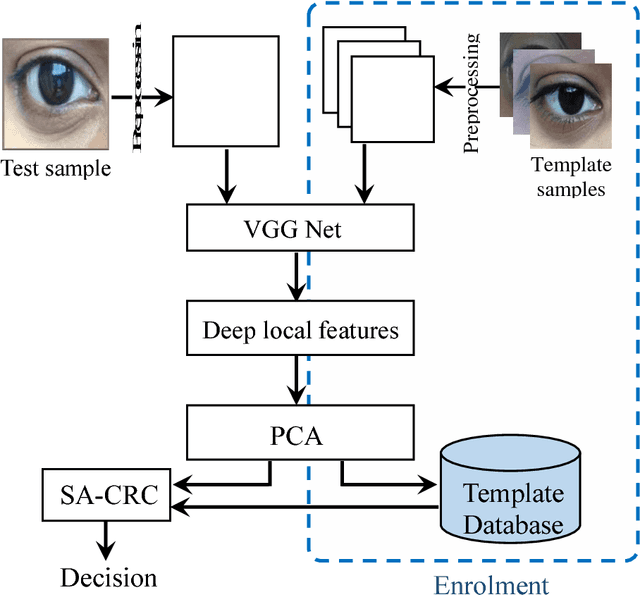

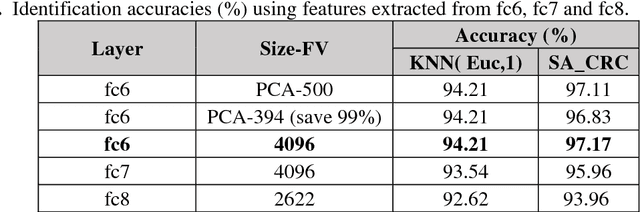

Smartphone-based periocular recognition has gained significant attention from the biometric research community because of the limitations of other biometric modalities such as face and iris. Most existing methods for periocular recognition employ hand-crafted features. Recently, learning-based image representation techniques such as deep Convolutional Neural Networks (CNNs) have shown outstanding performance in many visual recognition tasks. A CNN needs a huge volume of data for training, but only a limited amount of data is available for periocular recognition. One solution is to use a CNN pre-trained on a dataset from a related domain; the challenge then is to extract discriminative features efficiently. Using a pre-trained CNN model (VGG-Net), we propose a simple, efficient and compact image representation technique that takes into account the wealth of information and the sparsity present in the activations of the convolutional layers, and employs principal component analysis. For recognition, we use an efficient and robust Sparse Augmented Collaborative Representation based Classification (SA-CRC) technique. For a thorough evaluation of ConvSRC (the proposed system), experiments were carried out on the challenging VISOB database, which was introduced for the periocular recognition competition at ICIP 2016. The results show the superiority of ConvSRC over state-of-the-art methods: it obtains a genuine match rate (GMR) of more than 99% at a false match rate (FMR) of 10⁻³ and outperforms the winner of the ICIP 2016 challenge by 10%.
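The abstract names SA-CRC without spelling out how it classifies. The sketch below shows one common reading of sparsity-augmented collaborative representation, offered as an illustration rather than the authors' code: a dense collaborative (CRC) code from ridge-regularized least squares over all training features, a sparse code from orthogonal matching pursuit (OMP), the two codes l2-normalized and summed, and the class whose coefficients sum highest chosen as the label. The combination rule, the parameters `lam` and `k`, and the function names `omp` and `sa_crc_classify` are assumptions. In ConvSRC the columns of `D` would presumably be the PCA-reduced CNN features of the gallery images.

```python
import numpy as np


def omp(D, y, k):
    """Orthogonal Matching Pursuit: approximate y with at most k columns (atoms) of D."""
    coef = np.zeros(D.shape[1])
    support = []
    residual = y.copy()
    sol = np.zeros(0)
    for _ in range(k):
        if np.linalg.norm(residual) < 1e-12:
            break  # y is already represented exactly
        # Pick the atom most correlated with the current residual.
        idx = int(np.argmax(np.abs(D.T @ residual)))
        if idx in support:
            break
        support.append(idx)
        # Re-fit y on all selected atoms (least squares), then update the residual.
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol
    coef[support] = sol
    return coef


def sa_crc_classify(D, labels, y, lam=1e-2, k=3):
    """Hypothetical SA-CRC sketch. D: (features, samples), l2-normalized columns;
    labels: class label of each column; y: l2-normalized query feature."""
    # Dense collaborative code: argmin ||y - D a||^2 + lam * ||a||^2.
    gram = D.T @ D + lam * np.eye(D.shape[1])
    alpha = np.linalg.solve(gram, D.T @ y)
    # Sparse code from OMP.
    beta = omp(D, y, k)
    # Augment the dense code with the sparse one (both l2-normalized).
    code = alpha / np.linalg.norm(alpha) + beta / np.linalg.norm(beta)
    # Score each class by the sum of its coefficients; return the best class.
    classes = np.unique(labels)
    scores = np.array([code[labels == c].sum() for c in classes])
    return classes[int(np.argmax(scores))]
```

Summing per-class coefficients, rather than comparing per-class reconstruction residuals as in classic SRC, keeps the decision step cheap, which fits the abstract's emphasis on efficiency.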
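The headline result, GMR at FMR = 10⁻³, is the fraction of genuine comparisons accepted at the decision threshold where at most 0.1% of impostor comparisons are accepted. A minimal sketch of that computation follows, assuming similarity scores (higher means more alike) and strict `>` acceptance; the function name `gmr_at_fmr` is hypothetical and not part of any evaluation kit described in the source.

```python
def gmr_at_fmr(genuine, impostor, target_fmr=1e-3):
    """Return (GMR, threshold) at the threshold whose FMR does not exceed target_fmr.

    Assumes similarity scores: a comparison is accepted if score > threshold.
    """
    if not 0 < target_fmr < 1:
        raise ValueError("target_fmr must be in (0, 1)")
    imp = sorted(impostor)
    n = len(imp)
    # Maximum number of impostor comparisons allowed to be accepted.
    k = int(target_fmr * n)
    # At most k impostor scores lie strictly above this value (fewer if there are ties).
    threshold = imp[n - k - 1]
    gmr = sum(s > threshold for s in genuine) / len(genuine)
    return gmr, threshold


# Usage: 1000 impostor scores, so at FMR = 1e-3 at most one may be accepted.
impostor_scores = list(range(1000))
genuine_scores = [997, 998.5, 999.5, 1000]
print(gmr_at_fmr(genuine_scores, impostor_scores))  # prints (0.75, 998)
```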
