Convergence Rates for Multi-class Logistic Regression Near Minimum


Jan 10, 2021

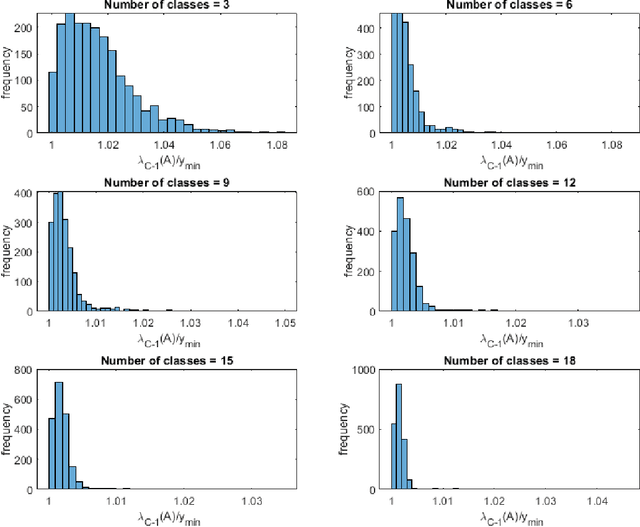

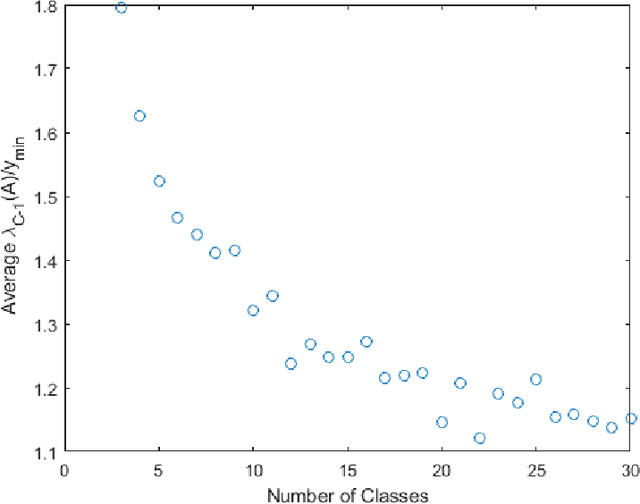

Training a neural network is typically done with variants of gradient descent. Assuming the loss function has a minimum and gradient descent is the training method, we give an expression relating the learning rate to the rate of convergence to that minimum. We also discuss when a minimum exists.

* 13 pages, 2 figures
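To make "learning rate versus rate of convergence near a minimum" concrete, here is a minimal sketch of the textbook local analysis. It is not the expression derived in the paper. It assumes the loss near the minimum is well approximated by a quadratic whose Hessian has strictly positive eigenvalues `eigs`. L2 regularization is one way to guarantee this; unregularized softmax regression has a flat direction (adding the same vector to every class's weights leaves the loss unchanged). Under that assumption, each gradient-descent step shrinks the error by at most max_i |1 - eta * lambda_i|. The function names `local_rate`, `optimal_eta`, and `gd_error_ratio` are hypothetical helpers written for this illustration.

```python
# Generic local convergence rate of gradient descent near a strict minimum.
# Assumption (hypothetical model, not the paper's result): near the minimum the
# loss is the quadratic f(x) = 0.5 * sum(lambda_i * x_i**2) with all lambda_i > 0.

def local_rate(eta, eigs):
    """Asymptotic per-step contraction factor max_i |1 - eta * lambda_i|."""
    return max(abs(1.0 - eta * lam) for lam in eigs)


def optimal_eta(eigs):
    """Step size that minimizes local_rate: 2 / (lambda_min + lambda_max)."""
    return 2.0 / (min(eigs) + max(eigs))


def gd_error_ratio(eta, eigs, x0, steps):
    """Run `steps` (>= 1) GD steps on the quadratic above, whose minimum is x = 0.

    The gradient is lambda_i * x_i coordinate-wise, so each update is
    x_i <- (1 - eta * lambda_i) * x_i. Returns ||x_steps|| / ||x_{steps-1}||,
    the contraction observed in the final step.
    """
    x = list(x0)
    prev_norm = None
    for _ in range(steps):
        prev_norm = sum(v * v for v in x) ** 0.5
        x = [(1.0 - eta * lam) * v for lam, v in zip(eigs, x)]
    return sum(v * v for v in x) ** 0.5 / prev_norm


if __name__ == "__main__":
    eigs = [0.5, 4.0]  # hypothetical Hessian eigenvalues, condition number 8
    print(local_rate(0.1, eigs))                      # slow: dominated by lambda_min
    print(local_rate(optimal_eta(eigs), eigs))        # best possible: (8 - 1) / (8 + 1)
    print(gd_error_ratio(0.1, eigs, [1.0, 1.0], 200)) # empirical ratio approaches local_rate(0.1, eigs)
    print(local_rate(0.6, eigs))                      # eta > 2 / lambda_max: factor > 1, diverges
```

In this model, convergence requires 0 < eta < 2 / lambda_max. The best factor, (kappa - 1) / (kappa + 1) with kappa = lambda_max / lambda_min, is reached at eta = 2 / (lambda_min + lambda_max). A learning rate that is too small leaves the factor close to 1, and one that is too large makes it exceed 1.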
