Control and Coordination of a SWARM of Unmanned Surface Vehicles using Deep Reinforcement Learning in ROS

Apr 17, 2023

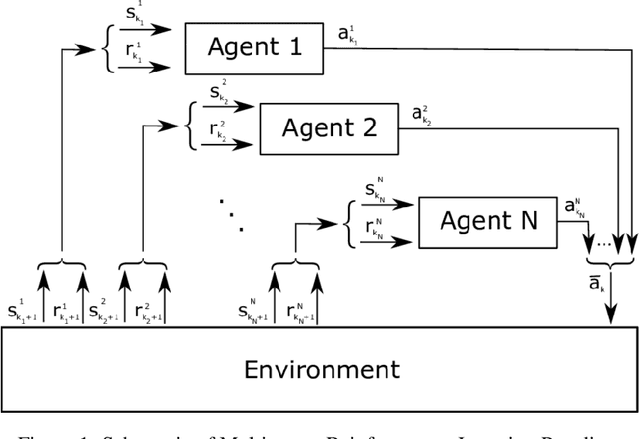

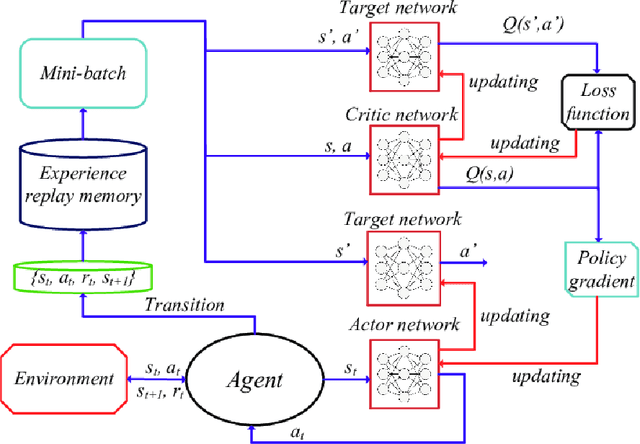

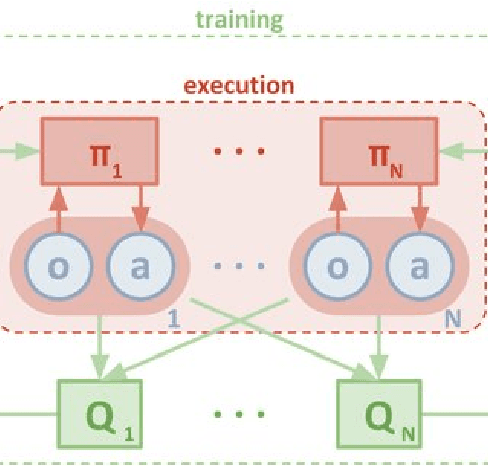

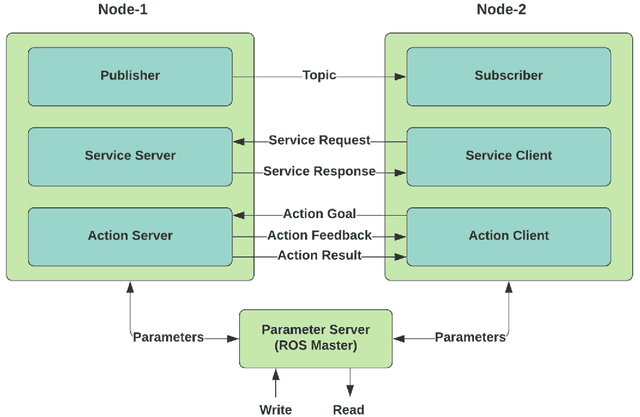

An unmanned surface vehicle (USV) can perform complex missions by continuously observing the state of its surroundings and taking actions toward a goal. A swarm of USVs working together can complete missions faster and more effectively than a single USV. In this paper, we propose an autonomous communication model for a swarm of USVs, implemented as a software system using the Robot Operating System (ROS) and Gazebo. With coordinated task completion as the main objective, a Markov decision process (MDP) provides the basis for formulating the task decision problem, with the aim of efficient localization and tracking in a highly dynamic water environment. To coordinate multiple USVs performing real-time target tracking, we propose an enhanced multi-agent reinforcement learning approach. Our scheme uses Multi-Agent Deep Deterministic Policy Gradient (MA-DDPG), an extension of the Deep Deterministic Policy Gradient (DDPG) algorithm that allows decentralized control of multiple agents in a cooperative environment. Under MA-DDPG's decentralized control, each agent makes decisions based on its own observations and objectives, which can lead to better overall performance and improved stability. Additionally, MA-DDPG supports communication and coordination among agents through the use of collective readings and rewards.
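To make the idea of decentralized control with a collective reward more concrete, the following is a minimal sketch in plain Python. It is not the authors' implementation: the `Actor` class, the linear `tanh` policy, the 2-D point-mass motion, and the distance-based `shared_reward` are all assumptions chosen for illustration. It shows only the execution side (each agent acts on its own local observation); the training side of MA-DDPG, where critics typically see all agents' observations and actions, is omitted.

```python
import math
import random


class Actor:
    """Hypothetical deterministic policy for one agent: action = tanh(W * obs)."""

    def __init__(self, obs_dim, act_dim, rng):
        # Small random weights; a trained actor network would replace these.
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(obs_dim)]
                        for _ in range(act_dim)]

    def act(self, obs):
        # Each action component is bounded to [-1, 1] by tanh.
        return [math.tanh(sum(w * o for w, o in zip(row, obs)))
                for row in self.weights]


def local_observation(own_pos, target_pos):
    # Assumed observation: target position relative to this agent only.
    return [target_pos[0] - own_pos[0], target_pos[1] - own_pos[1]]


def shared_reward(positions, target_pos):
    # Assumed collective reward: negative mean distance of the swarm to the target.
    return -sum(math.dist(p, target_pos) for p in positions) / len(positions)


def step(positions, actors, target_pos, dt=0.1):
    """Advance every agent one step using only its own observation."""
    new_positions = []
    for pos, actor in zip(positions, actors):
        vx, vy = actor.act(local_observation(pos, target_pos))
        new_positions.append((pos[0] + dt * vx, pos[1] + dt * vy))
    # All agents receive the same team reward.
    return new_positions, shared_reward(new_positions, target_pos)


rng = random.Random(0)
actors = [Actor(obs_dim=2, act_dim=2, rng=rng) for _ in range(3)]
positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
target = (2.0, 2.0)
positions, reward = step(positions, actors, target)
```

Because every agent receives the same reward, an individual policy is pushed toward actions that help the team as a whole, which is one simple way to realize the cooperative setting described above.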