Contrastive Learning of Musical Representations


Mar 17, 2021

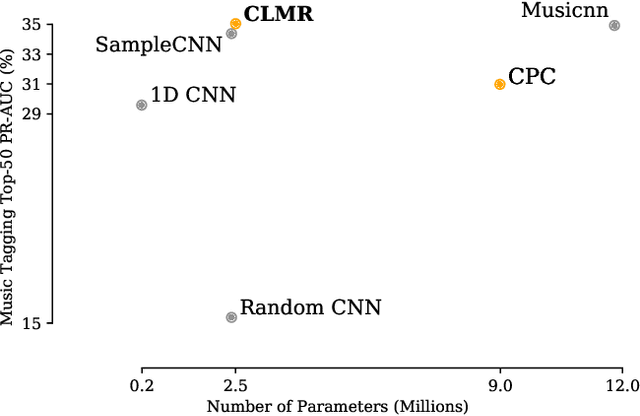

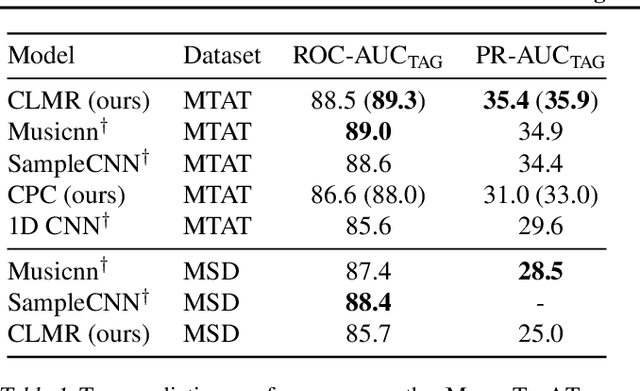

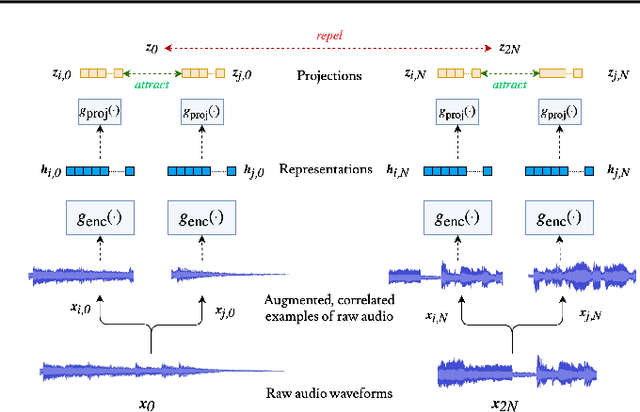

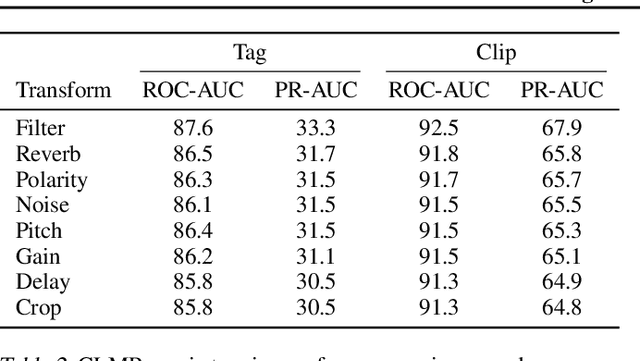

While supervised learning has enabled great advances in many areas of music, labeled music datasets remain especially hard, expensive, and time-consuming to create. In this work, we apply SimCLR to the music domain and contribute a large chain of audio data augmentations, forming a simple framework for self-supervised learning from raw music waveforms: CLMR. This approach requires no manual labeling and no preprocessing of music to learn useful representations. We evaluate CLMR on the downstream task of music classification using the MagnaTagATune and Million Song datasets. A linear classifier fine-tuned on representations from a pre-trained CLMR model achieves an average precision of 35.4% on the MagnaTagATune dataset, surpassing fully supervised models, which currently achieve 34.9%. Moreover, we show that CLMR's representations transfer to out-of-domain datasets, indicating that they capture important musical knowledge. Lastly, we show that self-supervised pre-training allows us to learn efficiently from smaller labeled datasets: we still achieve an average precision of 33.1% when using only 259 labeled songs during fine-tuning. To foster reproducibility and future research on self-supervised learning in music, we publicly release the pre-trained models and the source code of all experiments in this paper on GitHub.
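For readers unfamiliar with SimCLR, the contrastive objective it is built on (the normalized temperature-scaled cross-entropy loss, NT-Xent) can be sketched in a few lines. The snippet below is a minimal NumPy illustration, not the released CLMR code: the function name `nt_xent_loss`, the temperature of 0.5, and the embedding shapes are assumptions chosen for the example. Each row of `z_a` and `z_b` is assumed to be the embedding of one of two augmented views of the same audio clip.

```python
import numpy as np


def nt_xent_loss(z_a, z_b, temperature=0.5):
    """NT-Xent loss for paired embeddings z_a, z_b, each of shape (N, D).

    Row i of z_a and row i of z_b are assumed to come from two augmented
    views of the same clip (a positive pair); every other row in the
    batch acts as a negative. The temperature is an illustrative value.
    """
    n = z_a.shape[0]
    z = np.concatenate([z_a, z_b], axis=0).astype(np.float64)  # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # dot product = cosine
    sim = (z @ z.T) / temperature  # (2N, 2N) scaled similarities
    np.fill_diagonal(sim, -np.inf)  # a view is never its own candidate

    # Index of the positive partner for each of the 2N rows.
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])

    # Numerically stable log-softmax over each row.
    row_max = sim.max(axis=1, keepdims=True)
    log_norm = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob_pos = sim[np.arange(2 * n), positives] - log_norm
    return float(-log_prob_pos.mean())


# Hypothetical embeddings: 8 clips, 16-dimensional projections.
rng = np.random.default_rng(0)
anchor = rng.normal(size=(8, 16))
loss_aligned = nt_xent_loss(anchor, anchor + 0.01 * rng.normal(size=(8, 16)))
loss_random = nt_xent_loss(anchor, rng.normal(size=(8, 16)))
```

In this sketch, `loss_aligned` comes out lower than `loss_random`, because the loss rewards embeddings in which the two views of a clip are closer to each other than to the other clips in the batch. That is the signal that lets the encoder train without labels.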
