Continuous Emotion Recognition via Deep Convolutional Autoencoder and Support Vector Regressor


Jan 31, 2020

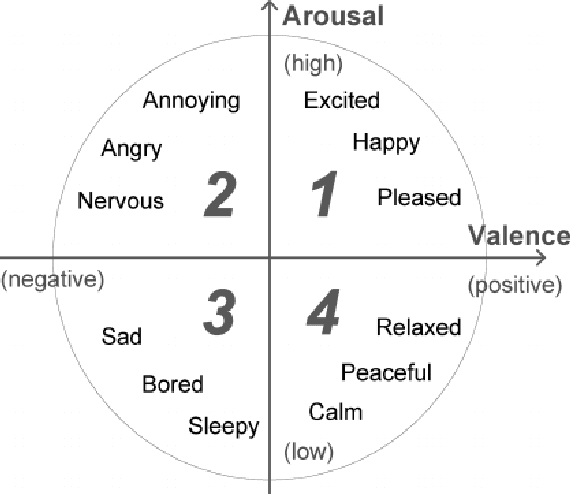

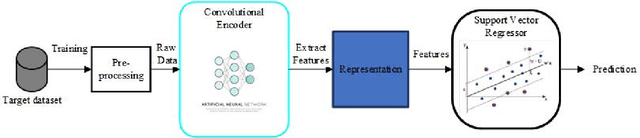

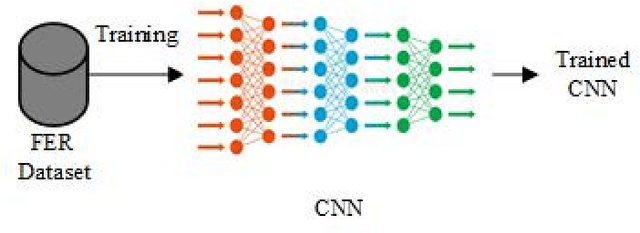

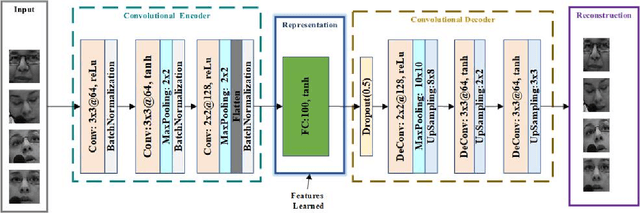

Automatic facial expression recognition is an important research area in emotion recognition and computer vision. Applications span several domains, including medical treatment, driver fatigue surveillance, sociable robotics, and other human-computer interaction systems. It is therefore crucial that a machine be able to recognize the user's emotional state with high accuracy. In recent years, deep neural networks have been used with great success in recognizing emotions. In this paper, we present a new model for continuous emotion recognition from facial expressions that uses an unsupervised learning approach based on transfer learning and autoencoders. The proposed approach also includes preprocessing and post-processing techniques that improve prediction performance, measured by the concordance correlation coefficient (CCC), for the arousal and valence dimensions. Experimental results for predicting spontaneous and natural emotions on the RECOLA 2016 dataset show that the proposed approach based on visual information achieves CCCs of 0.516 and 0.264 for valence and arousal, respectively.
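The abstract reports results as concordance correlation coefficients. For readers unfamiliar with the metric, the following is a minimal sketch of Lin's CCC in plain Python, using population (divide-by-n) variance and covariance. The function name `concordance_correlation_coefficient` and the handling of the degenerate all-constant case are assumptions made for illustration; this is not the authors' evaluation code, and the challenge's official scoring script may differ in details.

```python
from statistics import fmean


def concordance_correlation_coefficient(y_true, y_pred):
    """Lin's CCC between two equal-length sequences of numbers.

    CCC = 2 * cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y))**2),
    with population (divide-by-n) variance and covariance.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    n = len(y_true)
    mean_true = fmean(y_true)
    mean_pred = fmean(y_pred)

    var_true = sum((t - mean_true) ** 2 for t in y_true) / n
    var_pred = sum((p - mean_pred) ** 2 for p in y_pred) / n
    cov = sum(
        (t - mean_true) * (p - mean_pred) for t, p in zip(y_true, y_pred)
    ) / n

    denom = var_true + var_pred + (mean_true - mean_pred) ** 2
    if denom == 0:
        # Assumption: two identical constant sequences count as perfect agreement.
        return 1.0
    return 2 * cov / denom


if __name__ == "__main__":
    # Hypothetical per-frame annotations and predictions, for illustration only.
    gold = [0.0, 1.0, 2.0]
    pred = [1.0, 2.0, 3.0]
    print(concordance_correlation_coefficient(gold, pred))
```

Unlike Pearson correlation, CCC penalizes differences in mean and scale: in the usage example the prediction is perfectly correlated with the annotation but offset by a constant, so the score falls below 1.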
