Context-Independent Polyphonic Piano Onset Transcription with an Infinite Training Dataset


Jul 26, 2017

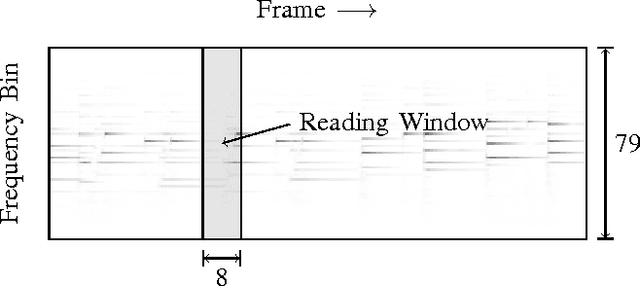

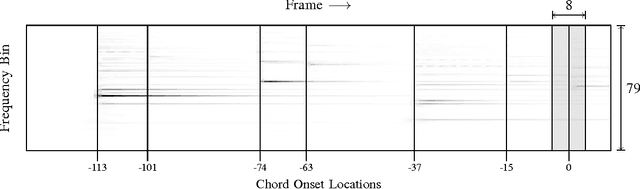

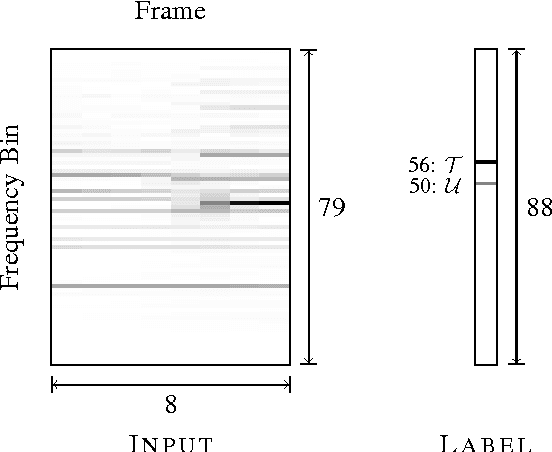

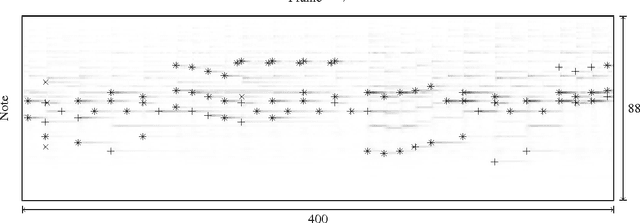

Many recent approaches to polyphonic piano note onset transcription require training a machine learning model on a large piano database. However, such approaches are limited by dataset availability: additional training data is difficult to produce, and proposed systems often perform poorly under novel recording conditions. We propose a method to quickly synthesize arbitrary quantities of training data, avoiding the need to curate large datasets. Various aspects of piano note dynamics (including nonlinearity of note signatures with velocity, different articulations, temporal clustering of onsets, and nonlinear note partial interference) are modeled to match the characteristics of real pianos. Our method also avoids the disentanglement problem, a recently noted issue affecting machine-learning-based approaches. We train a feed-forward neural network with two hidden layers on our generated training data and achieve both good transcription performance on the large MAPS piano dataset and excellent generalization qualities.
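To make the idea of an unbounded synthetic training set concrete, here is a minimal sketch in Python with NumPy. It is not the paper's synthesis model. The sample rate, the toy additive note model, the velocity exponent, the 50 ms onset window, the frame size, the layer widths, and the ReLU and sigmoid activations are all illustrative assumptions, and the helper names (`synth_note`, `make_example`, `init_mlp`, `forward`) are hypothetical. The sketch only shows the general shape of the approach: draw random notes, render them with a velocity-dependent spectrum and clustered onsets, and feed a spectral frame to a feed-forward network with two hidden layers.

```python
import numpy as np

SR = 16000      # sample rate in Hz (assumed for this sketch)
N_KEYS = 88     # piano keys, MIDI notes 21..108
FRAME = 2048    # analysis frame length in samples (assumed)

def midi_to_hz(midi):
    """Equal-tempered frequency of a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((midi - 69) / 12.0)

def synth_note(midi, velocity, n_samples, rng, n_partials=6):
    """Toy note: a sum of exponentially decaying harmonic partials.

    velocity lies in (0, 1]. The exponent grows with the partial index, so
    soft notes lose their upper partials faster than loud ones, a crude
    stand-in for the nonlinear velocity dependence of real piano tones.
    """
    t = np.arange(n_samples) / SR
    f0 = midi_to_hz(midi)
    out = np.zeros(n_samples)
    for k in range(1, n_partials + 1):
        if k * f0 >= SR / 2:        # skip partials above the Nyquist frequency
            break
        amp = velocity ** (1.0 + 0.3 * (k - 1)) / k
        phase = rng.uniform(0.0, 2.0 * np.pi)
        out += amp * np.exp(-3.0 * k * t) * np.sin(2.0 * np.pi * k * f0 * t + phase)
    return out

def make_example(rng, dur=1.0, max_notes=4):
    """Draw one (audio, label) pair; label[i] is 1 if key i has an onset."""
    audio = np.zeros(int(dur * SR))
    label = np.zeros(N_KEYS, dtype=np.float32)
    n_notes = rng.integers(1, max_notes + 1)
    for key in rng.choice(N_KEYS, size=n_notes, replace=False):
        start = int(rng.uniform(0.0, 0.05) * SR)    # onsets clustered within 50 ms
        velocity = rng.uniform(0.2, 1.0)
        audio[start:] += synth_note(21 + key, velocity, len(audio) - start, rng)
        label[key] = 1.0
    return audio.astype(np.float32), label

def features(audio):
    """Magnitude spectrum of the first Hann-windowed frame."""
    return np.abs(np.fft.rfft(audio[:FRAME] * np.hanning(FRAME)))

def init_mlp(rng, n_in, n_hidden, n_out):
    """Weights and biases for input -> hidden -> hidden -> output."""
    sizes = [n_in, n_hidden, n_hidden, n_out]
    return [(rng.normal(0.0, np.sqrt(2.0 / a), (a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    """Two ReLU hidden layers, then a sigmoid output per piano key."""
    h = x
    for W, b in params[:-1]:
        h = np.maximum(h @ W + b, 0.0)
    W, b = params[-1]
    return 1.0 / (1.0 + np.exp(-(h @ W + b)))

rng = np.random.default_rng(0)
audio, label = make_example(rng)    # can be called indefinitely for fresh data
params = init_mlp(rng, FRAME // 2 + 1, 256, N_KEYS)
probs = forward(params, features(audio))    # untrained per-key onset scores
```

Because `make_example` draws every example from a random generator, a training loop can keep requesting new batches instead of iterating over a fixed corpus. The network here is untrained, so `probs` is not meaningful until the parameters are fitted to the generated labels.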
