Confounding variables can degrade generalization performance of radiological deep learning models

Jul 13, 2018

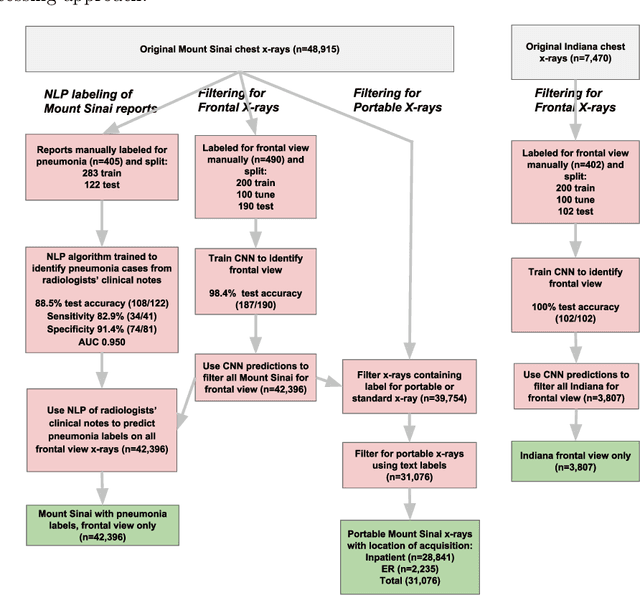

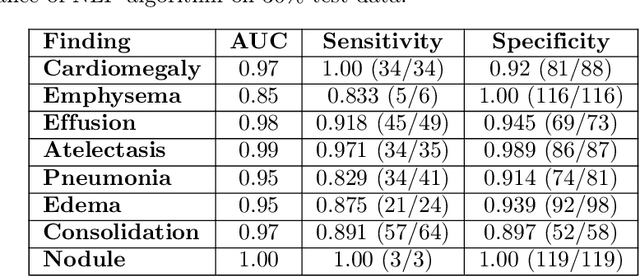

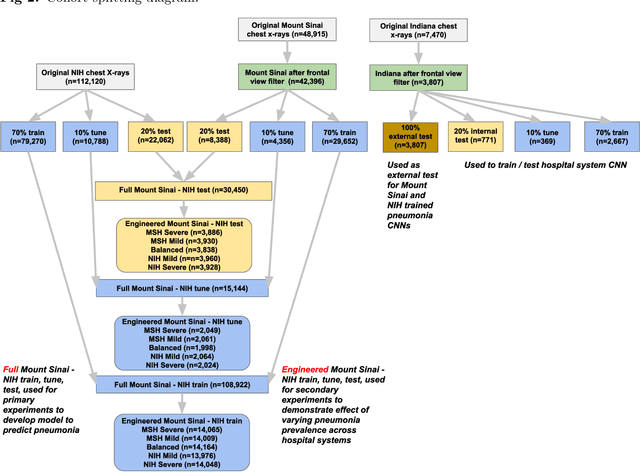

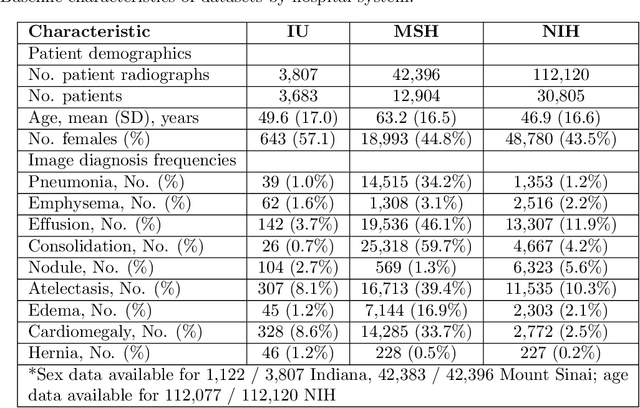

Early results from using convolutional neural networks (CNNs) to diagnose disease on x-rays have been promising, but it has not yet been shown that models trained on x-rays from one hospital or one group of hospitals will work equally well at different hospitals. Before these tools are used for computer-aided diagnosis in real-world clinical settings, we must verify their ability to generalize across a variety of hospital systems. A cross-sectional design was used to train and evaluate pneumonia screening CNNs on 158,323 chest x-rays from NIH (n=112,120 from 30,805 patients), Mount Sinai (n=42,396 from 12,904 patients), and Indiana (n=3,807 from 3,683 patients). In 3 of 5 natural comparisons, performance on chest x-rays from outside hospitals was significantly lower than on held-out x-rays from the original hospital systems. CNNs were able to detect where an x-ray was acquired (hospital system, hospital department) with extremely high accuracy and calibrate predictions accordingly. The performance of CNNs in diagnosing diseases on x-rays may reflect not only their ability to identify disease-specific imaging findings on x-rays, but also their ability to exploit confounding information. Estimates of CNN performance based on test data from hospital systems used for model training may overstate their likely real-world performance.
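To illustrate how site information alone can inflate a pooled performance estimate, here is a minimal toy sketch. It is not the paper's code or data: the two sites, their image counts, and their pneumonia prevalences are made-up assumptions, and the "model" is a hypothetical one that ignores the image entirely and outputs the prevalence of the site it recognizes.

```python
def auc(scores, labels):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical cohort (numbers invented for illustration only):
# site A has 1000 images with 30% positives, site B has 1000 images with 5% positives.
records = [("A", 1)] * 300 + [("A", 0)] * 700 + [("B", 1)] * 50 + [("B", 0)] * 950

# A "model" that has learned only where the image came from:
# it outputs the site prevalence and never looks at pathology.
site_score = {"A": 0.30, "B": 0.05}
scores = [site_score[site] for site, _ in records]
labels = [label for _, label in records]

# AUC on the pooled test set, mixing both sites.
pooled_auc = auc(scores, labels)

# AUC within each site, where the site signal carries no information.
per_site_auc = {}
for site in ("A", "B"):
    idx = [i for i, (s, _) in enumerate(records) if s == site]
    per_site_auc[site] = auc([scores[i] for i in idx], [labels[i] for i in idx])

print(f"pooled AUC:   {pooled_auc:.3f}")
print(f"per-site AUC: {per_site_auc}")
```

Under these assumed numbers the pooled AUC comes out at roughly 0.72 even though the within-site AUC is exactly 0.5 at both sites, so the apparent skill comes entirely from the difference in prevalence between sites. This is one simple way a pooled internal test set could overstate performance at a new hospital.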