Confidence-Aware Subject-to-Subject Transfer Learning for Brain-Computer Interface

Dec 15, 2021

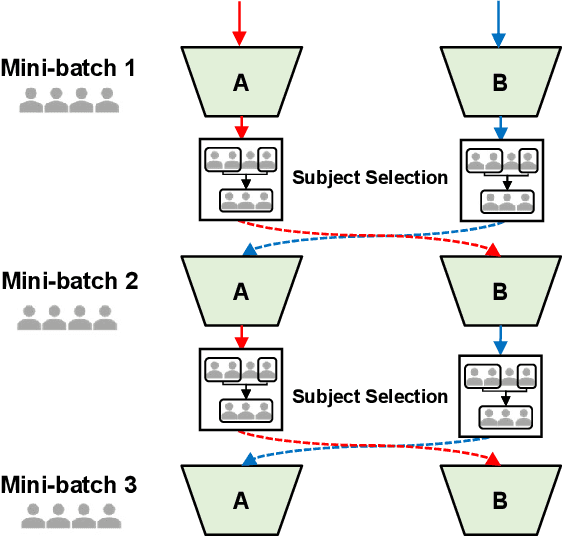

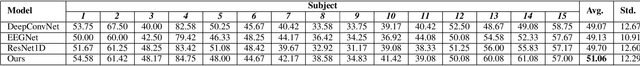

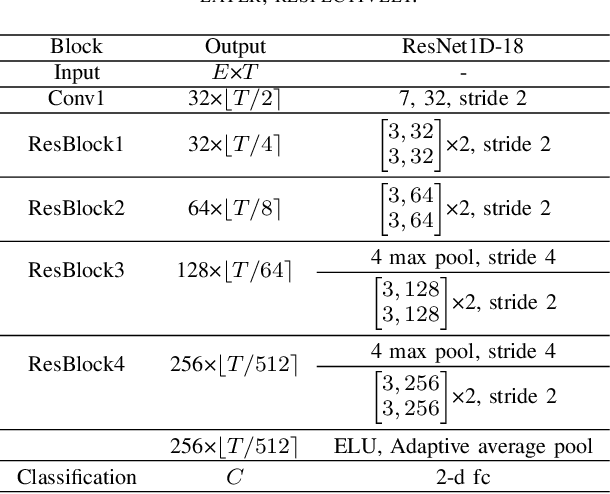

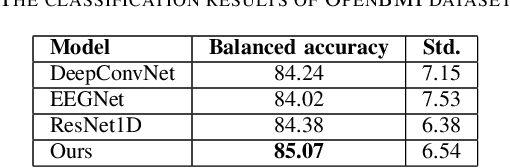

The inter- and intra-subject variability of electroencephalography (EEG) makes practical use of brain-computer interfaces (BCIs) difficult. In general, a BCI system requires a calibration procedure to tune the model every time the system is used. This requirement is recognized as a major obstacle to BCI adoption, and approaches based on transfer learning (TL) have recently emerged to overcome it. However, many BCI paradigms present the label first and then measure "imagery", so some recorded trials may not contain the intended control signal. The negative effect of source subjects whose data lack control signals has been ignored in many subject-to-subject TL studies. The main purpose of this paper is to propose a method for excluding subjects that are expected to harm subject-to-subject TL training, which generally uses data from as many subjects as possible. We propose a BCI framework that uses only high-confidence subjects for TL training. In our framework, a deep neural network selects useful subjects for the TL process and excludes noisy subjects, using a co-teaching algorithm based on the small-loss trick. We evaluated the framework with leave-one-subject-out validation on two public datasets (2020 International BCI Competition Track 4 and the OpenBMI dataset). Our experimental results show that confidence-aware TL, which selects subjects with small-loss instances, improves the generalization performance of BCI.
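The subject selection described above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not give the exact selection rule, so the sketch assumes that each source subject is scored by the mean of its per-trial losses and that a fixed fraction of subjects is kept. The function names (`select_confident_subjects`, `co_teaching_exchange`, `leave_one_subject_out`) and the `keep_ratio` parameter are hypothetical, and the loss values would come from two separately trained networks that are not shown here.

```python
from collections import defaultdict
from statistics import mean


def select_confident_subjects(losses, subject_ids, keep_ratio):
    """Small-loss trick at subject level (assumed rule: mean trial loss).

    losses      -- per-trial loss values from one network
    subject_ids -- subject label for each trial, same length as losses
    keep_ratio  -- fraction of source subjects to keep (hypothetical knob)
    """
    per_subject = defaultdict(list)
    for loss, sid in zip(losses, subject_ids):
        per_subject[sid].append(loss)

    mean_loss = {sid: mean(vals) for sid, vals in per_subject.items()}
    n_keep = max(1, int(keep_ratio * len(mean_loss)))

    # Rank subjects from smallest to largest mean loss; ties break on the id.
    ranked = sorted(mean_loss, key=lambda sid: (mean_loss[sid], sid))
    return sorted(ranked[:n_keep])


def co_teaching_exchange(losses_a, losses_b, subject_ids, keep_ratio):
    """Co-teaching step: each network trains on the subjects its peer trusts."""
    chosen_by_a = select_confident_subjects(losses_a, subject_ids, keep_ratio)
    chosen_by_b = select_confident_subjects(losses_b, subject_ids, keep_ratio)
    # Network A receives B's selection and vice versa.
    return chosen_by_b, chosen_by_a


def leave_one_subject_out(subjects):
    """Yield (target, sources) pairs, holding out one subject at a time."""
    for target in subjects:
        yield target, [s for s in subjects if s != target]


if __name__ == "__main__":
    ids = ["s1", "s1", "s2", "s2", "s3", "s3", "s4", "s4"]
    loss_a = [0.2, 0.3, 1.5, 1.4, 0.1, 0.2, 0.9, 1.1]
    loss_b = [0.3, 0.2, 1.2, 1.3, 0.9, 1.0, 0.2, 0.1]
    train_a, train_b = co_teaching_exchange(loss_a, loss_b, ids, keep_ratio=0.5)
    print("network A trains on:", train_a)
    print("network B trains on:", train_b)
```

Exchanging the selections between the two networks is the core idea of co-teaching: a network does not filter its own training data, which limits the accumulation of its own selection errors. In practice the kept fraction is usually scheduled over epochs rather than held fixed, which this sketch omits.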
