CoMPM: Context Modeling with Speaker's Pre-trained Memory Tracking for Emotion Recognition in Conversation

Paper and Code

Aug 26, 2021

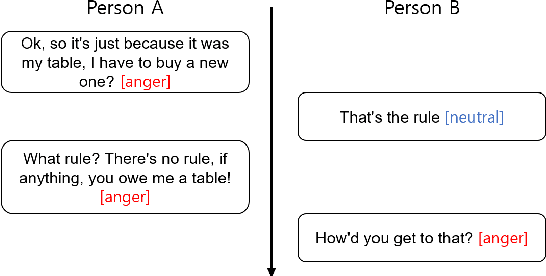

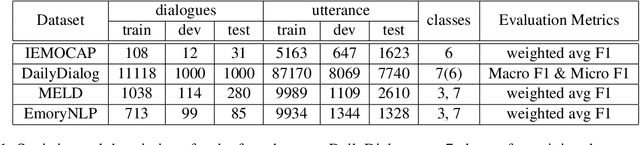

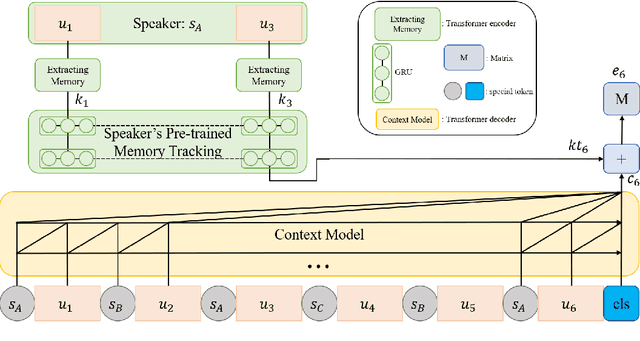

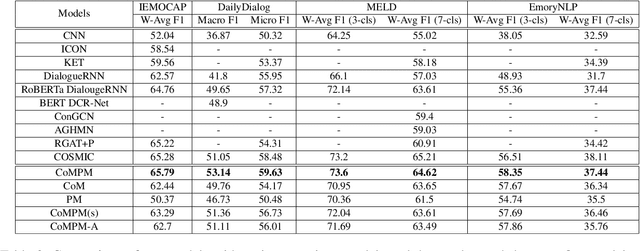

As the use of interactive machines grows, the task of Emotion Recognition in Conversation (ERC) has become more important. If machine-generated sentences reflect emotion, more human-like, sympathetic conversations become possible. Because emotion recognition in conversation is inaccurate when previous utterances are not taken into account, many studies incorporate the dialogue context to improve performance. We introduce CoMPM, which combines a context embedding module (CoM) with a pre-trained memory module (PM) that tracks the speaker's previous utterances within the context, and show that the pre-trained memory significantly improves the final accuracy of emotion recognition. We experimented on both multi-party datasets (MELD, EmoryNLP) and dyadic datasets (IEMOCAP, DailyDialog), showing that our approach achieves competitive performance on all datasets.
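
To make the idea of speaker memory tracking concrete, here is a toy sketch in Python. It is not the authors' implementation. The names `speaker_memory` and `combine`, the stand-in encoders passed as arguments, and the choice to merge vectors by element-wise addition are all assumptions made for illustration. The abstract states only that a context embedding is combined with a memory of the current speaker's previous utterances.

```python
from typing import Callable, List, Sequence, Tuple

Turn = Tuple[str, str]   # (speaker, utterance)
Vector = List[float]


def speaker_memory(dialogue: Sequence[Turn], index: int) -> List[str]:
    """Return the earlier utterances spoken by the speaker of turn `index`."""
    speaker = dialogue[index][0]
    return [text for who, text in dialogue[:index] if who == speaker]


def combine(
    dialogue: Sequence[Turn],
    index: int,
    encode_context: Callable[[List[str]], Vector],   # hypothetical stand-in for CoM
    encode_utterance: Callable[[str], Vector],       # hypothetical stand-in for PM
) -> Vector:
    """Merge a context vector with the current speaker's memory vectors.

    Element-wise addition is an assumption of this sketch, chosen only
    because it is the simplest way to merge same-sized vectors.
    """
    # The context covers every turn up to and including the current one.
    context = [text for _, text in dialogue[: index + 1]]
    merged = list(encode_context(context))
    # Add one memory vector per earlier utterance by the same speaker.
    for text in speaker_memory(dialogue, index):
        memory = encode_utterance(text)
        merged = [a + b for a, b in zip(merged, memory)]
    return merged
```

In a real system the two encoders would be pre-trained language models, and the merged vector would feed an emotion classifier. Here any function that returns a fixed-length list of floats is enough to exercise the logic.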
