Comparing the Consistency of User Studies Conducted in Simulations and Laboratory Settings

Oct 27, 2024

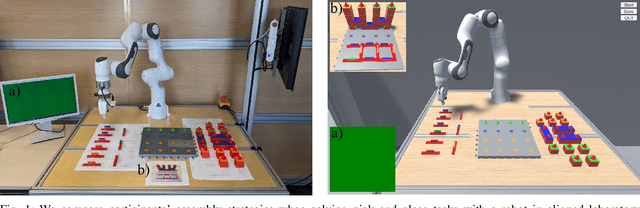

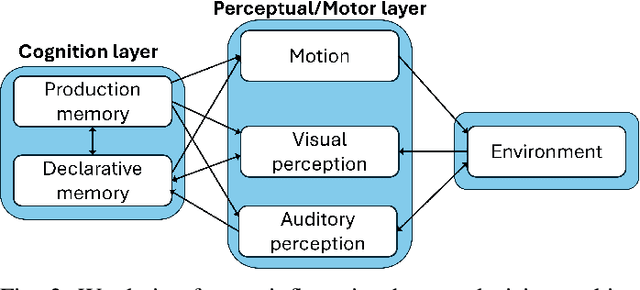

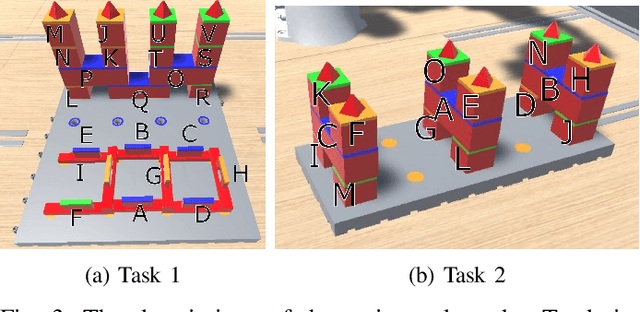

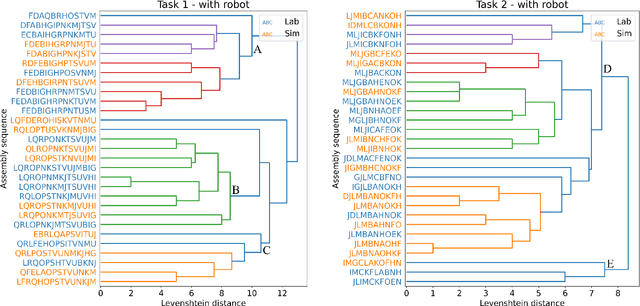

Human-robot collaboration enables highly adaptive co-working. The variety of resulting workflows makes it difficult to measure metrics such as makespan or idle time for multiple systems and tasks in a comparable manner. This issue can be addressed with virtual commissioning, in which arbitrary numbers of non-deterministic human-robot workflows in assembly tasks can be simulated. Such simulation requires data-driven models of human decisions, and gathering the large corpus of data they need through on-site user studies is time-consuming. Simulation-based studies (e.g., via crowdsourcing) would instead give access to a large pool of study participants with less effort. For the results of such studies to be reliable, human action sequences observed in a browser-based simulation environment must be shown to match those gathered in a laboratory setting. This work therefore examines to what extent cooperative assembly work in a simulated environment differs from that in an on-site laboratory setting. We show how a simulation environment can be aligned with a laboratory setting in which a robot and a human perform pick-and-place tasks together. A user study (N=29) indicates that participants' assembly decisions and perception of the situation are consistent across these different environments.
