Color naming guided intrinsic image decomposition


Oct 23, 2018

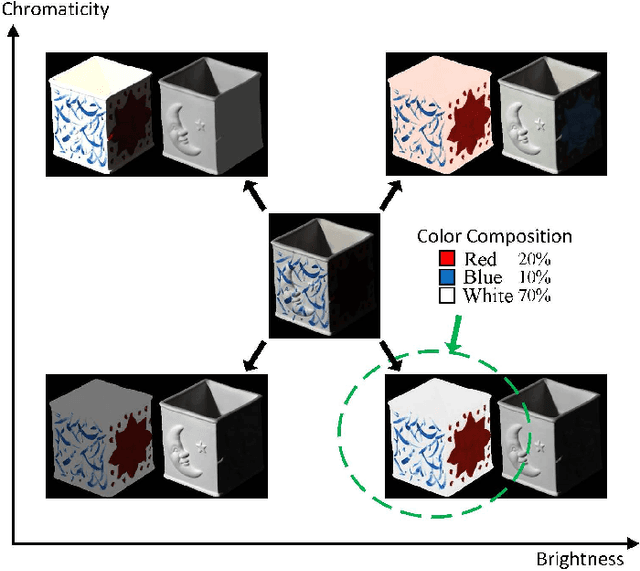

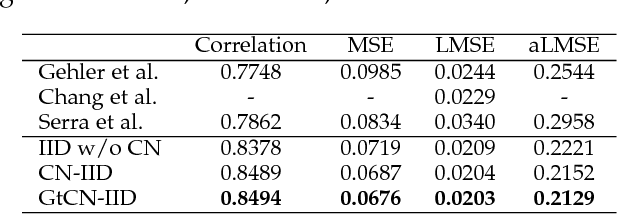

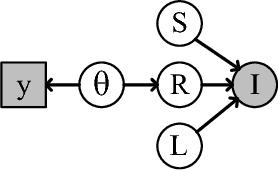

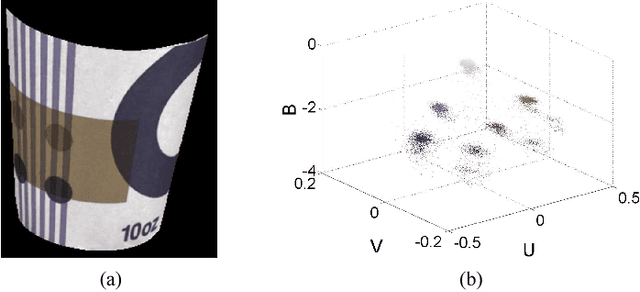

Intrinsic image decomposition is a severely under-constrained problem. User interaction can considerably reduce the ambiguity of the decomposition. The traditional form of user interaction is to draw scribbles that indicate regions of constant reflectance or shading. However, each scribble affects only a limited region, so dozens of scribbles are often needed to rectify the whole decomposition, which is time-consuming. In this paper we propose an efficient form of user interaction in which users need only annotate the color composition of the image. Color composition reveals the global distribution of reflectance, so it can help to adapt the whole decomposition directly. We build a generative model of the process by which the albedo of a material produces both the reflectance, through imaging, and the color labels, through color naming. Our model effectively fuses the physical properties of image formation with top-down information from human color perception. Experimental results show that color naming can improve the performance of intrinsic image decomposition, especially in removing the shadows left in the reflectance and in solving the color constancy problem.
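To illustrate why the problem is under-constrained, the sketch below assumes the common multiplicative image formation model, in which each pixel is the product of a reflectance color and a scalar shading value. The abstract does not state the formation model, so this is an assumption, and the function name `compose` and the pixel values are hypothetical. The sketch shows that two different reflectance and shading pairs can reproduce exactly the same image, which is the ambiguity that user input such as scribbles or color names helps to resolve.

```python
import math

def compose(reflectance, shading):
    """Form an image as the per-pixel product of RGB reflectance and scalar shading.

    Assumes the multiplicative model I = R * S (an illustrative assumption).
    """
    return [tuple(channel * s for channel in pixel)
            for pixel, s in zip(reflectance, shading)]

# Hypothetical three-pixel image: RGB reflectance and one shading value per pixel.
reflectance = [(0.8, 0.2, 0.2), (0.2, 0.7, 0.3), (0.5, 0.5, 0.9)]
shading = [1.0, 0.6, 0.3]
image = compose(reflectance, shading)

# Scale reflectance by k and shading by 1/k: a different decomposition.
k = 2.0
alt_reflectance = [tuple(channel * k for channel in pixel) for pixel in reflectance]
alt_shading = [s / k for s in shading]
alt_image = compose(alt_reflectance, alt_shading)

# Both decompositions explain the observed image equally well.
same_image = all(
    math.isclose(a, b)
    for pixel_a, pixel_b in zip(image, alt_image)
    for a, b in zip(pixel_a, pixel_b)
)
print(same_image)
```

The global scale ambiguity shown here is only the simplest case; per-pixel trade-offs between reflectance and shading are also possible, which is why additional constraints are needed.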
